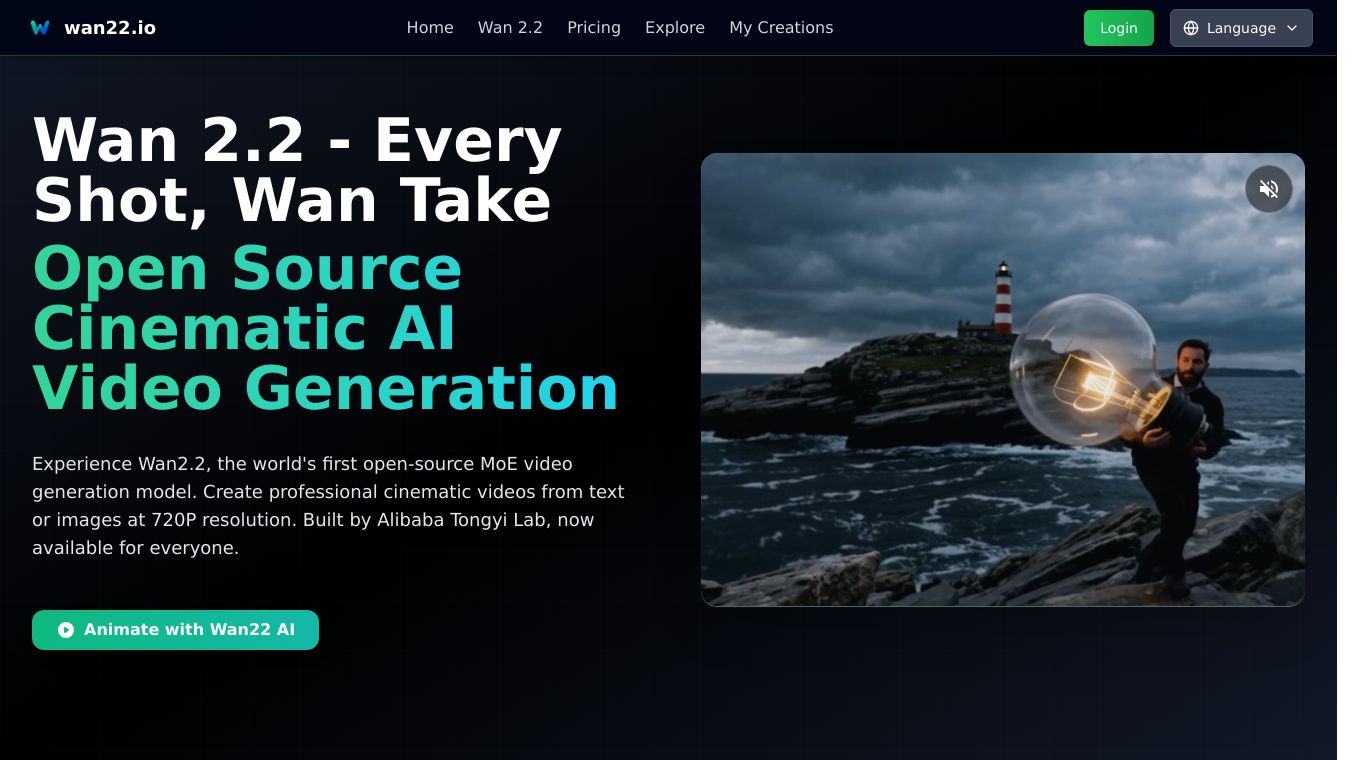

Wan2.2 Video Generation

What is Wan2.2 Video Generation?

Wan2.2 is an open-source AI video generation model that allows users to create professional cinematic videos from text or images. Developed by Alibaba Tongyi Lab, Wan2.2 is designed to bring high-quality video production to everyone, offering advanced features and open-source accessibility.

Benefits

Advanced Motion Understanding

Wan2.2 excels at recreating complex motion with enhanced fluidity. Whether it's hip-hop dancing, street parkour, or figure skating sequences, the model ensures smooth and realistic movement.

Stable Video Synthesis

The I2V-A14B model in Wan2.2 delivers stable video synthesis with realistic physics and natural movement patterns. This makes it ideal for creating dynamic cinematic sequences from static images.

Cinematic Vision Control

Wan2.2 offers fine-grained control over lighting, color, and composition, allowing users to achieve professional cinematic narratives. This level of control is perfect for filmmakers and content creators seeking high-quality results.

Open-Source Accessibility

As an open-source model, Wan2.2 provides complete access to its source code and model weights. This transparency allows developers and researchers to customize and study the model, accelerating innovation in video generation.

Use Cases

Image-to-Video Transformation

With Wan2.2's I2V-A14B model, users can transform static images into dynamic cinematic sequences. This is particularly useful for creating engaging content from still images, and the model reduces unrealistic camera movements for a more natural look.

Text-to-Video Creation

Wan2.2's Mixture-of-Experts (MoE) architecture enables users to create 720P videos from text prompts. This feature is ideal for content creators and filmmakers who want to bring their ideas to life with precise prompt following and control over large-scale motion.

Enhanced Visual Creation

Wan2.2's optimized visual generation pipeline produces images that are designed for seamless video integration and animation, which makes it a valuable tool for animators and video editors.

Cinematic Enhancement Pipeline

Wan2.2 includes a cinematic enhancement pipeline that optimizes images for video generation. This pipeline ensures consistent high-quality video output, making it easier to achieve professional results.

Vibes

Developer and Creator Experiences

Alex Chen, an independent filmmaker, praises Wan2.2's open-source nature and cinematic control, stating that it allows for professional shot language without the need for expensive equipment.

Sarah Rodriguez, an AI researcher, highlights the revolutionary MoE architecture in Wan2.2, which has significantly accelerated her research in video diffusion models.

David Kim, a content creator, appreciates Wan2.2's 720P output quality and the absence of subscription fees, making it a cost-effective solution for high-quality video generation.

Emma Thompson, a video studio owner, has integrated Wan2.2 into her production pipeline; its aesthetic data training and cinematic control features have transformed her studio's approach to pre-visualization.

TechReviewer_Pro, a technology analyst, describes Wan2.2 as a paradigm shift in AI video generation, setting a new standard for transparency and community collaboration.

CinematicAI_Dev, an open-source developer, finds the Wan2.2 codebase well-structured and appreciates the community support for implementing new features.

Additional Information

Open-Source Excellence

Wan2.2 is fully open-source: its source code and model weights are publicly accessible via GitHub. This commitment to open-source development benefits researchers and creators worldwide.

Professional Quality Output

Wan2.2 generates cinematic videos at 720P and 24 fps, with quality that rivals commercial models. Its MoE architecture supports strong performance across key evaluation dimensions.
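To make the 720P at 24 fps figures concrete, the following is a small arithmetic sketch rather than anything taken from the Wan2.2 codebase. It assumes that 720P means a 16:9 frame of 1280x720 pixels and that raw frames are stored as 8-bit RGB; the `ClipSpec` class and its method names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClipSpec:
    """Hypothetical helper describing an output clip (not part of Wan2.2)."""

    width: int = 1280   # assumption: 720P taken as 1280x720 (16:9)
    height: int = 720
    fps: int = 24       # frame rate stated in the text above

    def frame_count(self, seconds: float) -> int:
        # Number of frames needed to cover the requested duration.
        return round(seconds * self.fps)

    def raw_rgb_bytes(self, seconds: float) -> int:
        # Uncompressed size, assuming 3 bytes per pixel (8-bit RGB).
        return self.frame_count(seconds) * self.width * self.height * 3


spec = ClipSpec()
print(spec.frame_count(5))      # 120 frames for a 5-second clip
print(spec.raw_rgb_bytes(5))    # 331776000 bytes before any compression
```

Under these assumptions, a five-second clip is 120 frames and roughly 332 MB of raw pixel data, which gives a sense of how much output the model has to produce per clip.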

Advanced Cinematic Control

Wan2.2 provides fine-grained control over lighting, color, and composition, enabling users to create versatile styles with delicate detail. This advanced cinematic control is perfect for achieving professional results.

Getting Started

To get started with Wan2.2, users can download the models from GitHub, try the online demo, or access ready-to-use deployments on Hugging Face. Comprehensive documentation and community support are available to help users make the most of this powerful tool.
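As a rough illustration of the download step, the sketch below fetches model weights from Hugging Face with the `huggingface_hub` library's `snapshot_download` function. The repository IDs in `ASSUMED_REPOS` are assumptions that should be verified against the official Wan2.2 pages before use, and the helper names `resolve_target` and `download_weights` are hypothetical.

```python
from pathlib import Path

# Assumed Hugging Face repository IDs; verify them on the official
# Wan2.2 pages before relying on this mapping.
ASSUMED_REPOS = {
    "i2v": "Wan-AI/Wan2.2-I2V-A14B",  # image-to-video
    "t2v": "Wan-AI/Wan2.2-T2V-A14B",  # text-to-video
}


def resolve_target(task: str, root: str = "models") -> tuple[str, Path]:
    """Map a task name to (repo_id, local directory) without touching the network."""
    key = task.lower()
    if key not in ASSUMED_REPOS:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(ASSUMED_REPOS)}")
    repo_id = ASSUMED_REPOS[key]
    return repo_id, Path(root) / repo_id.split("/")[-1]


def download_weights(task: str, root: str = "models") -> Path:
    """Download the weights for a task and return the local directory."""
    # Imported lazily so the mapping above works without the package installed.
    from huggingface_hub import snapshot_download

    repo_id, local_dir = resolve_target(task, root)
    snapshot_download(repo_id=repo_id, local_dir=str(local_dir))
    return local_dir
```

Calling `download_weights("i2v")` would then place the files under `models/Wan2.2-I2V-A14B`. The checkpoints are large, so check available disk space first. Running inference afterwards depends on the scripts and instructions in the project's own repository, which this sketch does not cover.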

Wan2.2 represents a significant advancement in AI video generation, offering open-source accessibility, professional quality output, and advanced cinematic control. Whether you're a filmmaker, content creator, researcher, or developer, Wan2.2 provides the tools and flexibility to bring your creative vision to life.

