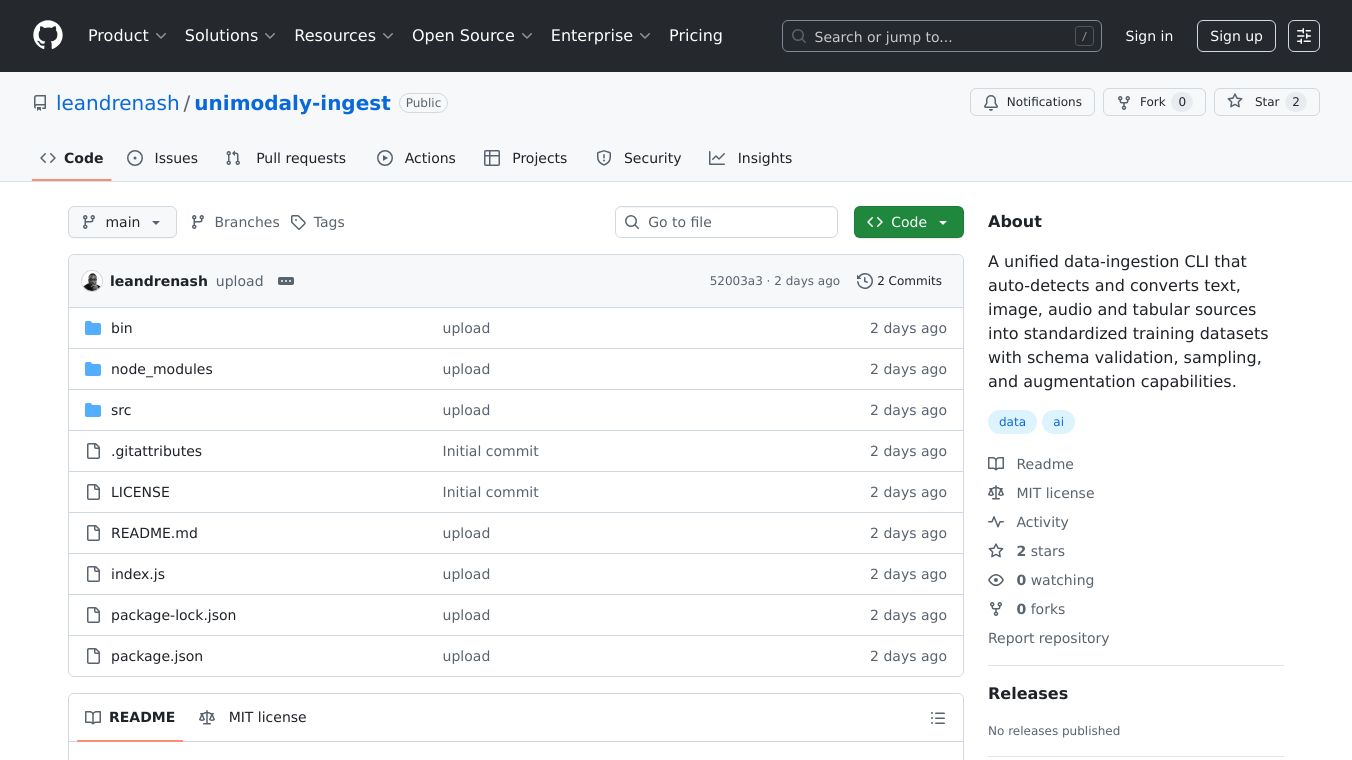

Unimodaly Ingest

Unimodaly Ingest is a command-line tool that simplifies preparing data for machine learning and other data-intensive applications. It automatically detects the type of each input (text, image, audio, or tabular data) and converts it into a standardized dataset. It can also validate the output against a schema, sample a fraction of the input, and apply data augmentation, so the resulting datasets are ready for use in downstream projects.
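How detection works is not documented. One plausible approach, sketched here purely as an illustration, maps file extensions to the four data types using the lists under Supported Data Types. Note that `.json` is listed for both text and tabular data; this sketch arbitrarily resolves it to tabular, whereas the real tool may inspect file contents instead.

```python
from pathlib import Path
from typing import Optional

# Extension lists taken from the "Supported Data Types" section.
# Assumption: detection is purely extension-based; the real tool may also inspect content.
EXTENSIONS = {
    "text": {".txt", ".md", ".xml", ".html"},
    "image": {".jpg", ".jpeg", ".png", ".gif", ".webp", ".svg", ".bmp", ".tiff"},
    "audio": {".mp3", ".wav", ".flac", ".ogg", ".m4a", ".aac"},
    # .json is listed for both text and tabular data; this sketch treats it as tabular.
    "tabular": {".csv", ".tsv", ".xlsx", ".json"},
}


def detect_type(path: str) -> Optional[str]:
    """Return the data type for a file path, or None if the extension is unsupported."""
    suffix = Path(path).suffix.lower()
    for data_type, extensions in EXTENSIONS.items():
        if suffix in extensions:
            return data_type
    return None


def group_by_type(paths):
    """Group input paths by detected type, skipping unsupported files."""
    groups = {}
    for p in paths:
        data_type = detect_type(p)
        if data_type is not None:
            groups.setdefault(data_type, []).append(p)
    return groups


if __name__ == "__main__":
    files = ["notes.md", "photo.JPG", "song.flac", "table.csv", "config.json", "binary.exe"]
    print(group_by_type(files))
```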

Benefits

Unimodaly Ingest offers several key advantages:

- Multi-modal Data Detection: Detects and processes text, image, audio, and tabular data, so one tool covers mixed-media projects.

- Schema Validation: Checks output datasets against a schema (a custom one can be supplied with `--schema`), catching malformed records early and improving data quality.

- Data Augmentation: Generates modified variants of the input, such as synonym replacement for text or rotation and flipping for images, which can improve the performance of machine learning models.

- Flexible Sampling: Keeps a chosen fraction of the input (`--sample`, a ratio from 0 to 1), making large collections easier to manage.

- Multiple Output Formats: Exports datasets as JSON, JSONL, or CSV.

- Batch Processing: Processes inputs in batches (100 by default, adjustable with `--batch-size`) to handle large datasets efficiently.

- Configuration Management: Stores processing options in a configuration file (`unimodaly.config.json`), so pipelines can be tailored and reused.

- Comprehensive Metadata: Extracts metadata and features for each data type, such as word and sentence counts for text or dimensions for images.
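The three output formats differ mainly in how records are laid out. The sketch below serializes records shaped like the Output Format example into JSON, JSONL, and CSV with the Python standard library. How the CLI itself represents the nested `metadata` and `features` objects in CSV is not documented; flattening them into dotted column names such as `metadata.words` is an assumption made only for this illustration.

```python
import csv
import io
import json


def flatten(record, prefix=""):
    """Flatten nested dicts into dotted keys, e.g. {"metadata": {"words": 2}} -> {"metadata.words": 2}."""
    flat = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=name + "."))
        else:
            flat[name] = value
    return flat


def to_json(records):
    # One JSON array holding every record.
    return json.dumps(records, indent=2)


def to_jsonl(records):
    # One compact JSON object per line, convenient for streaming large datasets.
    return "\n".join(json.dumps(r) for r in records) + "\n"


def to_csv(records):
    # Assumption: nested objects become dotted column names.
    rows = [flatten(r) for r in records]
    columns = []
    for row in rows:
        for col in row:
            if col not in columns:
                columns.append(col)
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)  # missing columns are written as empty cells
    return buf.getvalue()


if __name__ == "__main__":
    records = [
        {"type": "text", "source": "a.txt", "content": "hello world",
         "metadata": {"words": 2, "lines": 1}},
    ]
    print(to_jsonl(records), end="")
    print(to_csv(records), end="")
```

JSONL suits very large datasets because each line can be parsed independently, while CSV is the easiest to open in spreadsheet tools at the cost of losing nesting.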

Use Cases

Unimodaly Ingest is useful in various scenarios, including:

- Machine Learning: Preparing datasets for training machine learning models.

- Data Analysis: Processing and validating data for analytical purposes.

- Content Management: Managing and augmenting multimedia content for various applications.

- Research: Streamlining the data preparation process for research projects.

Installation

To install Unimodaly Ingest, run the following command:

    npm install -g unimodaly-ingest

Quick Start

Here are some basic commands to get you started with Unimodaly Ingest:

    # Process all data in a directory
    unimodaly-ingest ingest ./data --output ./processed

    # Process specific data types with augmentation
    unimodaly-ingest ingest ./images --type image --augment --output ./processed

    # Sample 50% of data and export to CSV
    unimodaly-ingest ingest ./data --sample 0.5 --format csv

    # Initialize configuration
    unimodaly-ingest config --init

Supported Data Types

Unimodaly Ingest supports a wide range of data types, including:

Text Files

Extensions: .txt, .md, .json, .xml, .html

- Encoding detection and validation

- Language detection

- Text augmentation (synonym replacement, random operations)
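The text augmentation operations resemble the "easy data augmentation" (EDA) techniques of Wei and Zou, and the example configuration exposes `synonymReplacement` and `randomInsertion` settings. The following is a minimal sketch, not the tool's implementation, assuming each setting is the fraction of words to change and using a tiny hypothetical synonym table (a real pipeline might draw on a thesaurus such as WordNet).

```python
import random

# Hypothetical synonym table for illustration only.
SYNONYMS = {
    "quick": ["fast", "rapid"],
    "happy": ["glad", "cheerful"],
    "big": ["large", "huge"],
}


def count_for(ratio, n_words):
    """Number of edits for a given ratio: round(ratio * n_words), but at least one."""
    return max(1, round(ratio * n_words))


def synonym_replacement(words, ratio, rng):
    """Replace up to count_for(ratio, len(words)) words that have known synonyms."""
    out = list(words)
    candidates = [i for i, w in enumerate(out) if w.lower() in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:count_for(ratio, len(out))]:
        out[i] = rng.choice(SYNONYMS[out[i].lower()])
    return out


def random_insertion(words, ratio, rng):
    """Insert synonyms of existing words at random positions, count_for(...) times."""
    out = list(words)
    candidates = [w.lower() for w in words if w.lower() in SYNONYMS]
    if not candidates:
        return out  # nothing to draw synonyms from
    for _ in range(count_for(ratio, len(words))):
        synonym = rng.choice(SYNONYMS[rng.choice(candidates)])
        out.insert(rng.randint(0, len(out)), synonym)
    return out


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed makes the augmentation reproducible
    words = "the quick dog was happy to see a big ball".split()
    print(" ".join(synonym_replacement(words, 0.1, rng)))
    print(" ".join(random_insertion(words, 0.1, rng)))
```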

Image Files

Extensions: .jpg, .jpeg, .png, .gif, .webp, .svg, .bmp, .tiff

- Metadata extraction (dimensions, color space, etc.)

- Feature extraction (intensity statistics, aspect ratio)

- Image augmentation (rotation, brightness, contrast, flipping)
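The image features are described only as "intensity statistics, aspect ratio". The sketch below computes plausible versions of them for a small grayscale image stored as nested lists, plus two of the listed augmentations (flipping and brightness). Treating the configuration's `brightness: 0.2` as a random scaling factor between -20% and +20% is an assumption; rotation is not covered here.

```python
import random
from statistics import mean, pstdev


def image_features(pixels):
    """Simple features for a non-empty grayscale image given as rows of 0-255 values."""
    height, width = len(pixels), len(pixels[0])
    values = [v for row in pixels for v in row]
    return {
        "width": width,
        "height": height,
        "aspectRatio": width / height,
        "meanIntensity": mean(values),
        "stdIntensity": pstdev(values),  # population standard deviation
        "minIntensity": min(values),
        "maxIntensity": max(values),
    }


def flip_horizontal(pixels):
    """Mirror each row left to right."""
    return [row[::-1] for row in pixels]


def adjust_brightness(pixels, factor):
    """Scale every intensity by (1 + factor) and clamp to 0-255."""
    return [[max(0, min(255, round(v * (1 + factor)))) for v in row] for row in pixels]


def random_brightness(pixels, max_delta, rng):
    """Brighten or darken by a factor drawn uniformly from [-max_delta, max_delta]."""
    return adjust_brightness(pixels, rng.uniform(-max_delta, max_delta))


if __name__ == "__main__":
    image = [[0, 100, 200], [50, 150, 250]]
    print(image_features(image))
    print(flip_horizontal(image))
    print(random_brightness(image, 0.2, random.Random(1)))
```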

Audio Files

Extensions: .mp3, .wav, .flac, .ogg, .m4a, .aac

- Audio metadata extraction

- Duration, sample rate, channel analysis

- Audio augmentation capabilities
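For WAV files, duration, sample rate, and channel count can be read directly from the file header. The sketch below does this with Python's standard `wave` module; it handles only uncompressed WAV, so formats such as MP3 or FLAC would need a decoding library, and it is not necessarily how Unimodaly Ingest reads audio.

```python
import io
import wave


def wav_metadata(source):
    """Read basic metadata from a WAV file path or binary file-like object."""
    with wave.open(source, "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "sampleRate": rate,
            "sampleWidthBytes": wav.getsampwidth(),
            "frames": frames,
            "durationSeconds": frames / rate,
        }


def make_silent_wav(seconds, rate=16000, channels=2):
    """Build an in-memory 16-bit silent WAV file for demonstration."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(2)  # 2 bytes = 16-bit samples
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * channels * int(seconds * rate))
    buf.seek(0)  # rewind so the buffer can be read back
    return buf


if __name__ == "__main__":
    print(wav_metadata(make_silent_wav(2.0)))
```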

Tabular Data

Extensions: .csv, .tsv, .xlsx, .json

- Schema inference

- Statistical analysis

- Data type detection

- Duplicate and null value analysis
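The tabular analyses are listed without further detail. A minimal sketch of one way to perform them on CSV input with the standard library follows; the set of strings treated as missing and the rules for resolving mixed column types are assumptions, not documented behavior.

```python
import csv
import io
from collections import Counter

# Assumption: these (case-insensitive) strings count as missing values.
NULL_MARKERS = {"", "na", "n/a", "null", "none"}


def infer_type(value):
    """Guess the type of one non-missing cell: boolean, integer, float, or string."""
    if value.lower() in {"true", "false"}:
        return "boolean"
    for kind, cast in (("integer", int), ("float", float)):
        try:
            cast(value)
            return kind
        except ValueError:
            pass
    return "string"


def profile_csv(text):
    """Infer column types and count missing values and duplicate rows in CSV text."""
    reader = csv.DictReader(io.StringIO(text))
    rows = list(reader)
    fieldnames = reader.fieldnames or []
    columns = {}
    for name in fieldnames:
        # Short rows yield None for missing fields, so normalize to "".
        values = [(row[name] or "").strip() for row in rows]
        present = [v for v in values if v.lower() not in NULL_MARKERS]
        kinds = {infer_type(v) for v in present}
        if kinds == {"integer", "float"}:
            kinds = {"float"}  # a mix of ints and floats is a float column
        if not kinds:
            kind = "unknown"  # every value is missing
        elif len(kinds) == 1:
            kind = kinds.pop()
        else:
            kind = "string"  # conflicting types fall back to string
        info = {"type": kind, "nulls": len(values) - len(present)}
        if kind in ("integer", "float"):
            numbers = [float(v) for v in present]
            info.update(min=min(numbers), max=max(numbers), mean=sum(numbers) / len(numbers))
        columns[name] = info
    # A row counts as a duplicate if an identical row appeared earlier.
    counts = Counter(tuple(row[name] for name in fieldnames) for row in rows)
    duplicates = sum(c - 1 for c in counts.values() if c > 1)
    return {"rows": len(rows), "duplicateRows": duplicates, "columns": columns}


if __name__ == "__main__":
    sample = "id,age,city\n1,34,Oslo\n2,,Lima\n1,34,Oslo\n3,41.5,NA\n"
    print(profile_csv(sample))
```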

Commands

Unimodaly Ingest provides several commands to manage data ingestion and processing:

ingest

Main command for processing data sources.

    unimodaly-ingest ingest <input> [options]

Options:

- `-o, --output <path>`: Output directory (default: `./output`)
- `-f, --format <format>`: Output format: `json`, `jsonl`, or `csv` (default: `json`)
- `-s, --sample <ratio>`: Sampling ratio between 0 and 1 (default: `1.0`)
- `-a, --augment`: Enable data augmentation
- `--schema <path>`: Custom schema file for validation
- `--config <path>`: Configuration file path
- `-v, --verbose`: Verbose output
- `-t, --type <types...>`: Process only the given data types: `text`, `image`, `audio`, `tabular`
- `--batch-size <size>`: Batch size for processing (default: `100`)
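The `--sample` and `--batch-size` options suggest a two-step flow: keep a random fraction of the inputs, then process them in fixed-size groups. The sketch below illustrates that flow under two assumptions the documentation does not state: sampling picks whole files rather than records, and the kept count is rounded to the nearest integer.

```python
import random


def sample_items(items, ratio, seed=None):
    """Keep a random subset of round(ratio * len(items)) items, preserving input order."""
    if not 0 <= ratio <= 1:
        raise ValueError("sampling ratio must be between 0 and 1")
    rng = random.Random(seed)  # pass a seed for reproducible samples
    k = round(len(items) * ratio)
    chosen = sorted(rng.sample(range(len(items)), k))
    return [items[i] for i in chosen]


def batches(items, batch_size=100):
    """Yield consecutive slices of at most batch_size items (100 mirrors the CLI default)."""
    if batch_size < 1:
        raise ValueError("batch size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


if __name__ == "__main__":
    files = [f"file_{i}.txt" for i in range(250)]
    kept = sample_items(files, 0.5, seed=7)
    sizes = [len(b) for b in batches(kept, 50)]
    print(len(kept), sizes)  # prints: 125 [50, 50, 25]
```

Sorting the sampled indices keeps the surviving files in their original order, which makes outputs easier to compare between runs.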

config

Manage configuration settings.

    unimodaly-ingest config [options]

Options:

- `--init`: Initialize the default configuration
- `--show`: Show the current configuration
- `--set <key=value>`: Set a configuration value
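The documentation does not say how `--set` addresses nested settings. Purely as an illustration, assuming keys are dotted paths into `unimodaly.config.json` (a hypothetical syntax; check `config --show` for the tool's actual behavior), applying an assignment could work like this, with values decoded as JSON where possible.

```python
import json


def apply_setting(config, assignment):
    """Apply a 'dotted.key=value' string to a nested config dict (hypothetical key syntax)."""
    key, sep, raw = assignment.partition("=")
    if not sep or not key:
        raise ValueError(f"expected key=value, got {assignment!r}")
    try:
        value = json.loads(raw)  # numbers, booleans, and null keep their JSON types
    except json.JSONDecodeError:
        value = raw  # anything else, such as 20MB, is stored as a plain string
    *parents, leaf = key.split(".")
    node = config
    for part in parents:
        child = node.setdefault(part, {})
        if not isinstance(child, dict):
            raise ValueError(f"{part!r} is a value, not a section")
        node = child
    node[leaf] = value
    return config


if __name__ == "__main__":
    config = {"text": {"maxSize": "10MB", "augmentation": {"enabled": False}}}
    apply_setting(config, "text.augmentation.enabled=true")
    apply_setting(config, "image.maxSize=50MB")
    print(json.dumps(config))
```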

validate

Validate dataset against schema.

    unimodaly-ingest validate <dataset> [options]

Options:

- `--schema <path>`: Schema file path
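The schema shown in the Schema Validation section uses JSON Schema-style keywords (`type`, `items`, `required`, `properties`, `enum`). In practice a complete validator such as Python's `jsonschema` package would normally be used; the sketch below hand-rolls only those keywords to show what the checks involve, and it is not the tool's own implementation.

```python
# Copied from the "Schema Validation" section of this document.
EXAMPLE_SCHEMA = {
    "type": "array",
    "items": {
        "type": "object",
        "required": ["type", "source", "content"],
        "properties": {
            "type": {"type": "string", "enum": ["text", "image", "audio", "tabular"]},
            "source": {"type": "string"},
            "content": {"type": ["string", "object"]},
        },
    },
}

# Only a handful of JSON types, enough for the example schema.
TYPE_CHECKS = {
    "string": lambda v: isinstance(v, str),
    "object": lambda v: isinstance(v, dict),
    "array": lambda v: isinstance(v, list),
    "boolean": lambda v: isinstance(v, bool),
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
}


def validate(instance, schema, path="$"):
    """Return error messages for the keywords type, enum, required, properties, and items."""
    errors = []
    expected = schema.get("type")
    if expected is not None:
        allowed = expected if isinstance(expected, list) else [expected]
        if not any(TYPE_CHECKS[t](instance) for t in allowed):
            # Stop here: the remaining checks assume the type is right.
            return [f"{path}: expected {' or '.join(allowed)}"]
    if "enum" in schema and instance not in schema["enum"]:
        errors.append(f"{path}: {instance!r} is not one of {schema['enum']}")
    if isinstance(instance, dict):
        for key in schema.get("required", []):
            if key not in instance:
                errors.append(f"{path}: missing required property {key!r}")
        for key, subschema in schema.get("properties", {}).items():
            if key in instance:
                errors.extend(validate(instance[key], subschema, f"{path}.{key}"))
    if isinstance(instance, list) and "items" in schema:
        for index, item in enumerate(instance):
            errors.extend(validate(item, schema["items"], f"{path}[{index}]"))
    return errors


if __name__ == "__main__":
    dataset = [
        {"type": "text", "source": "a.txt", "content": "hello"},
        {"type": "video", "source": "b.mp4"},
    ]
    for message in validate(dataset, EXAMPLE_SCHEMA):
        print(message)
```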

Configuration

Initialize a configuration file to customize processing behavior:

    unimodaly-ingest config --init

This creates `unimodaly.config.json` with settings for:

- Data-type-specific processing options
- Augmentation parameters
- Output formats and compression
- Performance settings
- Schema validation rules
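Size limits in the configuration, such as `"maxSize": "10MB"` in the example configuration, are human-readable strings. Whether the tool reads `MB` as 1,000,000 or 1,048,576 bytes is not documented; the sketch below assumes binary multiples and shows how such a limit might be parsed and checked against a file size.

```python
import re

# Assumption: binary multiples; the tool may use decimal units (1 MB = 1,000,000 bytes) instead.
UNITS = {"B": 1, "KB": 1024, "MB": 1024 ** 2, "GB": 1024 ** 3}


def parse_size(text):
    """Convert a size string such as '10MB' or '1.5 GB' into a number of bytes."""
    match = re.fullmatch(r"\s*(\d+(?:\.\d+)?)\s*([KMG]?B)\s*", text, re.IGNORECASE)
    if match is None:
        raise ValueError(f"unrecognized size: {text!r}")
    number, unit = match.groups()
    return int(float(number) * UNITS[unit.upper()])


def within_limit(file_size, max_size):
    """Return True if a file of file_size bytes fits under a limit like '10MB'."""
    return file_size <= parse_size(max_size)


if __name__ == "__main__":
    print(parse_size("10MB"), within_limit(12_000_000, "10MB"))
```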

Example configuration:

    {
      "text": {
        "encoding": "utf8",
        "maxSize": "10MB",
        "augmentation": {
          "enabled": false,
          "synonymReplacement": 0.1,
          "randomInsertion": 0.1
        }
      },
      "image": {
        "maxSize": "50MB",
        "augmentation": {
          "enabled": false,
          "rotation": 15,
          "brightness": 0.2,
          "flip": true
        }
      }
    }

Output Format

The CLI generates standardized datasets with rich metadata:

    [
      {
        "type": "text",
        "source": "/path/to/file.txt",
        "timestamp": "2025-01-27T10:30:00.000Z",
        "content": "processed content...",
        "metadata": {
          "originalLength": 1500,
          "fileSize": 1024,
          "lines": 25,
          "words": 200
        },
        "features": {
          "wordCount": 200,
          "sentenceCount": 12,
          "language": "en"
        }
      }
    ]

Schema Validation

Define custom schemas for validation:

    {
      "type": "array",
      "items": {
        "type": "object",
        "required": ["type", "source", "content"],
        "properties": {
          "type": {
            "type": "string",
            "enum": ["text", "image", "audio", "tabular"]
          },
          "source": { "type": "string" },
          "content": { "type": ["string", "object"] }
        }
      }
    }

Examples

Process Mixed Media Directory

    unimodaly-ingest ingest ./media_folder \
      --output ./datasets \
      --format json \
      --augment \
      --sample 0.8 \
      --verbose

Text-Only Processing with Custom Schema

    unimodaly-ingest ingest ./documents \
      --type text \
      --schema ./text_schema.json \
      --output ./text_dataset \
      --format jsonl

Image Dataset with Augmentation

    unimodaly-ingest ingest ./images \
      --type image \
      --augment \
      --batch-size 50 \
      --output ./image_dataset

License

Unimodaly Ingest is released under the MIT License.

About

Unimodaly Ingest automates data ingestion, making it easier to prepare datasets for machine learning and other data-intensive applications. Its range of supported data types and its configuration options make it suitable for many use cases.

This content is either user submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources from search engines like DuckDuckGo, Google Search, and SearXNG, and directly from the tool's own website and with minimal to no human editing/review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.
