Two-Step Contextual Enrichment

Two-Step Contextual Enrichment (TSCE) is a technique for making the output of large language models (LLMs) more reliable and clearer. It splits response generation into two passes: one in which the model does its thinking, and one in which it produces the answer. The approach has been tested on OpenAI's GPT-3.5 and GPT-4 and on Llama-3 8B. Separating thinking from answering reduces mistakes such as incorrect information and formatting errors, without any extra training.
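The two-pass flow described above can be sketched in a few lines of Python. This is only an illustration of the general idea, not TSCE's actual implementation or API: `two_step_answer`, the `complete` callable, the prompt wording, and `fake_complete` are all hypothetical names introduced here, and the real project may structure its passes differently.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical signature: takes chat messages, returns the model's text reply.
CompleteFn = Callable[[List[Message]], str]


def two_step_answer(question: str, complete: CompleteFn) -> Dict[str, str]:
    """Run a thinking pass, then an answering pass conditioned on it."""
    # Pass 1: ask the model only for intermediate notes, not a final answer.
    context = complete([
        {"role": "system", "content": "Produce working notes for the request. Do not answer it."},
        {"role": "user", "content": question},
    ])
    # Pass 2: answer the original question with the notes supplied as context.
    answer = complete([
        {"role": "system", "content": "Use these notes when answering:\n" + context},
        {"role": "user", "content": question},
    ])
    return {"context": context, "answer": answer}


def fake_complete(messages: List[Message]) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    system = messages[0]["content"]
    if system.startswith("Use these notes"):
        return "ANSWER based on [" + system.split("\n", 1)[1] + "]"
    return "NOTES for: " + messages[-1]["content"]


result = two_step_answer("What is 2+2?", fake_complete)
print(result["answer"])
```

In real use, `complete` would wrap a call to whichever chat model is being used. Passing it in as a parameter keeps the sketch independent of any particular client library.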

Benefits

TSCE offers several benefits:

* It works with existing models such as GPT-3.5 and GPT-4 as they are, with no extra training or adjustment.

* It makes answers more reliable by separating the thinking and answering parts.

* It is compatible with both OpenAI and Azure OpenAI APIs, making it easy to use in current workflows.

* It adds only a small amount of latency in exchange for clearer and more accurate responses.

Use Cases

TSCE can be used in many situations where accurate and reliable responses from LLMs are needed. For example:

* Improving math, calendar, and formatting tasks in models like GPT-3.5-turbo.

* Reducing rule violations in models like GPT-4.1.

* Enhancing accuracy in tasks that require step-by-step reasoning in models like Llama-3 8B.

Vibes

In testing, TSCE improved performance on math, calendar, and formatting tasks with GPT-3.5-turbo, reduced rule violations with GPT-4.1, and improved accuracy on tasks requiring chain-of-thought reasoning with Llama-3 8B.

Additional Information

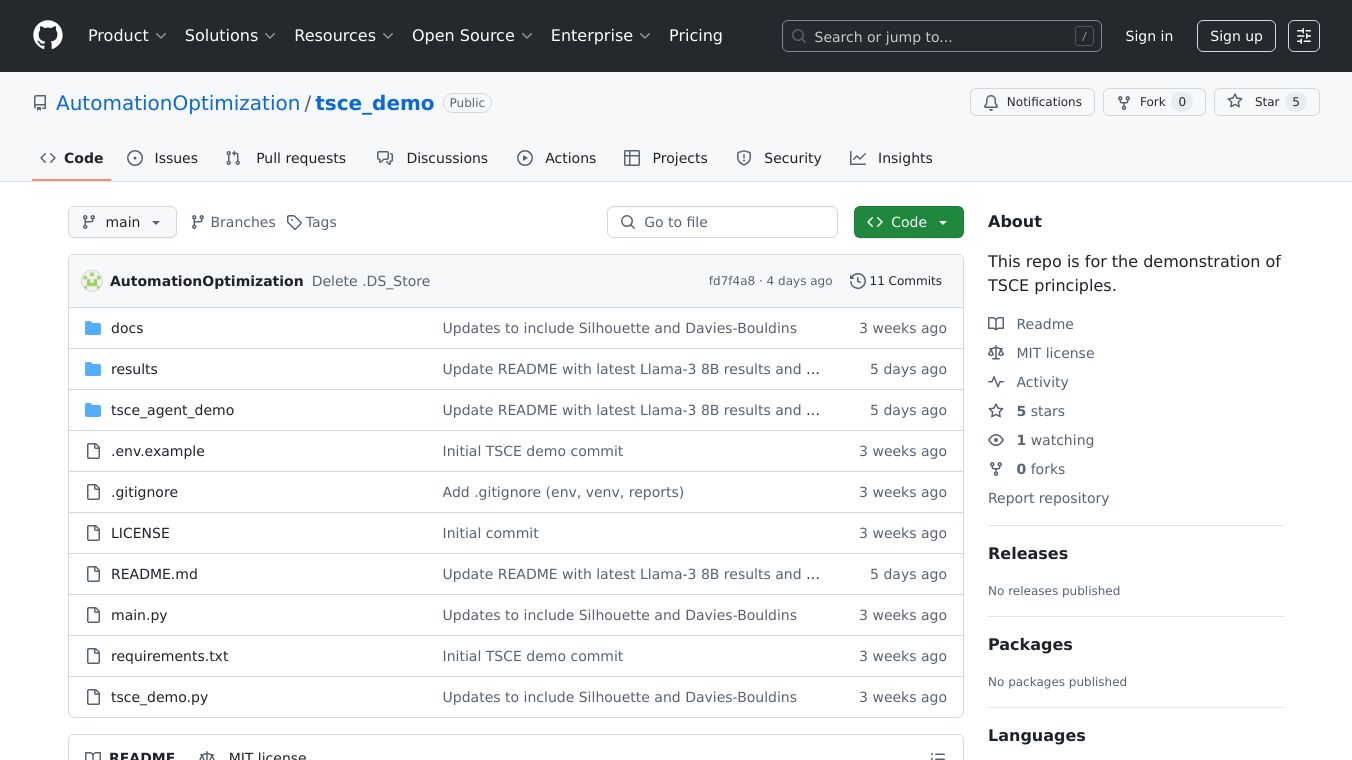

TSCE is open-source and available on GitHub. It is licensed under the MIT License, which means it is free for both commercial and private use. The project welcomes contributions from the community. Users can install TSCE using pip and start using it with a simple Python script. Customization options are available for tailoring the system instructions to specific needs.
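To illustrate what tailoring the system instructions might look like, here is a minimal sketch. It does not use the TSCE package itself: `TwoStepPrompts`, its fields, and the default prompt text are hypothetical and are not taken from the project. The only outside convention assumed is the familiar chat-message format of `role`/`content` dictionaries.

```python
from dataclasses import dataclass
from typing import Dict, List

Message = Dict[str, str]


@dataclass(frozen=True)
class TwoStepPrompts:
    """Hypothetical container for the two system instructions."""
    thinking_system: str = "Produce working notes for the request. Do not answer it."
    answering_system: str = "Answer the request, using the notes below."

    def thinking_messages(self, user_input: str) -> List[Message]:
        # Messages for the first (thinking) pass.
        return [
            {"role": "system", "content": self.thinking_system},
            {"role": "user", "content": user_input},
        ]

    def answering_messages(self, user_input: str, notes: str) -> List[Message]:
        # Messages for the second (answering) pass, with the notes appended.
        return [
            {"role": "system", "content": f"{self.answering_system}\n\nNotes:\n{notes}"},
            {"role": "user", "content": user_input},
        ]


# Tailor only the answering step, for example to enforce an output format.
json_prompts = TwoStepPrompts(
    answering_system="Reply with a single JSON object and nothing else."
)
messages = json_prompts.answering_messages("List two primes", "2 and 3 are prime")
```

Keeping the two instructions in separate fields means one pass can be customized without touching the other. Consult the project's own documentation for the options it actually exposes.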
