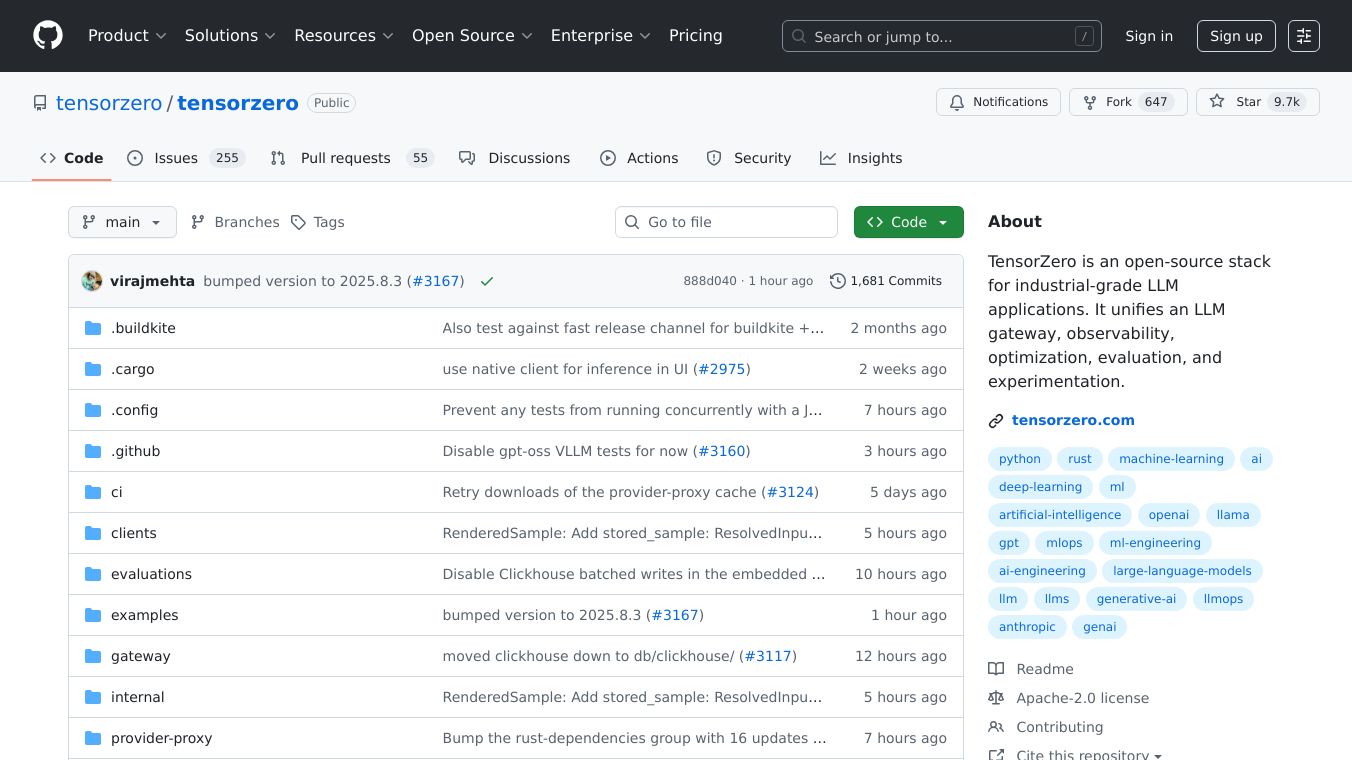

TensorZero

TensorZero is an open-source stack for industrial-grade LLM applications. It unifies five components: an LLM gateway, observability, optimization, evaluation, and experimentation. Together they cover the lifecycle of an LLM application, from development through deployment and continuous improvement.

Benefits

TensorZero gives developers and organizations a single API for every major LLM provider, whether the model is reached through a hosted API or is self-hosted. It adds less than 1 ms of p99 latency overhead at 10k+ queries per second (QPS). Its observability features let users store inferences, debug API calls, monitor metrics, and build datasets for downstream workflows. Models can be optimized through fine-tuning, prompt optimization, and inference strategy optimization. TensorZero also provides static and dynamic evaluations, and built-in A/B testing for experimentation.
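To make the A/B testing idea concrete, here is a minimal sketch of weighted variant assignment. It is not TensorZero's API or its actual routing logic: the function name `pick_variant`, the `episode_id` argument, and the hash-based scheme are all assumptions chosen for illustration. The point it shows is that hashing a stable ID keeps one conversation on one variant while traffic still splits roughly in proportion to the weights.

```python
import hashlib


def pick_variant(episode_id: str, weights: dict[str, float]) -> str:
    """Map an ID to a variant, deterministically, in proportion to the weights.

    Hypothetical helper for illustration; not part of TensorZero.
    """
    if not weights or any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-empty and non-negative")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")

    # Hash the ID to a stable, roughly uniform number between 0 and 1.
    digest = hashlib.sha256(episode_id.encode("utf-8")).digest()
    point = int.from_bytes(digest[:8], "big") / 2**64

    # Walk the cumulative distribution until it passes the point.
    names = [name for name in sorted(weights) if weights[name] > 0]
    cumulative = 0.0
    for name in names:
        cumulative += weights[name] / total
        if point < cumulative:
            return name
    # Floating-point rounding can leave the point just past the last bucket.
    return names[-1]


if __name__ == "__main__":
    split = {"baseline_prompt": 0.8, "candidate_prompt": 0.2}
    print(pick_variant("episode-123", split))
```

Calling `pick_variant` twice with the same ID and weights returns the same variant, which is what lets later feedback be attributed to the variant that produced the response.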

Use Cases

TensorZero can be used for optimizing data extraction pipelines, building multi-hop retrieval agents, fine-tuning models for specific tasks, and improving LLM output quality through best-of-N sampling. It is designed for incremental adoption: users can start with a simple OpenAI wrapper and grow it into a production-ready LLM application with observability and fine-tuning, a step that is said to take as little as 5 minutes. The tool supports every major programming language and integrates with a wide range of model providers.
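Best-of-N sampling, mentioned above, can be sketched in a few lines: generate several candidate responses, score each one, and keep the highest-scoring candidate. The code below is a generic illustration, not TensorZero's implementation or configuration. `best_of_n`, `fake_generate`, and `fake_score` are hypothetical names, and the two stand-in functions replace what would normally be an LLM call and a judge or reward model.

```python
from typing import Callable


def best_of_n(
    prompt: str,
    generate: Callable[[str, int], str],
    score: Callable[[str, str], float],
    n: int = 4,
) -> str:
    """Sample n candidates for the prompt and return the highest-scoring one."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate(prompt, i) for i in range(n)]
    return max(candidates, key=lambda candidate: score(prompt, candidate))


def fake_generate(prompt: str, seed: int) -> str:
    # Stand-in for an LLM call: returns a canned answer per seed.
    canned = [
        "Paris",
        "The capital of France is Paris.",
        "I am not sure.",
        "France",
    ]
    return canned[seed % len(canned)]


def fake_score(prompt: str, candidate: str) -> float:
    # Toy judge: one point for naming the answer, one for a full sentence.
    return float("Paris" in candidate) + float(candidate.endswith("."))


if __name__ == "__main__":
    print(best_of_n("What is the capital of France?", fake_generate, fake_score))
```

The trade-off is cost: each request makes `n` generation calls plus scoring, in exchange for a better chance that at least one candidate is good.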

Additional Information

TensorZero is 100% self-hosted and open-source, with no paid features. Its published examples cover the scenarios listed under Use Cases, from data extraction pipelines and multi-hop retrieval agents to task-specific fine-tuning and best-of-N sampling.

