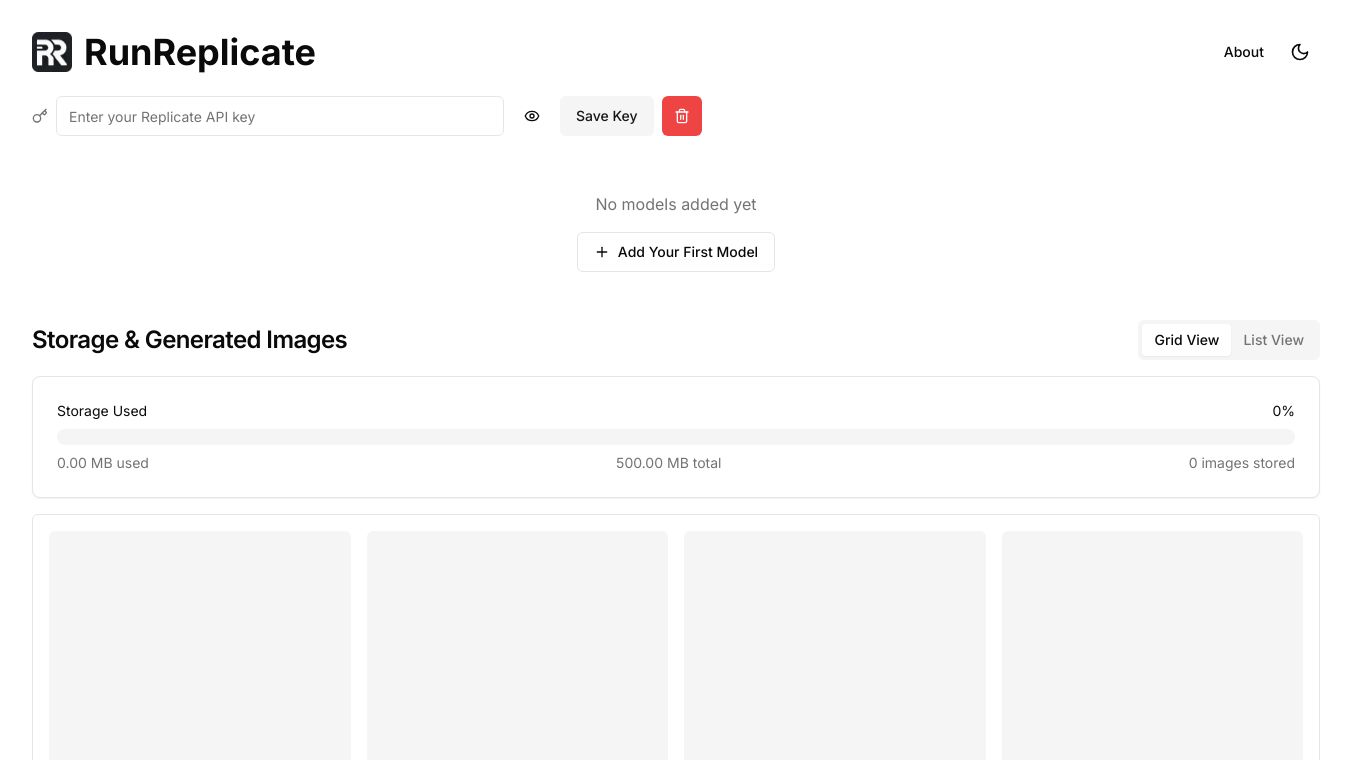

RunReplicate

RunReplicate is a platform for deploying and managing machine learning models in the cloud. Founded in 2019, it offers tools and services that let developers run and fine-tune open-source models without managing their own infrastructure. A standout feature is its pay-per-use pricing, which bills users by the second for fine-tuning and inference.

Key Features

Custom Model Deployment

RunReplicate uses Cog, an open-source tool, to deploy custom models. Cog packages a model into a container with a generated HTTP API server, and RunReplicate runs that container on a large cloud cluster. The cluster scales with demand, so users pay only for the compute their predictions actually use.
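Cog's generated server accepts `POST /predictions` with a JSON body of the form `{"input": {...}}` and replies with a JSON prediction that carries a `status` and an `output`. The sketch below mimics that request/response contract with Python's standard library so the shape is easy to see. It is not Cog itself: the real server returns additional fields, and the `predict` function here is a hypothetical stand-in for a model.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(prompt: str, duration: int = 8) -> str:
    # Hypothetical model: a real predictor would load weights once and run inference here.
    return f"generated {duration}s clip for: {prompt}"


def handle_prediction(body) -> dict:
    """Map a {"input": {...}} request body to a simplified prediction response."""
    try:
        inputs = body.get("input", {})
        output = predict(**inputs)
    except Exception as exc:  # missing or unexpected inputs become a failed prediction
        return {"status": "failed", "error": str(exc)}
    return {"status": "succeeded", "output": output}


class PredictionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predictions":
            self.send_error(404)
            return
        # Read the JSON request body (an empty body is treated as {}).
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length) or b"{}")
        payload = json.dumps(handle_prediction(body)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def make_server(host: str = "127.0.0.1", port: int = 5000) -> HTTPServer:
    # Cog's server listens on port 5000 inside its container; call .serve_forever() to run this one.
    return HTTPServer((host, port), PredictionHandler)
```

In an actual Cog project, the model code lives in a `Predictor` class referenced from `cog.yaml`, and Cog generates the server for you; the sketch only shows the contract a client sees.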

Model Hosting and Discovery

RunReplicate is both a model hosting and a model discovery platform. It has a library of open-source models that developers can run with just a few lines of code. The platform also automatically generates an API server for custom machine learning models, making them easy to deploy and manage in a production-ready environment.
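Running a hosted model from the library comes down to one HTTP call. The sketch below builds, without sending, a request to RunReplicate's public HTTP API. The `https://api.replicate.com/v1/predictions` endpoint, the `Bearer` token header, and the `{"version": ..., "input": ...}` body reflect the API as publicly documented at the time of writing and should be checked against the current reference; the token, version ID, and input names are placeholders.

```python
import json
import urllib.request

API_URL = "https://api.replicate.com/v1/predictions"


def build_prediction_request(token: str, version: str, inputs: dict) -> urllib.request.Request:
    """Build (but do not send) a request that starts a prediction on a hosted model."""
    body = json.dumps({"version": version, "input": inputs}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",  # placeholder API token
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the request to `urllib.request.urlopen` would submit it; the response describes a prediction whose `status` can be polled until it finishes. The official `replicate` Python client wraps the same calls.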

Benefits

Deploying RunReplicate models is simplified by tools like Cog and Brev. Cog builds production-ready containers for machine learning models, while Brev, a separate service, finds and provisions AI-ready instances across various cloud providers. Together they spare developers from assembling the underlying infrastructure themselves.

Use Cases

To deploy a model using RunReplicate and Brev, follow these steps.

- Create a Brev.dev Account. Make sure you have a payment method set up, or connect your own cloud account.

- Find the Model and Cog Container. For example, use joehoover's musicgen model from RunReplicate. Copy the run command from the model's RunReplicate page.

- Configure the Brev Instance. Create a new Brev instance, add an install script, and enter the run command to deploy the model.

- Select GPU and Deploy. Choose the desired GPU, name the instance, and create it.

- Expose Your Model. Share the service and configure access settings to make the model API private or public.
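The steps above can be sketched in code. The first helper assembles the kind of `docker run` command a model's RunReplicate page provides for its Cog image (images are served from the `r8.im` registry, and the Cog server listens on port 5000 inside the container). The second builds a request to the `/predictions` route once the Brev instance exposes that port. The version hash, the exposed URL, and the `prompt` input name are hypothetical; copy the real run command from the model page and check the model's documented inputs.

```python
import json
import shlex
import urllib.request


def cog_run_command(model: str, version: str, host_port: int = 5000) -> str:
    """Return a shell-ready `docker run` line for a Cog image on the r8.im registry."""
    image = f"r8.im/{model}@sha256:{version}"  # version is a placeholder hash here
    # Map the chosen host port to the Cog server's port 5000 and expose all GPUs.
    return shlex.join(["docker", "run", "-d", "-p", f"{host_port}:5000", "--gpus=all", image])


def local_prediction_request(base_url: str, inputs: dict) -> urllib.request.Request:
    """Build (but do not send) a POST to the Cog server's /predictions route."""
    body = json.dumps({"input": inputs}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/predictions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

The string from `cog_run_command` is what would go into the Brev instance's install script; `local_prediction_request` would then be pointed at whatever URL Brev assigns to the shared service.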

Cost/Price

As of May 2023, RunReplicate's pricing for different GPUs compared with similar GPUs on Google Cloud as follows (USD per hour).

T4: RunReplicate $1.98, Google Cloud $0.44

A100 40GB: RunReplicate $8.28, Google Cloud $3.67

A100 80GB: RunReplicate $11.52, Google Cloud $6.21
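Because RunReplicate bills by the second, the hourly rates quoted above translate into per-second prices, and a job's cost is that rate times its runtime. The sketch below does the arithmetic with `Decimal` to avoid floating-point rounding. It assumes billing is exactly the hourly rate divided by 3,600 and ignores any minimums or setup time.

```python
from decimal import ROUND_HALF_UP, Decimal

# RunReplicate hourly rates quoted above (USD, May 2023).
RUNREPLICATE_HOURLY = {
    "T4": Decimal("1.98"),
    "A100 40GB": Decimal("8.28"),
    "A100 80GB": Decimal("11.52"),
}


def per_second_rate(hourly: Decimal) -> Decimal:
    """Convert an hourly price to a per-second price."""
    return hourly / 3600


def job_cost(gpu: str, seconds: int) -> Decimal:
    """Cost of a job that keeps one GPU busy for `seconds`, rounded to 1/100 of a cent."""
    cost = per_second_rate(RUNREPLICATE_HOURLY[gpu]) * seconds
    return cost.quantize(Decimal("0.0001"), rounding=ROUND_HALF_UP)
```

Under these assumptions the T4 works out to $0.00055 per second, so a 30-second inference costs about $0.0165.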

RunReplicate's pay-per-use model offers flexibility, but cold starts (booting a model that is not already running) can add latency and, in some cases, extra cost. For high-volume predictions or fine-tuning, dedicated infrastructure from providers like Google Cloud, AWS, or Lambda Labs may be more cost-effective.
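Whether dedicated infrastructure is cheaper depends on utilization. If a dedicated GPU costs D per hour around the clock and RunReplicate charges R per busy hour, renting full time wins once the GPU is busy more than D / R of the time. The sketch below computes that break-even point from the prices above. It assumes the dedicated instance runs all month (about 730 hours) and ignores cold starts, discounts, storage, and operations effort.

```python
HOURS_PER_MONTH = 730  # 24 * 365 / 12


def breakeven_utilization(pay_per_use_hourly: float, dedicated_hourly: float) -> float:
    """Busy fraction above which a full-time dedicated GPU is cheaper than pay-per-use."""
    return dedicated_hourly / pay_per_use_hourly


def monthly_costs(busy_hours: float, pay_per_use_hourly: float,
                  dedicated_hourly: float) -> tuple[float, float]:
    """Return (pay-per-use cost, dedicated cost) for one month."""
    pay_per_use = busy_hours * pay_per_use_hourly    # billed only while busy
    dedicated = HOURS_PER_MONTH * dedicated_hourly   # billed around the clock
    return pay_per_use, dedicated


# (RunReplicate, Google Cloud) USD per hour, as quoted above.
PRICES = {
    "T4": (1.98, 0.44),
    "A100 40GB": (8.28, 3.67),
    "A100 80GB": (11.52, 6.21),
}

for gpu, (rr, gcp) in PRICES.items():
    print(f"{gpu}: dedicated is cheaper above {breakeven_utilization(rr, gcp):.0%} utilization")
```

The loop prints break-even points of 22%, 44%, and 54% for the T4, A100 40GB, and A100 80GB respectively, which is why steady, high-volume workloads tend to favor dedicated instances.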

Funding

RunReplicate launched out of stealth with $17.8 million in venture capital, including a $12.5 million Series A led by Andreessen Horowitz. The company has grown significantly since mid-2022, with a 149% month-over-month increase in active users and 125% growth in API calls. Notable enterprise customers include Character.ai, Labelbox, and Unsplash.

Reviews/Testimonials

RunReplicate stands out as a powerful, user-friendly platform for deploying and managing machine learning models. Its focus on simplifying deployment and its pay-per-use pricing make it an attractive option for developers and enterprises alike. As demand for generative AI grows, RunReplicate is well positioned to play a significant role in AI deployment.
