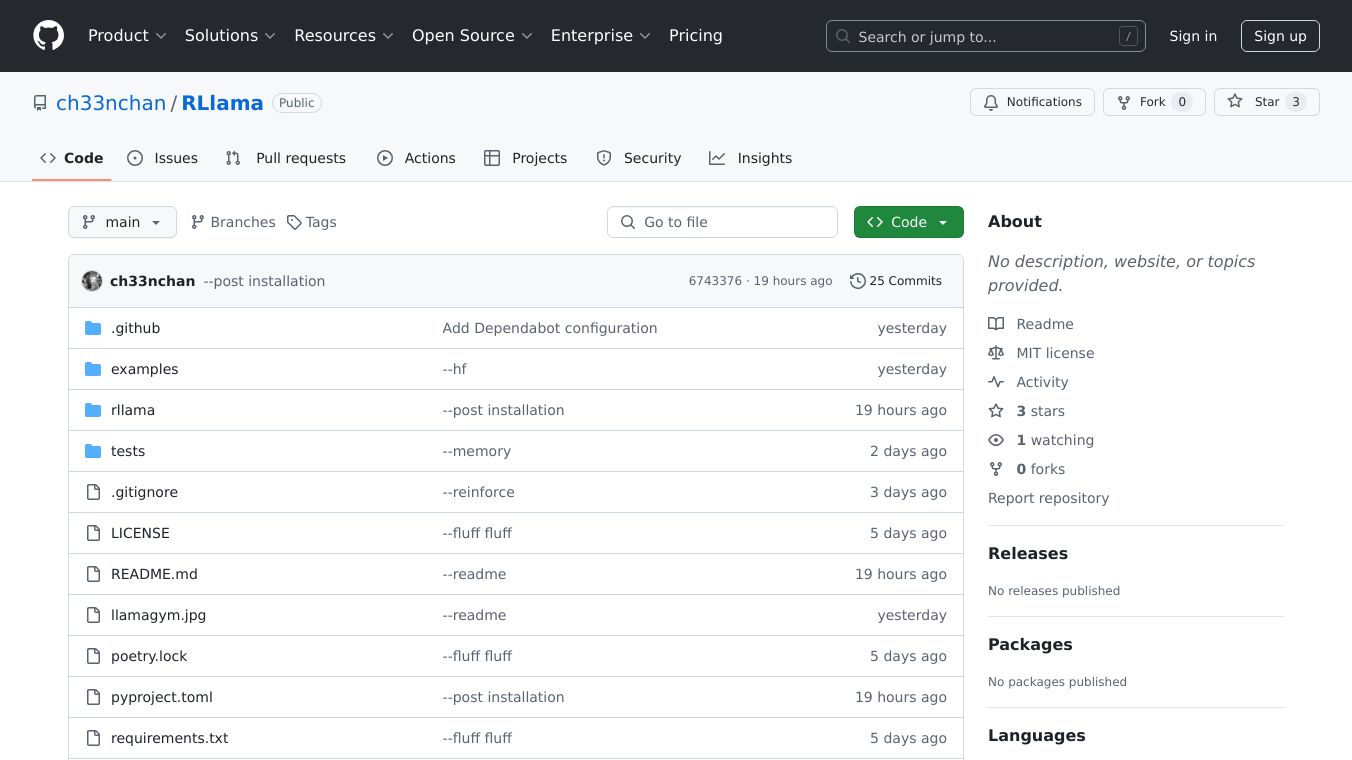

RLLama

RLLama is a tool for running large language models like LLaMA directly on your local machine. It is described as working on macOS, Linux, and Windows, so you can use these models without relying on cloud services.

Key Features

RLLama is written in Rust and was inspired by a CPU implementation that could run GPT-J 6B models. This makes it a high-performance option for running LLaMA models on your CPU. Performance depends on the model size and your hardware. For instance, on an AMD Ryzen 9 3950X, the LLaMA-7B model takes about 552 ms per token at f16 precision. With OpenCL on an RTX 3090 Ti, that drops to around 247 ms per token.
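
To put the per-token figures in perspective, the following minimal sketch converts them into tokens per second and an approximate generation time. The function names and the 100-token response length are illustrative assumptions, not part of RLLama. Only the 552 ms and 247 ms figures come from the benchmarks quoted above.

```rust
/// Convert a per-token latency in milliseconds into tokens per second.
fn tokens_per_second(ms_per_token: f64) -> f64 {
    1000.0 / ms_per_token
}

/// Estimate the wall-clock seconds needed to generate `n_tokens`,
/// assuming the per-token latency stays constant (a simplification).
fn generation_seconds(ms_per_token: f64, n_tokens: u32) -> f64 {
    ms_per_token * f64::from(n_tokens) / 1000.0
}

fn main() {
    // Benchmark figures quoted in the text for LLaMA-7B at f16 precision.
    let cpu_ms = 552.0;
    let opencl_ms = 247.0;
    // Hypothetical response length, chosen only for illustration.
    let n_tokens = 100;

    println!("CPU:    {:.2} tokens/s", tokens_per_second(cpu_ms));
    println!("OpenCL: {:.2} tokens/s", tokens_per_second(opencl_ms));
    println!("Speedup with OpenCL: {:.2}x", cpu_ms / opencl_ms);
    println!(
        "{} tokens: {:.1} s on CPU, {:.1} s with OpenCL",
        n_tokens,
        generation_seconds(cpu_ms, n_tokens),
        generation_seconds(opencl_ms, n_tokens)
    );
}
```

In these terms the CPU figure is a little under two tokens per second, and the OpenCL figure is roughly four.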

Setting up RLLama is straightforward. It is available on crates.io and can be installed using cargo. The implementation uses nightly Rust, so you will need a nightly toolchain installed alongside cargo. You will also need to download the LLaMA-7B weights and tokenizer model from the official repository.

Benefits

One of the biggest advantages of RLLama is its flexibility. You can adjust sampling settings such as temperature, top-p, top-k, and repetition penalty using command line arguments. You can also enable OpenCL with a feature flag to offload work to a GPU for better performance.
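
To illustrate what these four sampling settings typically do, here is a minimal sketch of a common way to apply them to a model's raw output scores (logits). It is not RLLama's actual implementation. The struct, field names, function name, and example values are all hypothetical, and the order of the steps is one conventional choice among several.

```rust
/// Hypothetical sampling settings; names are illustrative, not RLLama's API.
struct SamplingParams {
    temperature: f32,
    top_p: f32,
    top_k: usize,
    repetition_penalty: f32,
}

/// Turn raw logits into a filtered, renormalized probability distribution.
/// Returns (token_id, probability) pairs sorted by descending probability.
fn filtered_distribution(
    logits: &[f32],
    recent_tokens: &[usize],
    p: &SamplingParams,
) -> Vec<(usize, f32)> {
    let mut logits = logits.to_vec();

    // Repetition penalty: push down the scores of recently generated tokens.
    for (id, logit) in logits.iter_mut().enumerate() {
        if recent_tokens.contains(&id) {
            *logit = if *logit > 0.0 {
                *logit / p.repetition_penalty
            } else {
                *logit * p.repetition_penalty
            };
        }
    }

    // Temperature-scaled softmax: lower temperature sharpens the distribution.
    let t = p.temperature.max(1e-6);
    let max = logits.iter().cloned().fold(f32::NEG_INFINITY, f32::max);
    let mut probs: Vec<(usize, f32)> = logits
        .iter()
        .enumerate()
        .map(|(id, &l)| (id, ((l - max) / t).exp()))
        .collect();
    let sum: f32 = probs.iter().map(|&(_, w)| w).sum();
    for entry in probs.iter_mut() {
        entry.1 /= sum;
    }

    // Top-k: keep only the k most likely tokens.
    probs.sort_by(|a, b| b.1.total_cmp(&a.1));
    probs.truncate(p.top_k.max(1));

    // Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    let mut cumulative = 0.0;
    let mut keep = 0;
    for &(_, prob) in probs.iter() {
        cumulative += prob;
        keep += 1;
        if cumulative >= p.top_p {
            break;
        }
    }
    probs.truncate(keep);

    // Renormalize so the surviving probabilities sum to 1.
    let total: f32 = probs.iter().map(|&(_, w)| w).sum();
    for entry in probs.iter_mut() {
        entry.1 /= total;
    }
    probs
}

fn main() {
    // Toy logits over a four-token vocabulary; token 0 was generated recently.
    let logits = [2.0, 1.0, 0.5, -1.0];
    let params = SamplingParams {
        temperature: 0.8,
        top_p: 0.9,
        top_k: 3,
        repetition_penalty: 1.2,
    };
    for (id, prob) in filtered_distribution(&logits, &[0], &params) {
        println!("token {id}: {prob:.3}");
    }
}
```

A sampler would then draw the next token at random from the returned distribution. Lower temperature and smaller top-k or top-p values make the output more predictable, while a repetition penalty above 1 discourages the model from repeating itself.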

Use Cases

RLLama is perfect for anyone looking to run large language models locally. It is especially useful for developers and researchers who need high performance without relying on cloud services. Whether you are working on natural language processing tasks or just experimenting with language models, RLLama offers a robust solution.

Cost/Price

No specific cost or pricing information is available for RLLama.

Funding

No funding details are available for RLLama.

Reviews/Testimonials

No user testimonials or reviews are available for RLLama. However, its performance benchmarks and features suggest it is a capable tool for running large language models locally.
