Qwen3 Coder

Qwen3 Coder: Alibaba Cloud's Advanced AI for Code Generation and Comprehension

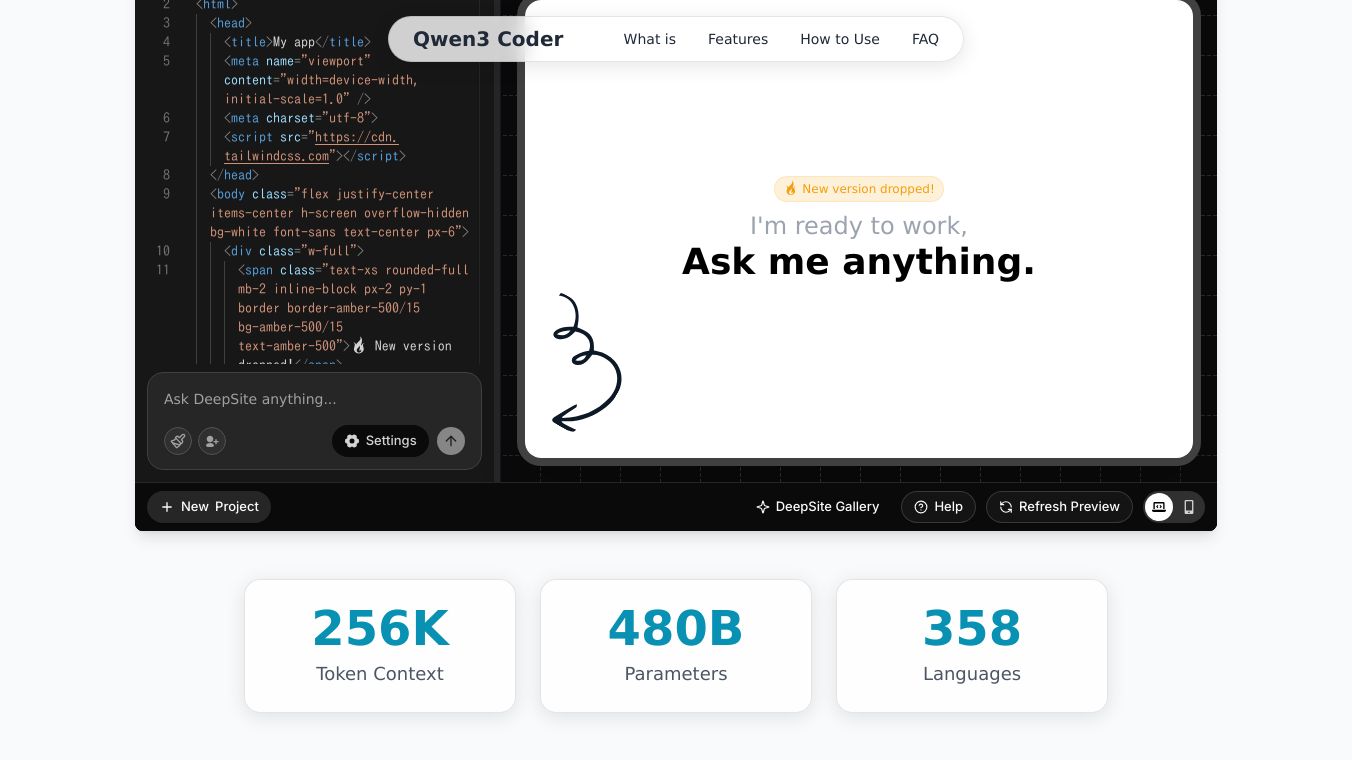

Qwen3 Coder is an open-weight AI model developed by Alibaba Cloud for code generation, code comprehension, and agentic task execution. It has 480 billion parameters in a Mixture-of-Experts (MoE) architecture and was trained on 7.5 trillion tokens, with a strong focus on source code across 358 programming languages. It is reported to reach GPT-4-level performance on coding benchmarks while remaining openly available.

Benefits

Massive Model Architecture

Qwen3 Coder uses a large MoE architecture with 480 billion total parameters distributed across 160 expert modules. Only about 35 billion parameters are active for any given token during inference, so per-token compute is far lower than for a dense model of the same size. The model is a 62-layer causal Transformer with grouped-query attention (GQA), and it supports a native 256K-token context window that can be extended to 1M tokens using YaRN, a RoPE-based context-extension method.
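
To illustrate how an MoE layer keeps most of its parameters idle, here is a toy top-k router in plain Python. It is a minimal sketch, not Qwen3 Coder's implementation: the figure of 8 active experts per token is an assumption taken from the public model card rather than this article, and the "experts" are hypothetical scalar functions standing in for real feed-forward blocks.

```python
import math
import random

NUM_EXPERTS = 160  # expert count stated in the article
TOP_K = 8          # assumption: experts activated per token (from the public model card)


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(gate_logits, k=TOP_K):
    """Return {expert_index: weight} for the k highest-probability experts.

    Weights are renormalized so the selected experts' weights sum to 1.
    """
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    mass = sum(probs[i] for i in top)
    return {i: probs[i] / mass for i in top}


def moe_forward(x, gate_logits, experts, k=TOP_K):
    """Combine the outputs of only the selected experts; the others never run."""
    routing = route_token(gate_logits, k)
    return sum(weight * experts[i](x) for i, weight in routing.items())


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical toy experts: expert s multiplies its scalar input by s.
    experts = [lambda x, s=s: s * x for s in range(NUM_EXPERTS)]
    logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    print("experts used:", sorted(route_token(logits)))
    print("output:", moe_forward(1.0, logits, experts))
    print(f"active share of parameters: {35 / 480:.1%}")
```

Because only the selected experts execute for each token, the active parameter count (about 35B) is a small fraction of the 480B that must still be stored.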

Extensive Training Data

Qwen3 Coder is pretrained on 7.5 trillion tokens, 70% of which is source code spanning 358 programming languages and file formats. Qwen2.5-Coder, an earlier Qwen code model, was used to clean the data, rewrite noisy code examples, and generate high-quality synthetic training data.
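
As a quick worked example, the stated 70% share translates into 5.25 trillion code tokens and 2.25 trillion tokens of other data. Integer arithmetic avoids floating-point rounding; the variable names are illustrative only.

```python
TOTAL_TOKENS = 7_500_000_000_000  # 7.5 trillion pretraining tokens (from the article)
CODE_SHARE_PERCENT = 70           # share of the corpus that is source code

code_tokens = TOTAL_TOKENS * CODE_SHARE_PERCENT // 100
other_tokens = TOTAL_TOKENS - code_tokens

print(f"code tokens:     {code_tokens / 1e12:.2f} trillion")
print(f"non-code tokens: {other_tokens / 1e12:.2f} trillion")
```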

Revolutionary Training Methods

Qwen3 Coder's training includes millions of real code-execution cycles, in which the model is rewarded according to whether its generated code runs and passes automated tests. It also undergoes long-horizon reinforcement learning (RL) across 20,000 parallel environment instances, which teaches multi-step coding workflows, tool use, and iterative debugging.
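
The article does not describe Alibaba's RL infrastructure, so the following is only a toy sketch of the general pattern: many independent environments run episodes concurrently, and each returns a reward that training can use. `run_episode` is a hypothetical stand-in; a real environment would let the model edit files, call tools, and run tests over several steps.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Episode:
    env_id: int
    steps: int
    reward: float


def run_episode(env_id: int, max_steps: int = 10) -> Episode:
    """Hypothetical stand-in for one multi-step coding episode.

    Each step 'succeeds' (tests pass) with a fixed toy probability.
    """
    rng = random.Random(env_id)  # per-environment seed for reproducibility
    for step in range(1, max_steps + 1):
        if rng.random() < 0.3:  # toy chance that the tests now pass
            return Episode(env_id, step, 1.0)
    return Episode(env_id, max_steps, 0.0)


def collect_rollouts(num_envs: int, workers: int = 8) -> list[Episode]:
    """Run episodes concurrently; results come back in environment order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_episode, range(num_envs)))


if __name__ == "__main__":
    episodes = collect_rollouts(num_envs=200)  # the article cites 20,000 in production
    success = sum(e.reward for e in episodes) / len(episodes)
    print(f"{len(episodes)} episodes, success rate {success:.2%}")
```

Threads are enough here because the toy episodes are cheap; rollouts that actually execute generated code would more plausibly run in separate processes or sandboxed containers.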

Use Cases

Agentic Coding

Qwen3 Coder goes beyond code generation: it plans, uses tools, and debugs its own output in multi-step workflows, which lets it carry out complex, real-world development tasks autonomously.
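
The plan, act, and debug cycle can be sketched as a simple loop: generate code, run a test tool, and feed any error back into the next attempt. This is an illustrative sketch only; `scripted_model` is a hypothetical stand-in for a call to Qwen3 Coder, and the single-check `run_tests` tool is an assumption made for brevity.

```python
from typing import Callable, Optional, Tuple


def run_tests(source: str) -> Optional[str]:
    """Tool: run candidate code plus one check; return an error message, or None on success."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # a real agent would run this in a sandbox
        result = namespace["add"](2, 3)
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    if result != 5:
        return f"add(2, 3) returned {result!r}, expected 5"
    return None


def agent_loop(
    generate: Callable[[str, Optional[str]], str],
    task: str,
    max_iters: int = 3,
) -> Tuple[Optional[str], int]:
    """Generate, test, and retry with feedback until the check passes or attempts run out."""
    feedback = None
    for attempt in range(1, max_iters + 1):
        source = generate(task, feedback)  # model drafts or revises code
        feedback = run_tests(source)       # tool call checks the draft
        if feedback is None:
            return source, attempt
    return None, max_iters


def scripted_model(task: str, feedback: Optional[str]) -> str:
    """Hypothetical model stub: the first draft has a bug, the revision fixes it."""
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


if __name__ == "__main__":
    code, attempts = agent_loop(scripted_model, "Write add(a, b).")
    print(f"passed after {attempts} attempt(s):\n{code}")
```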

State-of-the-Art Performance

Qwen3 Coder is reported to achieve state-of-the-art results among open-source models, matching or exceeding leading proprietary models such as GPT-4 on key coding benchmarks, including HumanEval.
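
HumanEval results are usually reported as pass@k, the probability that at least one of k sampled solutions passes a problem's unit tests. The article does not say how its figures were computed; the sketch below implements the standard unbiased estimator introduced alongside HumanEval, pass@k = 1 - C(n - c, k) / C(n, k), where n samples are drawn per problem and c of them pass. The per-problem results in the demo are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples drawn, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Hypothetical per-problem results: (samples drawn, samples passing).
    results = [(10, 10), (10, 7), (10, 0), (10, 3)]
    print(f"pass@1 = {benchmark_pass_at_k(results, 1):.3f}")
    print(f"pass@5 = {benchmark_pass_at_k(results, 5):.3f}")
```

For k = 1 the estimator reduces to c / n, the fraction of passing samples.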

Long-Context Handling

With a native 256K-token context window (expandable to 1M), Qwen3 Coder can take in many files, or even a whole small-to-medium codebase, in a single prompt, enabling repository-level tasks such as refactoring and dependency analysis.
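
Extending the window from 256K to 1M tokens corresponds to a YaRN scaling factor of about 4. The snippet below shows that arithmetic together with a `rope_scaling` entry in the style used by Hugging Face `transformers` model configs; the exact values and key names Qwen3 Coder expects are assumptions here and should be checked against its model card before use.

```python
import json

NATIVE_CONTEXT = 262_144  # "256K" native window, taken as 256 * 1024 tokens
YARN_FACTOR = 4.0         # assumption: 4x scaling to reach roughly 1M tokens

# Assumed transformers-style RoPE scaling entry enabling YaRN.
rope_scaling = {
    "rope_type": "yarn",
    "factor": YARN_FACTOR,
    "original_max_position_embeddings": NATIVE_CONTEXT,
}

extended_context = int(NATIVE_CONTEXT * YARN_FACTOR)

print(json.dumps({"rope_scaling": rope_scaling}, indent=2))
print(f"extended context: {extended_context:,} tokens")
```

A static scaling factor applies to every input, including short ones, so it is generally advisable to enable it only when long contexts are actually needed.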

Polyglot Powerhouse

Qwen3 Coder supports 358 programming languages and file formats, ranging from mainstream languages such as Python, JavaScript, and Java to less common ones such as Haskell and Racket, and even esoteric languages like Brainfuck.

Advanced Reinforcement Learning Training

The model was trained using execution-driven reinforcement learning, rewarding code that not only looks correct but also runs and passes automated tests. This significantly improves the model's ability to produce correct, reliable code.
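
Here is a minimal sketch of an execution-based reward, assuming a simple binary scheme: the candidate code runs together with its tests in a fresh Python process, and the reward is 1.0 only if the process exits cleanly within a time limit. The article does not specify Qwen3 Coder's actual reward design; partial credit per test and proper sandboxing, which a real system would need, are left out.

```python
import subprocess
import sys


def execution_reward(candidate: str, tests: str, timeout: float = 10.0) -> float:
    """Binary reward: 1.0 if the candidate plus its tests run without error, else 0.0.

    Runs in a separate interpreter so crashes in generated code cannot take
    down the caller. This is NOT a security sandbox.
    """
    program = f"{candidate}\n\n{tests}\n"
    try:
        result = subprocess.run(
            [sys.executable, "-c", program],
            capture_output=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return 0.0  # code that hangs earns no reward
    return 1.0 if result.returncode == 0 else 0.0


if __name__ == "__main__":
    tests = "assert square(3) == 9\nassert square(-2) == 4"
    good = "def square(x):\n    return x * x"
    buggy = "def square(x):\n    return x + x"
    print(execution_reward(good, tests), execution_reward(buggy, tests))
```

Code that merely looks plausible, like `buggy` above, earns nothing, which is the property the article credits for more reliable output.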

Open and Accessible

Qwen3 Coder is released under the Apache 2.0 license, permitting both academic and commercial use. This allows companies to freely integrate it into their products and services.

Pricing

Qwen3 Coder's weights can be downloaded at no cost under the Apache 2.0 license. Pricing for hosted API access is not covered here; for current rates, check the official Alibaba Cloud website or contact its sales team.

Vibes

Qwen3 Coder has received positive reviews for its state-of-the-art performance and extensive capabilities. Users appreciate its ability to handle complex coding tasks autonomously and its support for a wide range of programming languages. The model's open-source nature and accessibility have also been highlighted as significant advantages.

Additional Information

Qwen3 Coder marks a significant step beyond earlier code models such as CodeLlama and StarCoder. Rather than only completing code, it works through requirements, plans solutions, executes code, analyzes the results, and iterates. This shift comes with large performance gains; the model reportedly scores around 85% on HumanEval. It is available through cloud APIs, for local deployment, and through integrations with various developer tools, and community-provided quantized versions lower the hardware requirements.
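
A rough weight-memory estimate shows why quantized versions matter for local deployment. With MoE, all 480 billion parameters must be stored even though only about 35 billion are active per token. This sketch counts weights only; KV cache, activations, runtime overhead, and the per-block scale factors that real quantization formats store are ignored, so actual requirements are higher.

```python
TOTAL_PARAMS = 480e9  # every expert must be stored, even though ~35B are active per token


def weight_memory_gb(params: float, bits_per_param: float) -> float:
    """Approximate weight storage in gigabytes (10**9 bytes); weights only."""
    return params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for label, bits in [("BF16", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label:>5}: ~{weight_memory_gb(TOTAL_PARAMS, bits):,.0f} GB")
```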

This content is either user submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources from search engines like DuckDuckGo, Google Search, and SearXNG, and directly from the tool's own website and with minimal to no human editing/review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.
