PureRouter

PureRouter is a developer-first AI router designed to dynamically select the most cost-effective large language model (LLM) without compromising performance. It offers a range of features aimed at optimizing AI workflows, including cost savings, ease of integration, and scalability.

Key Features

Save More, Route Smarter

PureRouter automatically selects the best LLM for each request based on cost, latency, and output quality, potentially cutting inference costs by up to 64% without sacrificing performance.

Developer-First by Design

Integrate PureRouter in minutes with Python, test in a built-in playground, and connect any model without lock-in or headaches.

Built to Scale, Built for You

PureRouter offers transparent billing, smart routing, and upcoming custom model support, ensuring it grows with your stack.

Rethink How You Use LLMs

Whether scaling your AI stack or optimizing a single use case, PureRouter allows for smarter choices without rewriting your codebase.

Why Choose PureRouter?

PureRouter removes the need to choose between cost, speed, and performance: you route based on what you value, all within a single, developer-friendly interface.

Pay Only for What You Use

PureRouter operates on a credit system, ensuring no subscription traps or surprise bills. Pricing is based on usage, making it transparent and straightforward.

Designed for Scalable Multi-Model AI Workflows

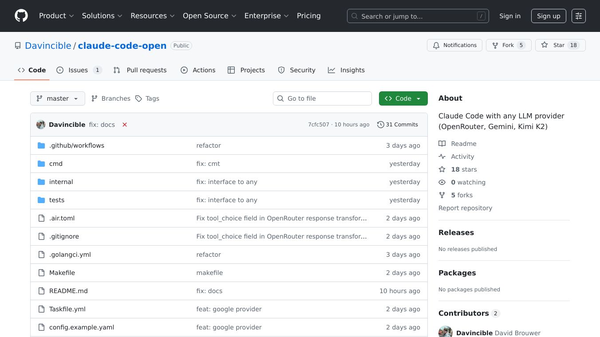

Open Source Model Deployment, Simplified

The streamlined Deployment Setup screen supports rapid integration of open-source LLMs. Future updates will include bring-your-own-model support, enabling direct deployment of custom models into your routing stack.

Multi-Model Workflows

Easily build dynamic AI pipelines by selecting from a growing list of LLMs. Configure routing across multiple models intuitively, balancing cost, latency, and accuracy based on your logic.

Performance That Pays Off

Save between 60% and 64% on AI inference costs without sacrificing performance.

Real-Time Testing, Future-Saving Insights

Quickly test prompts across multiple models and routing modes. Choose between speed, quality, or balance and compare latency and cost instantly. Future updates will include usage-based forecasting and visual comparisons to show savings, including monthly estimates, performance trends, and per-model cost and latency breakdowns.

Several Models and More on the Way

PureRouter supports a wide range of proprietary and open-source models, with more being added regularly.

Get Started in Minutes

No setup overhead. Install with pip, define your routing rules or plug into existing ones, and connect your models. PureRouter is designed for speed in development and production.

Questions? You'll Find the Answers Here

How Does Routing Work?

PureRouter is an AI routing layer that takes your input and automatically sends it to the best available LLM based on your defined logic or default rules. It is built to optimize cost, performance, or both.
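As a rough illustration of rule-based selection, the Python sketch below scores each candidate model on cost, latency, and quality, then picks the best one for a chosen mode (speed, quality, or balance, the same modes offered in the playground). It is not PureRouter's API or its actual routing algorithm: the `Candidate` and `route` names, the mode weights, and every price, latency, and quality figure are hypothetical values chosen only to show the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A hypothetical model entry; all figures are illustrative, not real data."""
    name: str
    cost_per_1k_tokens: float  # illustrative price per 1k tokens
    latency_ms: float          # illustrative typical latency
    quality: float             # illustrative quality score in [0, 1]


# Hypothetical weights per routing mode: (cost, latency, quality), each summing to 1.
MODE_WEIGHTS = {
    "speed": (0.20, 0.70, 0.10),
    "quality": (0.05, 0.05, 0.90),
    "balance": (0.25, 0.15, 0.60),
}


def route(candidates: list[Candidate], mode: str = "balance") -> Candidate:
    """Return the candidate with the highest weighted score for `mode`."""
    if not candidates:
        raise ValueError("no candidate models")
    if mode not in MODE_WEIGHTS:
        raise ValueError(f"unknown routing mode: {mode!r}")
    w_cost, w_latency, w_quality = MODE_WEIGHTS[mode]
    # Guard against division by zero if every cost or latency is 0.
    max_cost = max(c.cost_per_1k_tokens for c in candidates) or 1.0
    max_latency = max(c.latency_ms for c in candidates) or 1.0

    def score(c: Candidate) -> float:
        # Normalize to [0, 1] so that cheaper and faster models score higher.
        cheapness = 1 - c.cost_per_1k_tokens / max_cost
        speed = 1 - c.latency_ms / max_latency
        return w_cost * cheapness + w_latency * speed + w_quality * c.quality

    return max(candidates, key=score)


# Usage with three made-up models.
models = [
    Candidate("small-fast", 0.1, 200, 0.60),
    Candidate("mid-tier", 0.5, 600, 0.80),
    Candidate("large", 2.0, 1500, 0.95),
]
for mode in ("speed", "balance", "quality"):
    print(mode, "->", route(models, mode).name)
```

With these invented numbers the sketch picks small-fast for speed, mid-tier for balance, and large for quality. Adjusting the weights is one simple way to express custom routing logic; a production router would likely also draw on measured latency and current pricing, which this sketch leaves out.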

Can I Use My Own Models?

Not yet, but this feature is on the roadmap. Soon, you will be able to bring and deploy your own model into the router environment.

What Models Are Supported Today?

PureRouter currently supports a wide range of models, including:

- jamba-instruct-en
- jamba-large-en
- jamba-mini-en
- llama3.1-8b
- llama-3.3-70b
- qwen-3-32b
- command-r-plus
- command-r
- command
- command-light
- deepseek-r1-distill-llama-70b
- llama-3.3-70b-versatile
- llama-3.3-70b-specdec
- llama-guard-4-12b
- llama2-70b-4096
- llama3-8b-8192
- llama-3.2-3b-preview
- llama-3.2-11b-text-preview
- llama-3.2-90b-text-preview
- llama3-70b-8192
- llama-3.1-8b-instant
- meta-llama/llama-4-scout-17b-16e-instruct
- meta-llama/llama-4-maverick-17b-128e-instruct
- mistral-saba-24b
- gemma2-9b-it
- moonshotai/kimi-k2-instruct
- Meta-Llama-3.1-8B-Instruct
- Meta-Llama-3.1-405B-Instruct
- Llama-4-Maverick-17B-128E-Instruct
- Meta-Llama-3.3-70B-Instruct
- Qwen3-32B
- DeepSeek-R1-Distill-Llama-70B
- DeepSeek-R1
- DeepSeek-V3-0324
- meta-llama/Meta-Llama-3.1-8B-Instruct-Turbo
- meta-llama/Meta-Llama-3.1-70B-Instruct-Turbo
- meta-llama/Meta-Llama-3.1-405B-Instruct-Turbo
- meta-llama/Llama-3.3-70B-Instruct-Turbo
- meta-llama/Llama-3.3-70B-Instruct-Turbo-Free
- mistralai/Mixtral-8x7B-Instruct-v0.1
- mistralai/Mistral-7B-Instruct-v0.1
- meta-llama/Llama-4-Maverick-17B-128E-Instruct-FP8
- meta-llama/Llama-4-Scout-17B-16E-Instruct
- meta-llama/Llama-3.2-3B-Instruct-Turbo
- Qwen/Qwen2.5-7B-Instruct-Turbo
- Qwen/Qwen2.5-72B-Instruct-Turbo
- deepseek-ai/DeepSeek-V3
- deepseek-ai/DeepSeek-R1
- mistralai/Mistral-Small-24B-Instruct-2501
- moonshotai/Kimi-K2-Instruct
- gpt-4-0613
- gpt-4
- gpt-3.5-turbo
- davinci-002
- babbage-002
- gpt-3.5-turbo-instruct
- gpt-3.5-turbo-instruct-0914
- gpt-4-1106-preview
- gpt-3.5-turbo-1106
- gpt-4-0125-preview
- gpt-4-turbo-preview
- gpt-3.5-turbo-0125
- gpt-4-turbo
- gpt-4-turbo-2024-04-09
- gpt-4o
- gpt-4o-2024-05-13
- gpt-4o-mini-2024-07-18
- gpt-4o-mini
- gpt-4o-2024-08-06
- gpt-4o-2024-11-20
- gpt-4o-search-preview-2025-03-11
- gpt-4o-search-preview
- gpt-4o-mini-search-preview-2025-03-11
- gpt-4o-mini-search-preview
- gpt-4.1-2025-04-14
- gpt-4.1
- gpt-4.1-mini-2025-04-14
- gpt-4.1-mini
- gpt-4.1-nano-2025-04-14
- gpt-4.1-nano
- gpt-3.5-turbo-16k
- claude-opus-4-20250514
- claude-sonnet-4-20250514
- claude-3-7-sonnet-20250219
- claude-3-5-sonnet-20241022
- claude-3-5-haiku-20241022
- claude-3-5-sonnet-20240620
- claude-3-haiku-20240307
- claude-3-opus-20240229
- deepseek-reasoner
- deepseek-chat
- deepseek-r1
- deepseek-v3
- deepseek-coder
- mistral-tiny
- mistral-small
- mistral-small-latest
- mistral-medium
- mistral-medium-latest
- mistral-medium-2505
- mistral-medium-2312
- mistral-large-latest
- mistral-large-2411
- mistral-large-2402
- mistral-large-2407
- pixtral-large-latest
- pixtral-large-2411
- pixtral-12b-2409
- open-mistral-7b
- open-mixtral-8x7b
- open-mixtral-8x22b
- codestral-latest
- codestral-2405
- open-mistral-nemo
- open-mistral-nemo-2407
- devstral-small-2505
- devstral-small-2507
- devstral-medium-2507
- magistral-medium-latest
- magistral-medium-2506
- magistral-small-latest
- magistral-small-2506
- sonar
- sonar-pro
- sonar-reasoning
- sonar-deep-research
- gemini-1.5-flash-latest
- gemini-1.5-flash
- gemini-1.5-flash-002
- gemini-1.5-flash-8b
- gemini-1.5-flash-8b-001
- gemini-1.5-flash-8b-latest
- gemini-2.5-flash-preview-05-20
- gemini-2.5-flash
- gemini-2.5-flash-lite-preview-06-17
- gemini-2.0-flash-exp
- gemini-2.0-flash
- gemini-2.0-flash-001
- gemini-2.0-flash-thinking-exp-01-21
- gemini-2.0-flash-thinking-exp
- gemini-2.0-flash-thinking-exp-1219
- gemma-3-1b-it
- gemma-3-4b-it
- gemma-3-12b-it
- gemma-3-27b-it
- gemma-3n-e4b-it
- gemma-3n-e2b-it
- qwen/qwen3-32b

How Are Credits Calculated?

Credits are PureRouter's internal billing system, designed to keep everything simple and transparent. You can use credits in two main ways:

- Machine time: Each machine (compute unit) has an hourly credit rate. When you allocate a machine, credits are deducted based on how long it stays active.
- Per-inference routing: If you are only routing queries, credits are charged per model call. Each model selection starts at as little as 0.001 credits per inference, depending on the model used and the tokens processed.

During the beta, every new user receives $10 worth of free credits to explore the system. No card is required, and there are no subscriptions or hidden fees, so you can test both long-running deployments and per-query routing without surprises.
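The two billing paths above reduce to simple arithmetic. The following Python sketch totals hypothetical usage: machine time is the hourly rate multiplied by active time (prorated to the second here, which is an assumption, since the exact billing granularity is not stated), and routing is one charge per model call. The machine name, model names, and every rate except the 0.001-credit starting price are invented for illustration, and real per-inference charges also depend on the tokens processed, which this sketch ignores.

```python
from decimal import Decimal

# Hypothetical rate tables for illustration only.
MACHINE_HOURLY_RATE = {"gpu-small": Decimal("2")}  # credits per active hour
PER_CALL_RATE = {
    "small-model": Decimal("0.001"),  # the stated "as little as" price per inference
    "large-model": Decimal("0.02"),   # invented higher rate
}


def machine_credits(machine: str, active_seconds: int) -> Decimal:
    """Hourly rate times active time, prorated to the second (an assumption)."""
    return MACHINE_HOURLY_RATE[machine] * active_seconds / 3600


def routing_credits(calls: list[str]) -> Decimal:
    """One per-call charge for each routed model call (token counts ignored)."""
    return sum((PER_CALL_RATE[model] for model in calls), Decimal("0"))


def total_credits(machine_seconds: dict[str, int], calls: list[str]) -> Decimal:
    """Combine machine time and per-inference routing into one total."""
    machines = sum(
        (machine_credits(m, s) for m, s in machine_seconds.items()), Decimal("0")
    )
    return machines + routing_credits(calls)


# 90 minutes on one machine plus 1,000 small and 50 large routed calls:
# 2 * 1.5 + 1000 * 0.001 + 50 * 0.02 = 3 + 1 + 1 = 5 credits.
calls = ["small-model"] * 1000 + ["large-model"] * 50
print(total_credits({"gpu-small": 90 * 60}, calls))
```

`Decimal` is used instead of `float` so that summing many small per-call charges such as 0.001 does not accumulate binary rounding error.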

Is PureRouter Open-Source?

PureRouter itself is not currently open-source, but a hybrid approach is being considered. The first tool is fully open-source and is designed to integrate smoothly with PureRouter.

How Do I Deploy to Production?

PureRouter is production-ready out of the box. It uses a bring-your-own-key (BYOK) model, meaning you connect your own API keys from providers such as OpenAI, Mistral, Cohere, and others. In the dashboard's Access Keys section, you can:

- add and manage your PureRouter API keys;
- set credit limits and usage restrictions for each key;
- create separate keys for different environments or internal users.

This setup provides full flexibility, usage control, and a straightforward path to integrating PureRouter securely into your production environment.
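Per-key credit limits amount to a budget check before each charge. The Python sketch below models that idea with separate keys per environment. It is a hypothetical illustration, not PureRouter's implementation or API: the `AccessKey` class, the `CreditLimitExceeded` exception, the key names, and the limits are all invented for the example.

```python
from dataclasses import dataclass
from decimal import Decimal


class CreditLimitExceeded(Exception):
    """Raised when a charge would push a key past its credit limit."""


@dataclass
class AccessKey:
    """Hypothetical per-environment key with its own credit budget."""
    name: str
    credit_limit: Decimal
    spent: Decimal = Decimal("0")

    @property
    def remaining(self) -> Decimal:
        return self.credit_limit - self.spent

    def charge(self, credits: Decimal) -> None:
        # Reject the call up front instead of letting the key overspend.
        if credits > self.remaining:
            raise CreditLimitExceeded(
                f"key {self.name!r}: {credits} credits requested, {self.remaining} left"
            )
        self.spent += credits


# Separate keys for production and staging, each with its own limit.
keys = {
    "production": AccessKey("production", Decimal("50")),
    "staging": AccessKey("staging", Decimal("0.005")),
}
for _ in range(5):
    keys["staging"].charge(Decimal("0.001"))  # five calls fit the staging budget
try:
    keys["staging"].charge(Decimal("0.001"))  # a sixth call is refused
except CreditLimitExceeded as err:
    print("blocked:", err)
```

Keeping each environment on its own key means a runaway staging job exhausts only the staging budget, leaving production untouched.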

Be Part of Our Community

Discover what we think and what we are developing. Stay up to date with our latest news, events, and announcements.

© 2025 PureAI

This content is either user submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources from search engines like DuckDuckGo, Google Search, and SearXNG, and directly from the tool's own website and with minimal to no human editing/review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.
