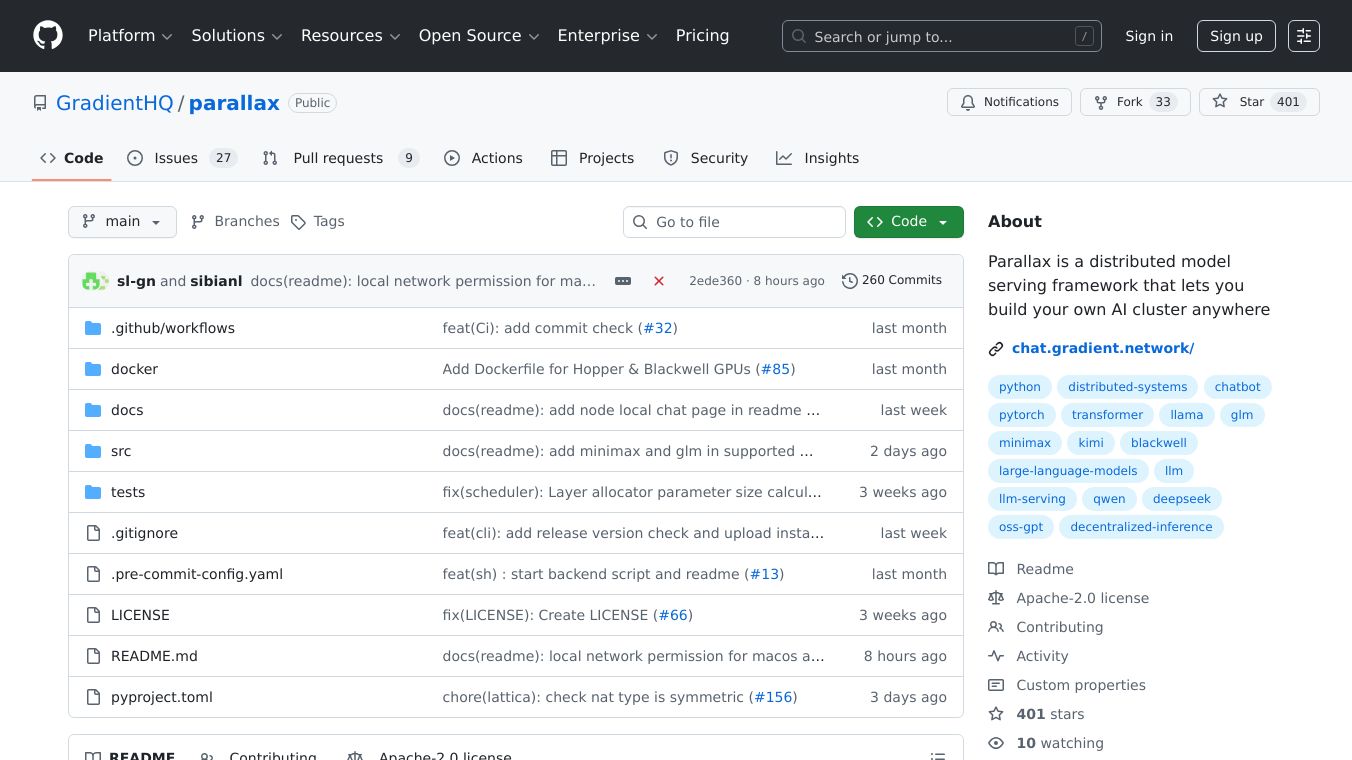

Parallax by Gradient

Parallax by Gradient is a powerful, decentralized framework that allows you to build and manage your own AI cluster. It is designed to be flexible and efficient, enabling you to host large language models (LLMs) on your personal devices. Whether you are a developer, researcher, or AI enthusiast, Parallax provides the tools you need to create a robust AI infrastructure.

Benefits

Parallax offers several key advantages that make it a standout choice for AI model serving:

- Decentralized Inference Engine: Parallax is fully decentralized, allowing you to host and manage AI models across multiple devices. This decentralization ensures high performance and reliability.

- Cross-Platform Support: Parallax supports a wide range of platforms, including Linux, macOS, and Windows. This makes it accessible to users regardless of their operating system.

- Pipeline Parallel Model Sharding: This feature allows you to split large models across multiple devices, enabling efficient use of resources and improving performance.

- Dynamic KV Cache Management: Parallax dynamically manages the key-value cache, ensuring optimal performance and reducing latency.

- Continuous Batching for Mac: This feature is specifically designed for macOS users, providing seamless and efficient batch processing.

- Dynamic Request Scheduling and Routing: Parallax dynamically schedules and routes requests to ensure high performance and efficient resource utilization.

- P2P Communication: The backend architecture includes peer-to-peer communication, which enhances the decentralized nature of the framework.

- GPU and Mac Backend Support: Parallax supports both GPU and Mac backends, providing flexibility and performance for different types of devices.
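To make the Pipeline Parallel Model Sharding item above more concrete: in pipeline parallelism, each device holds a contiguous slice of the model's layers and passes its activations on to the next device. The sketch below is purely illustrative and is not Parallax's actual allocation algorithm. The function name `partition_layers` and the idea of weighting by a per-device capacity number (for example, free memory in GB) are assumptions made for this example.

```python
from typing import List, Tuple


def partition_layers(num_layers: int, capacities: List[float]) -> List[Tuple[int, int]]:
    """Split layers 0..num_layers-1 into contiguous [start, end) ranges,
    one per device, roughly proportional to each device's capacity.

    Hypothetical helper for illustration; not part of Parallax.
    """
    if not capacities:
        raise ValueError("need at least one device")
    if any(c <= 0 for c in capacities):
        raise ValueError("capacities must be positive")
    if num_layers < len(capacities):
        raise ValueError("need at least one layer per device")

    total = sum(capacities)
    bounds = [0]
    running = 0.0
    for i, cap in enumerate(capacities):
        running += cap
        remaining = len(capacities) - i - 1  # devices still to be assigned
        if remaining == 0:
            end = num_layers  # last device takes whatever is left
        else:
            # Ideal cumulative boundary, rounded to a whole layer.
            end = round(num_layers * running / total)
            # Give this device at least one layer...
            end = max(end, bounds[-1] + 1)
            # ...and leave at least one layer for each remaining device.
            end = min(end, num_layers - remaining)
        bounds.append(end)
    return list(zip(bounds[:-1], bounds[1:]))


if __name__ == "__main__":
    # A 32-layer model on two devices with a 3:1 capacity ratio.
    for device, (start, end) in enumerate(partition_layers(32, [24, 8])):
        print(f"device {device}: layers {start}..{end - 1}")
```

With a 32-layer model and capacities of 24 and 8, the first device receives layers 0 to 23 and the second receives layers 24 to 31. A real scheduler would also have to account for factors such as KV cache memory and network latency between nodes, which this sketch ignores.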

Use Cases

Parallax is versatile and can be used in various scenarios:

- Research and Development: Researchers can use Parallax to build and test AI models in a decentralized environment, enabling them to explore new ideas and innovations.

- Personal AI Assistants: Developers can create personalized AI assistants that run on their own devices, ensuring privacy and customization.

- Enterprise Solutions: Businesses can deploy AI models across their infrastructure, leveraging the decentralized nature of Parallax to improve performance and reliability.

- Educational Purposes: Educators and students can use Parallax to learn about AI model serving and decentralized systems, gaining hands-on experience.

Installation

Parallax is easy to install and can be set up in various ways, depending on your needs and preferences. Here are the main installation methods:

- From Source: You can install Parallax from source on Linux/WSL (GPU) and macOS (Apple silicon). The installation process involves cloning the repository and running a few commands.

- Windows Application: For Windows users, Parallax provides an .exe installer. After running the installer, complete the setup by typing `parallax install` in the terminal.

- Docker: Parallax offers Docker images for quick setup on Linux+GPU devices. You can choose the appropriate Docker image based on your device's GPU architecture.

Getting Started

Once installed, you can start using Parallax by following these steps:

- Launch Scheduler: Start the scheduler by typing `parallax run` in the terminal. To make the API accessible from other machines, add the argument `--host 0.0.0.0`.

- Set Cluster and Model Config: Open the configuration interface and select your desired node and model config.

- Connect Your Nodes: Use the command `parallax join` to connect your nodes. For a public network environment, add the scheduler address with the `-s` flag.

- Chat: Once your cluster is set up, you can start chatting with your AI model.
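The way the commands and flags from the steps above combine can be sketched as a small Python helper that only builds argument lists. It uses just the commands and flags named in this article (`parallax run`, `--host 0.0.0.0`, `parallax join`, `-s`). The function names and the `SCHEDULER_ADDRESS` placeholder are assumptions for illustration; the real address format should be taken from the scheduler's own output.

```python
from typing import List, Optional


def scheduler_command(expose_to_network: bool = False) -> List[str]:
    """Build the argument list for launching the scheduler."""
    argv = ["parallax", "run"]
    if expose_to_network:
        # Listen on all interfaces so other machines can reach the API.
        argv += ["--host", "0.0.0.0"]
    return argv


def join_command(scheduler_address: Optional[str] = None) -> List[str]:
    """Build the argument list for connecting a node to the cluster."""
    argv = ["parallax", "join"]
    if scheduler_address is not None:
        # On a public network, the scheduler address is passed with -s.
        argv += ["-s", scheduler_address]
    return argv


if __name__ == "__main__":
    # SCHEDULER_ADDRESS is a placeholder, not a real address.
    print(" ".join(scheduler_command(expose_to_network=True)))
    print(" ".join(join_command("SCHEDULER_ADDRESS")))
```

The returned lists can be passed to `subprocess.run` on a machine where Parallax is installed. Nothing is executed here, so the sketch runs without Parallax present.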

Uninstalling Parallax

Uninstalling Parallax is straightforward. For macOS or Linux, use the command `pip uninstall parallax`. For Docker installations, use standard Docker commands to remove images and containers. For Windows, uninstall via the Control Panel.

Supported Models

Parallax supports a wide range of models, including:

- DeepSeek: Advanced large language models from DeepSeek AI, designed for powerful natural language understanding and generation.

- MiniMax-M2: A compact, fast, and cost-effective MoE model built for advanced coding and agentic workflows.

- GLM-4.6: Improves upon GLM-4.5 with a longer context window, stronger coding and reasoning performance, and enhanced tool-use and agent integration.

- Kimi-K2: Moonshot AI's model family designed for agentic intelligence, available in different versions and parameter sizes.

- Qwen: A family of large language models developed by Alibaba's Qwen team, available in various sizes and instruction-tuned versions.

- gpt-oss: OpenAI's open-weight GPT models, including gpt-oss-20b and gpt-oss-120b.

- Meta Llama 3: Meta's third-generation Llama model, available in sizes such as 8B and 70B parameters, including instruction-tuned and quantized variants.

