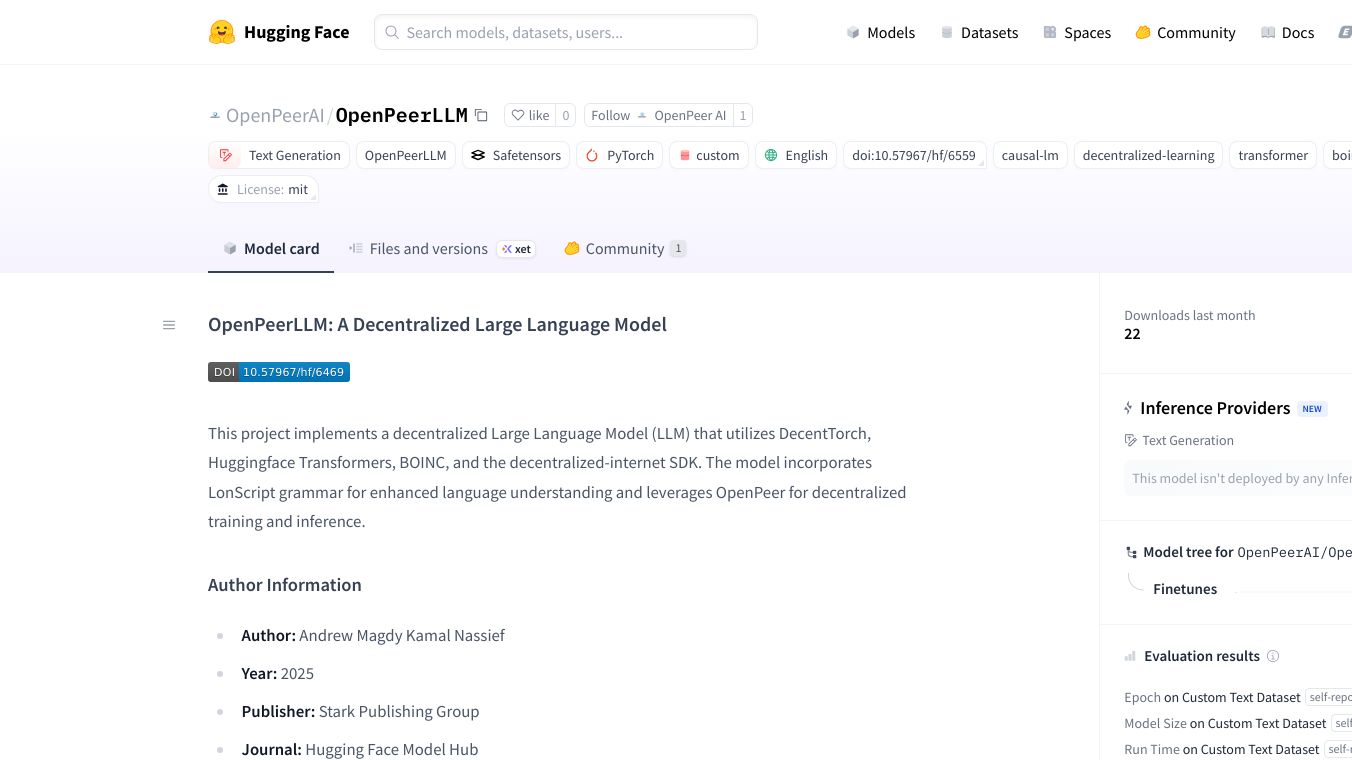

OpenPeerLLM

OpenPeerLLM: A Scalable Contextual LLM for Text Generation

OpenPeerLLM is a language model designed for grid-based learning and contextual text generation. It is the first non-fine-tuned base model in the OpenPeerAI family, a project that aims to bring decentralized, grid-based verifiable computing to machine learning.

Benefits

OpenPeerLLM offers several key advantages:

- Decentralized Learning: By using a peer network for distributed computing, OpenPeerLLM aims for efficient and scalable processing.

- Contextual Text Generation: The model is built to generate coherent, contextually relevant text for a range of applications.

- Scalability: Designed to handle large-scale data, OpenPeerLLM can be extended with more data and adjusted weights to improve its capabilities.

Use Cases

OpenPeerLLM can be applied in a variety of scenarios:

- Content Creation: Generate contextually relevant text for articles, reports, and more.

- Data Analysis: Process and analyze large datasets efficiently.

- Research and Development: Contribute to the advancement of machine learning and decentralized computing.

How to Download OpenPeerLLM

You can download the OpenPeerLLM model directly via HuggingFace or through Kaggle. Below is a sample code snippet to download the model using Kaggle:

```python
import os
from pathlib import Path

import kagglehub

print("Setting up model directories...")
model_dir = Path("models/openpeerllm/checkpoints")
model_dir.mkdir(parents=True, exist_ok=True)

print("Downloading the model...")
try:
    # Download model using kagglehub
    path = kagglehub.model_download("openpeer-ai/openpeerllm/pyTorch/default")
    print(f"Model downloaded successfully at: {path}")

    # Print directory contents
    print("Checking downloaded content:")
    if os.path.exists(path):
        print("Contents of downloaded model path:")
        for item in os.listdir(path):
            print(f"- {item}")
    else:
        print("Model path not found")
except Exception as e:
    print(f"Error during model setup: {e}")
```

This code downloads the model files so that you can use 'best-model.pt' as a base checkpoint. The model was trained on a limited dataset, so you can extend it by importing more data, adjusting weights, and tuning parameters to improve its capabilities.
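Once the download finishes, the checkpoint still has to be located inside the downloaded directory, whose layout is not documented here. The following is a minimal sketch, assuming only that the file is named 'best-model.pt' as mentioned above; the helper `find_checkpoint` and the example directory are hypothetical and not part of OpenPeerLLM or kagglehub.

```python
from pathlib import Path
from typing import Optional


def find_checkpoint(root: str, name: str = "best-model.pt") -> Optional[Path]:
    """Return the first file called `name` under `root`, or None if absent."""
    root_path = Path(root)
    if not root_path.is_dir():
        return None
    # rglob searches the directory tree recursively; sorting makes the
    # choice deterministic if several copies exist.
    matches = sorted(p for p in root_path.rglob(name) if p.is_file())
    return matches[0] if matches else None


if __name__ == "__main__":
    # Hypothetical location; in practice pass the `path` value returned by
    # kagglehub.model_download(...) in the download snippet.
    checkpoint = find_checkpoint("models/openpeerllm/checkpoints")
    if checkpoint is None:
        print("best-model.pt not found")
    else:
        print(f"Found checkpoint: {checkpoint}")
```

The returned path can then be handed to whatever loader matches the checkpoint format. Since the file has a `.pt` extension, PyTorch's `torch.load` is a plausible choice, but the internal structure of the checkpoint is not described in this article, so treat that as an assumption to verify against the OpenPeerAI repository.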

Next Steps for OpenPeerLLM

The OpenPeerAI team has created a repository for further development and community contributions. They encourage users to explore the model, contribute to its improvement, and share findings with the community.

Additional Information

OpenPeerLLM is part of the OpenPeerAI project, which aims to introduce decentralized, grid-based verifiable computing to scalable machine learning, LLMs, and reindexing. For more information, visit the OpenPeerAI repository on HuggingFace.
