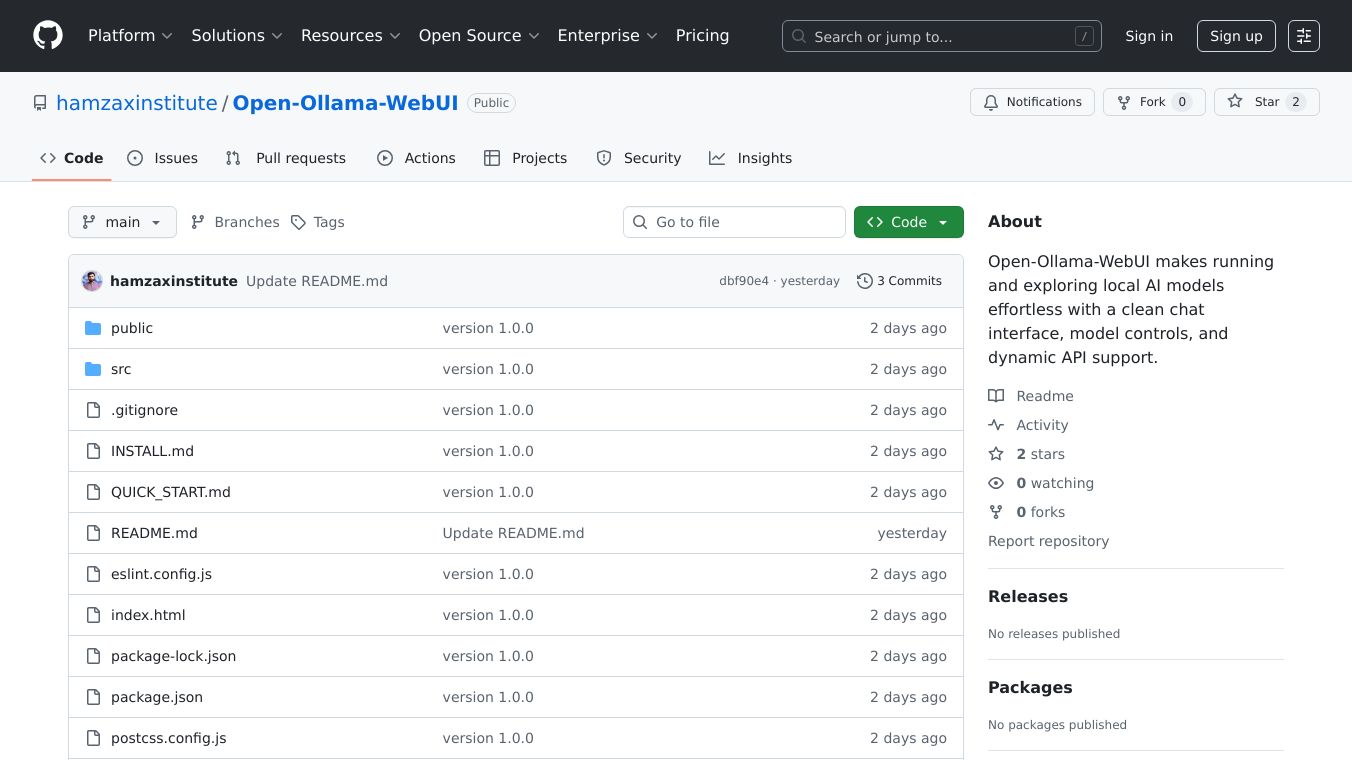

Open-ollama-webui

Open-ollama-webui: A Powerful AI Interface for Everyone

Open-ollama-webui is a user-friendly interface that brings the power of advanced AI models to your fingertips. It combines the capabilities of Ollama, which runs AI models locally on your machine, with the intuitive design of OpenWebUI. This combination offers privacy, control, and cost savings compared to cloud-based AI services.

Benefits

- Privacy and Control: Run AI models locally on your machine, ensuring your data stays private.

- Cost-Effective: Avoid subscription costs associated with cloud-based AI services.

- User-Friendly Interface: Enjoy an intuitive chat interface similar to ChatGPT, making it easy for anyone to use.

- Model Flexibility: Switch between different AI models or connect to OpenAI for a versatile experience.

- Enhanced Conversations: Upload files and use Retrieval-Augmented Generation (RAG) to get answers based on your own data.

- Customization: Add tools like web search, image generation, or data analysis to enhance your AI interactions.

- Tool Integration: Connect your AI to external tools and data sources like Figma, Obsidian, Gmail, and Blender through natural language.

Use Cases

- Developers: Build custom AI solutions with ease.

- General Users: Enjoy a ChatGPT-like experience without subscription costs.

- Data Analysis: Use AI to analyze and interpret data from your own files.

- Creative Projects: Generate images, design 3D models, and manage emails through natural language commands.

Prerequisites for Ollama Nvidia GPU Setup

- WSL2 Linux and Docker with Portainer: Ensure these are set up before proceeding.

- Nvidia CUDA Toolkit 12.5: Install this for WSL2 or the corresponding version for your Ubuntu distribution.

- Nvidia Container Toolkit: Required for CUDA support in Docker.

- Docker Configuration: Configure Docker to use the Nvidia driver.

- Nvidia-SMI: Add this tool to your PATH for GPU status information.
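Before moving on, it can help to confirm that the command-line tools from the list above are actually reachable. The following is a minimal sketch, assuming the executables are named `docker` and `nvidia-smi` on your system; the helper names `find_tools` and `gpu_summary` are hypothetical and exist only for this illustration.

```python
import shutil
import subprocess

# Executable names are assumptions based on the prerequisite list above.
REQUIRED_TOOLS = ["docker", "nvidia-smi"]


def find_tools(names):
    """Map each executable name to its resolved path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


def gpu_summary():
    """Return the GPU list printed by `nvidia-smi -L`, or None if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None
    try:
        result = subprocess.run(
            ["nvidia-smi", "-L"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    return result.stdout.strip() if result.returncode == 0 else None


if __name__ == "__main__":
    for name, path in find_tools(REQUIRED_TOOLS).items():
        print(f"{name}: {path or 'NOT FOUND on PATH'}")
    print(gpu_summary() or "No GPU information available")
```

This only checks that the tools can be found and that the driver responds. It does not verify that Docker itself is configured to use the Nvidia runtime.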

Configure OpenAI Integration in OpenWebUI

- Access OpenWebUI: Create a login account and navigate to the admin settings.

- Add OpenAI Connection: Enter the base URL (https://api.openai.com/v1) and your API key.

- Test the Connection: Verify the connection and test the API by starting a new chat and selecting an OpenAI model.
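If the connection fails inside OpenWebUI, it may be useful to check the base URL and API key outside the interface first. The sketch below assumes the key is stored in an `OPENAI_API_KEY` environment variable (an assumption, not something OpenWebUI requires) and lists models through the `/models` endpoint under the base URL. The function names are hypothetical.

```python
import json
import os
import urllib.request


def build_models_request(base_url, api_key):
    """Build a GET request for the /models endpoint under base_url."""
    url = base_url.rstrip("/") + "/models"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"})


def list_model_ids(base_url, api_key, timeout=10):
    """Send the request and return the model ids; raises on HTTP or network errors."""
    request = build_models_request(base_url, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        payload = json.load(resp)
    return [model["id"] for model in payload.get("data", [])]


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")  # assumed variable name
    if not key:
        print("Set OPENAI_API_KEY to run the check")
    else:
        try:
            print(list_model_ids("https://api.openai.com/v1", key)[:5])
        except (OSError, ValueError) as exc:
            print(f"Connection check failed: {exc}")
```

A successful call suggests the same base URL and key should also work when entered in the admin settings.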

Configure Ollama (If Using Local AI)

- Manage Ollama API Connections: Confirm that the Ollama connection matches the one configured as an environment variable in the Docker container setup.

- Test Ollama: Start a new chat, select a model like llama3.1, and run a test prompt to ensure everything works.
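To confirm the Ollama connection independently of the interface, one option is to query Ollama's HTTP API for its installed models. This sketch assumes the environment variable is named `OLLAMA_BASE_URL` and that Ollama listens on its default port 11434 when the variable is unset; adjust both to match your container setup. The helper names are hypothetical.

```python
import json
import os
import urllib.request


def ollama_base_url(env=os.environ):
    """Read the Ollama URL from OLLAMA_BASE_URL, falling back to the default local port."""
    return env.get("OLLAMA_BASE_URL", "http://localhost:11434").rstrip("/")


def parse_model_names(tags_payload):
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [model["name"] for model in tags_payload.get("models", [])]


def installed_models(base_url, timeout=5):
    """Ask the Ollama server which models are already pulled."""
    with urllib.request.urlopen(base_url + "/api/tags", timeout=timeout) as resp:
        return parse_model_names(json.load(resp))


if __name__ == "__main__":
    url = ollama_base_url()
    try:
        print(url, installed_models(url))
    except (OSError, ValueError) as exc:
        print(f"Could not reach Ollama at {url}: {exc}")
```

An empty list means the server is reachable but no model has been pulled yet.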

Test Ollama

- Start a New Chat: Use the new chat button to begin a session.

- Select a Model: Choose a model from the dropdown menu and pull it if necessary.

- Run a Test Prompt: Ensure the model works by running a test prompt.
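The same test can be run without the chat interface by sending a prompt straight to Ollama's `/api/generate` endpoint. This is a minimal sketch, assuming Ollama is reachable at `http://localhost:11434` and that the `llama3.1` model has already been pulled; the function names and the example prompt are hypothetical.

```python
import json
import urllib.request


def build_generate_request(base_url, model, prompt):
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/generate",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_prompt(base_url, model, prompt, timeout=120):
    """Send the prompt and return the generated text from the JSON reply."""
    request = build_generate_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    try:
        # Assumed local address and model name; change them to match your setup.
        print(run_prompt("http://localhost:11434", "llama3.1", "Say hello in one sentence."))
    except (OSError, ValueError, KeyError) as exc:
        print(f"Test prompt failed: {exc}")
```

The generous timeout is deliberate: the first request after a model is selected can be slow while the model loads into memory.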

Additional Information

Open-ollama-webui is designed to be versatile and user-friendly, making advanced AI capabilities accessible to everyone. Whether you're a developer looking to build custom solutions or a general user seeking a cost-effective AI experience, Open-ollama-webui offers a powerful and intuitive interface to meet your needs.

