OLMo 2 32B

OLMo 2 32B is a new model in the OLMo series from the Allen Institute for Artificial Intelligence (AI2). It is fully open source: its training data, code, weights, and training details are all available for anyone to use and build upon. This sets it apart from many other AI models, whose developers do not disclose how they were built.

Benefits

OLMo 2 32B has 32 billion parameters. It matches or outperforms models such as GPT-3.5 Turbo and GPT-4o mini on a range of benchmark tests. This suggests that open source AI models can be competitive with closed source ones, and in some cases ahead of them.

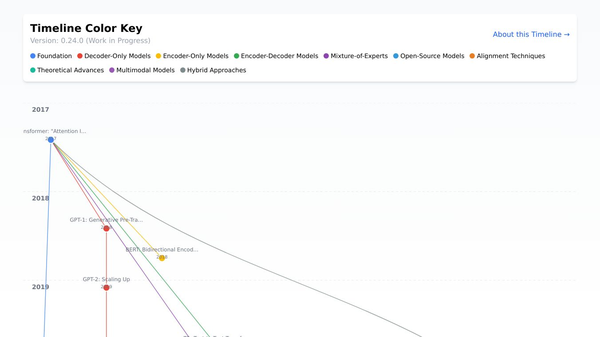

The model was trained in two main stages: pre-training and mid-training. During pre-training, it learned from a dataset of about 3.9 trillion tokens drawn from a variety of sources, which gave it broad general coverage. Mid-training then focused on Dolmino, a high-quality dataset of educational, mathematical, and academic content, to strengthen the model's performance in those areas.

Use Cases

OLMo 2 32B suits tasks that require strong language understanding and reasoning about complex topics. Examples include educational tools, research projects, and other applications that need a capable general-purpose language model. Because the model is fully open, it can also be inspected, fine-tuned, and extended for these purposes.
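
Anyone planning to run the model for these use cases first has to fit its weights in memory. The following is a rough back-of-the-envelope sketch, not an official sizing guide: it takes the "32 billion parameters" figure from this article at face value and multiplies it by the storage size of a few common numeric formats. The function names and the choice of formats are illustrative assumptions, and the estimate covers weights only, ignoring activations, the KV cache, and framework overhead.

```python
# Rough estimate of weight memory for a 32-billion-parameter model.
# Assumption: exactly 32e9 parameters (the article's rounded figure);
# the true parameter count may differ slightly.
PARAMS = 32e9

# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {
    "float32": 4,
    "bfloat16": 2,
    "int8": 1,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def estimate_all(num_params: float = PARAMS) -> dict[str, float]:
    """Estimate weight memory for every format in BYTES_PER_PARAM."""
    return {
        name: weight_memory_gb(num_params, size)
        for name, size in BYTES_PER_PARAM.items()
    }


if __name__ == "__main__":
    for name, gb in estimate_all().items():
        print(f"{name:>8}: ~{gb:.0f} GB")
```

Under these assumptions, the weights alone need roughly 64 GB in 16-bit precision, which is more than a typical single consumer GPU provides, so multi-GPU setups or quantized formats are usually worth considering.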

Vibes

The AI community has greeted OLMo 2 32B with excitement. Many see it as a significant step forward for open source AI, because it shows that open models can compete with closed ones. That challenges a common assumption about how leading AI systems have to be developed.

Additional Information

The release of OLMo 2 32B is a notable step toward making AI more open and accessible. By publishing the full details and making the model open source, AI2 is encouraging researchers and developers around the world to study it, build on it, and work together to improve it.
