Ollama LLM Throughput Benchmark

Meet the Ollama LLM Throughput Benchmark tool from aidatatools.com. It measures the performance of local large language models (LLMs) and vision language models (VLMs) served by Ollama, reporting throughput in tokens per second.

Key Features

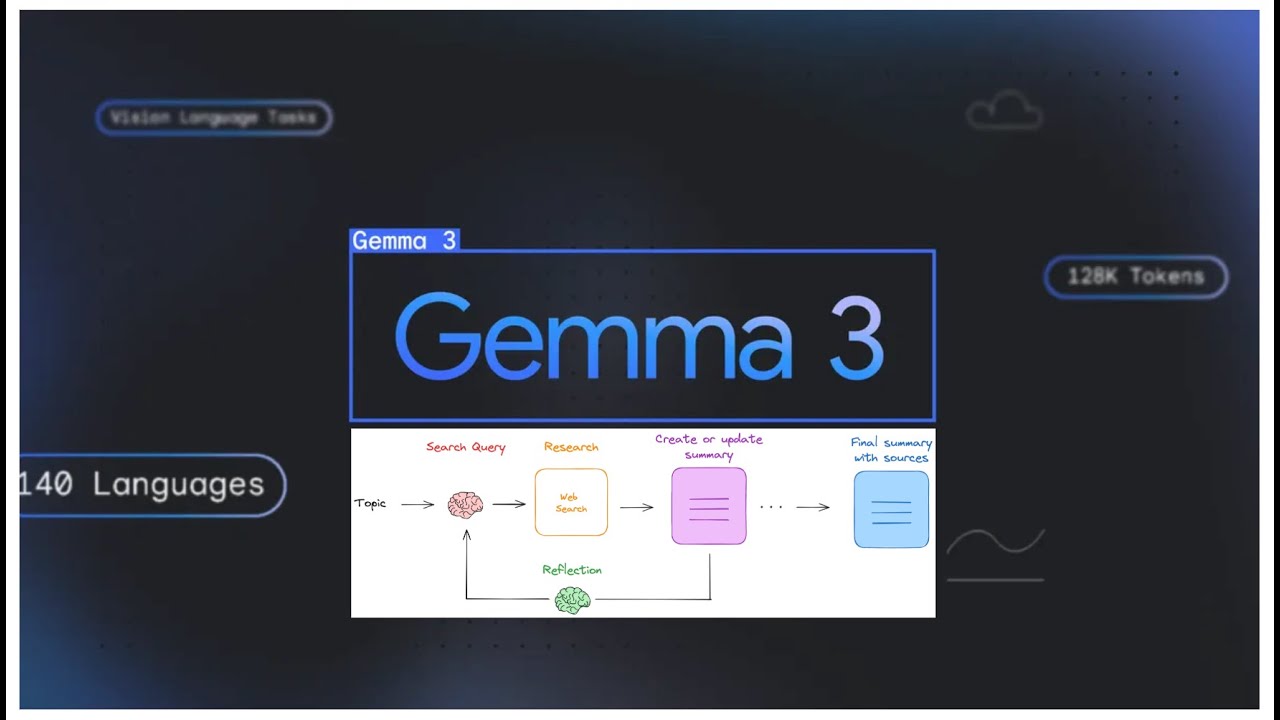

Ollama supports many models and systems. The benchmark tests LLMs such as Mistral 7B, Llama 2 7B, and Llama 2 13B, and VLMs such as LLaVA 7B and LLaVA 13B. It runs on macOS, Linux, and Windows (via WSL2), so it fits a range of setups.

A standout feature is its ability to measure the throughput, in tokens per second, of Ollama LLMs on different systems. This shows how quickly each model generates text and provides a basis for comparing overall performance.
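A tokens-per-second figure of this kind can be computed by hand from Ollama's REST API. The sketch below is not the benchmark tool's own code. It assumes a local Ollama server on the default port 11434 and uses the `eval_count` (generated tokens) and `eval_duration` (generation time in nanoseconds) fields that Ollama's `/api/generate` endpoint returns for a non-streaming request. The helper names `generate`, `tokens_per_second`, and `mean_throughput` are hypothetical.

```python
import json
import urllib.request

# Default endpoint of a locally running Ollama server (assumption: default port).
OLLAMA_URL = "http://localhost:11434/api/generate"


def generate(model: str, prompt: str, url: str = OLLAMA_URL) -> dict:
    """Send one non-streaming generate request and return the parsed JSON reply."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    req = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def tokens_per_second(response: dict) -> float:
    """Generation throughput: generated tokens divided by generation time in seconds."""
    count = response["eval_count"]           # number of tokens generated
    duration_ns = response["eval_duration"]  # time spent generating, in nanoseconds
    if duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    return count / (duration_ns / 1e9)


def mean_throughput(responses: list[dict]) -> float:
    """Average tokens/s over several runs of the same model."""
    if not responses:
        raise ValueError("need at least one response")
    rates = [tokens_per_second(r) for r in responses]
    return sum(rates) / len(rates)
```

For example, calling `generate("mistral:7b", "Why is the sky blue?")` three times and passing the replies to `mean_throughput` gives an average generation rate for that model on the current machine. Averaging several runs smooths out run-to-run variation.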

Benefits

Ollama offers users several benefits. It processes data locally, which improves data security and privacy, an important consideration for anyone handling sensitive information. Ollama is also open source, which makes it cost-effective and customizable.

The tool works on a variety of systems, including low-cost options such as the Raspberry Pi 5, which makes it accessible to many users. Whether you are a professional or an enthusiast, Ollama lets you run LLMs and VLMs on your preferred hardware.

Use Cases

Ollama suits data engineers who need to run generative AI models locally, as well as organizations that prioritize data privacy and security. The benchmark's ability to measure performance across systems also makes it useful for optimizing how AI models are deployed.

For those comparing models, the benchmark provides insight into how different LLMs and VLMs perform, helping users choose the models that best fit their needs.

Cost/Price

The cost of using Ollama depends on the hardware and models used. For example, running a 7B model requires at least 8 GB of RAM, while a 13B model requires 16 GB. An Apple Mac mini M1 or a cloud VM with a GPU performs well but may cost more.
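The RAM guideline above can be written as a small lookup when planning hardware. This is a hypothetical helper, not part of Ollama or the benchmark tool, and it encodes only the two rough minimums stated here (8 GB for 7B models, 16 GB for 13B models).

```python
# Hypothetical helper: encodes only the rough minimum-RAM guideline from the text.
MIN_RAM_GB = {"7B": 8, "13B": 16}


def sizes_that_fit(ram_gb: float) -> list[str]:
    """Return the model sizes whose minimum RAM requirement is met, smallest first."""
    by_need = sorted(MIN_RAM_GB.items(), key=lambda item: item[1])
    return [size for size, need in by_need if ram_gb >= need]
```

Under this guideline, a machine with 8 GB of RAM would be limited to 7B models, while one with 16 GB could run both sizes.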

Funding

No funding details are given for the Ollama LLM Throughput Benchmark tool.

Reviews/Testimonials

Users find the tool valuable for benchmarking local LLMs and VLMs, and they appreciate Ollama's open-source nature and compatibility with various systems. However, although the Raspberry Pi 5 is cost-effective, its slow speed may make it impractical for LLM tasks. The Apple Mac mini M1 and cloud VMs with GPUs deliver better performance.
