MiniCPM 4.0

MiniCPM 4.0 is a family of open-source AI models built to run on smartphones and other edge devices. The models are designed for tasks that need fast processing without heavy power consumption. The family has two main models: the 8B Lightning Sparse Edition and the 0.5B version. Both are built to be fast and practical, and both offer significant gains in speed and efficiency.

Benefits

MiniCPM 4.0's main advantage is speed. In some cases it can process information up to 220 times faster, and in regular use about five times faster. These gains come from techniques that switch between processing methods depending on the length of the text, so long texts are handled quickly and efficiently. The models also need less storage, because they are designed to be small but powerful. The 0.5B version achieves double the performance at half the parameter size, with a much lower training cost. The 8B sparse version matches or surpasses similar models, making it a leader in on-device AI.
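The idea of switching processing methods by text length can be illustrated with a minimal sketch. This is not MiniCPM 4.0's actual implementation: the function `plan_attention`, the `AttentionPlan` type, the 8192-token cutoff, and the 2048-token budget are all hypothetical values chosen for illustration, since the article does not say where or how the switch happens.

```python
from dataclasses import dataclass

# Hypothetical cutoff: the article does not state where the switch happens.
LONG_TEXT_THRESHOLD = 8192


@dataclass(frozen=True)
class AttentionPlan:
    mode: str             # "dense" or "sparse"
    tokens_attended: int  # how many earlier tokens each new token looks at


def plan_attention(num_tokens: int,
                   threshold: int = LONG_TEXT_THRESHOLD,
                   sparse_budget: int = 2048) -> AttentionPlan:
    """Pick a processing mode from the input length alone (illustrative only)."""
    if num_tokens < 0:
        raise ValueError("num_tokens must be non-negative")
    if num_tokens <= threshold:
        # Short input: looking at every earlier token is cheap enough.
        return AttentionPlan("dense", num_tokens)
    # Long input: cap the work per token at a fixed budget.
    return AttentionPlan("sparse", min(sparse_budget, num_tokens))


if __name__ == "__main__":
    for n in (512, 8192, 131072):
        print(n, plan_attention(n))
```

In this sketch, the cost per token stops growing once the input passes the cutoff, which is one way a model could keep long texts fast while leaving short texts on the simpler path.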

Use Cases

MiniCPM 4.0 can be used in many real-world applications. Its efficiency and speed suit tasks that require quick processing, such as generating surveys and using specialized tools. The models have been adapted to mainstream chips from Intel, Qualcomm, MediaTek (MTK), and Huawei (Ascend), and they have been deployed on multiple open-source frameworks, which makes them versatile and widely applicable. The team behind MiniCPM 4.0 has also built an ecosystem around the models that includes highly compressed versions, specialized agent models, and an efficient CUDA inference framework.

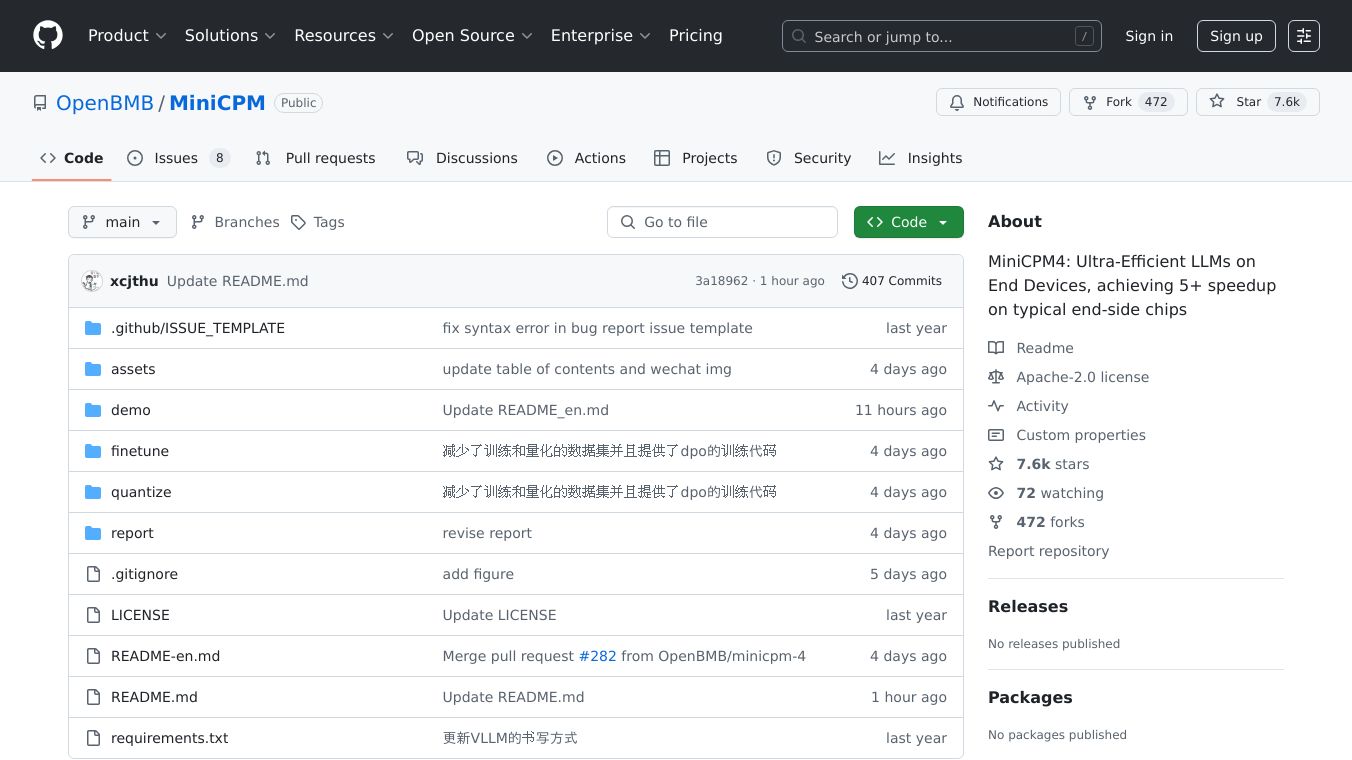

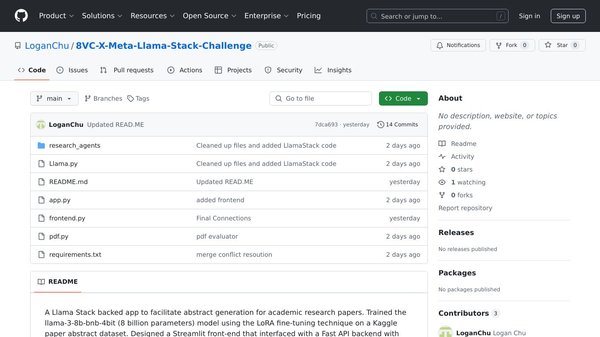

For more information, see the model collection at https://www.modelscope.cn/collections/MiniCPM-4-ec015560e8c84d and the GitHub repository at https://github.com/openbmb/minicpm.
