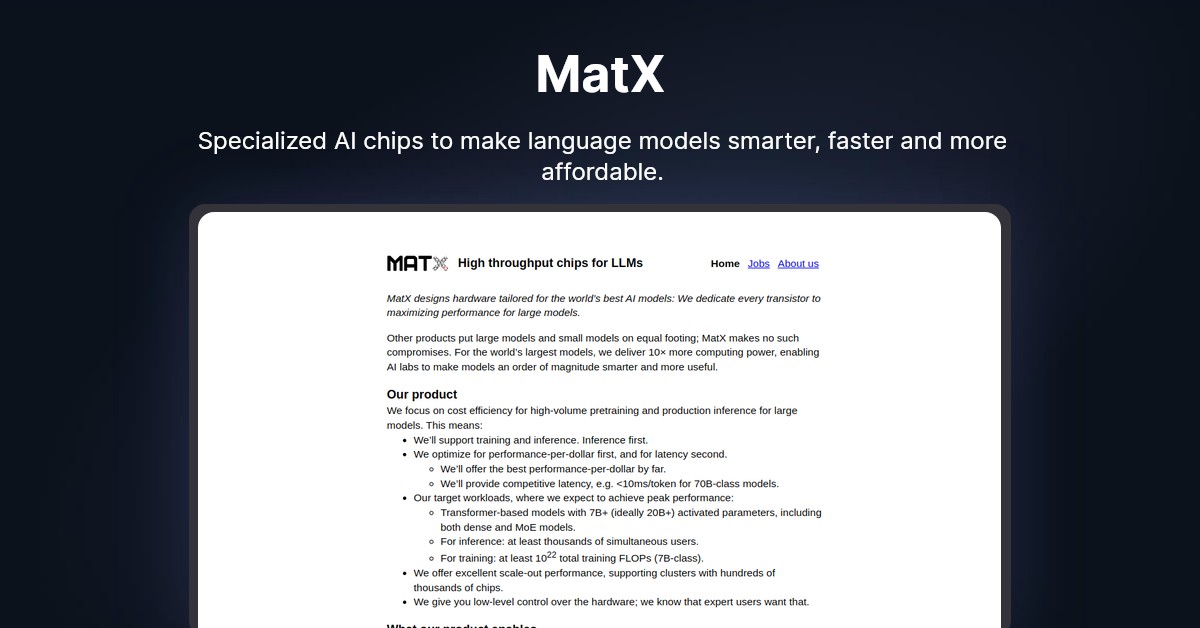

MatX

MatX is at the forefront of AI hardware innovation, building specialized chips for Large Language Models (LLMs) with the long-term aim of supporting Artificial General Intelligence (AGI). With a focus on making AI faster, better, and more affordable, MatX has developed hardware tailored specifically to LLMs, in contrast to traditional GPUs, which serve a broader range of machine learning models. This narrower focus allows both the hardware and the software to be simpler and more efficient.

The team behind MatX comprises industry experts with extensive experience in ASIC design, compilers, and high-performance software development, including key contributors to innovative technologies at Google. This wealth of expertise, combined with strong support from leading LLM companies and substantial investments from top-tier entities, positions MatX as a formidable player in the AI field.

MatX chips are engineered for LLMs, offering high throughput and a stated tenfold increase in computing power for large AI models. This added capability is intended to let AI labs develop models that are significantly smarter and more useful. The primary focus is cost efficiency for high-volume pretraining and production inference on large models: the chips are optimized for performance-per-dollar first, while keeping latency competitive.

Highlights:

- Specialized chips for LLMs

- Tenfold increase in computing power for large models

- Cost-efficient pretraining and inference

- Industry-leading experts in AI hardware

- Strong support from leading LLM companies

Key Features:

- Unmatched performance-per-dollar

- Competitive latency

- Optimal scale-out performance

- Low-level hardware control

- Support for both training and inference

Benefits:

- Reduced cost of AI model training and inference

- Fast response times for AI applications

- Facilitation of large-scale AI projects

- Customizable hardware optimizations

- Accelerated AI model development

Use Cases:

- Accelerated AI model development

- Startup innovation

- Large-scale AI projects

- Support for AI researchers

- Resource-efficient AI for large enterprises
