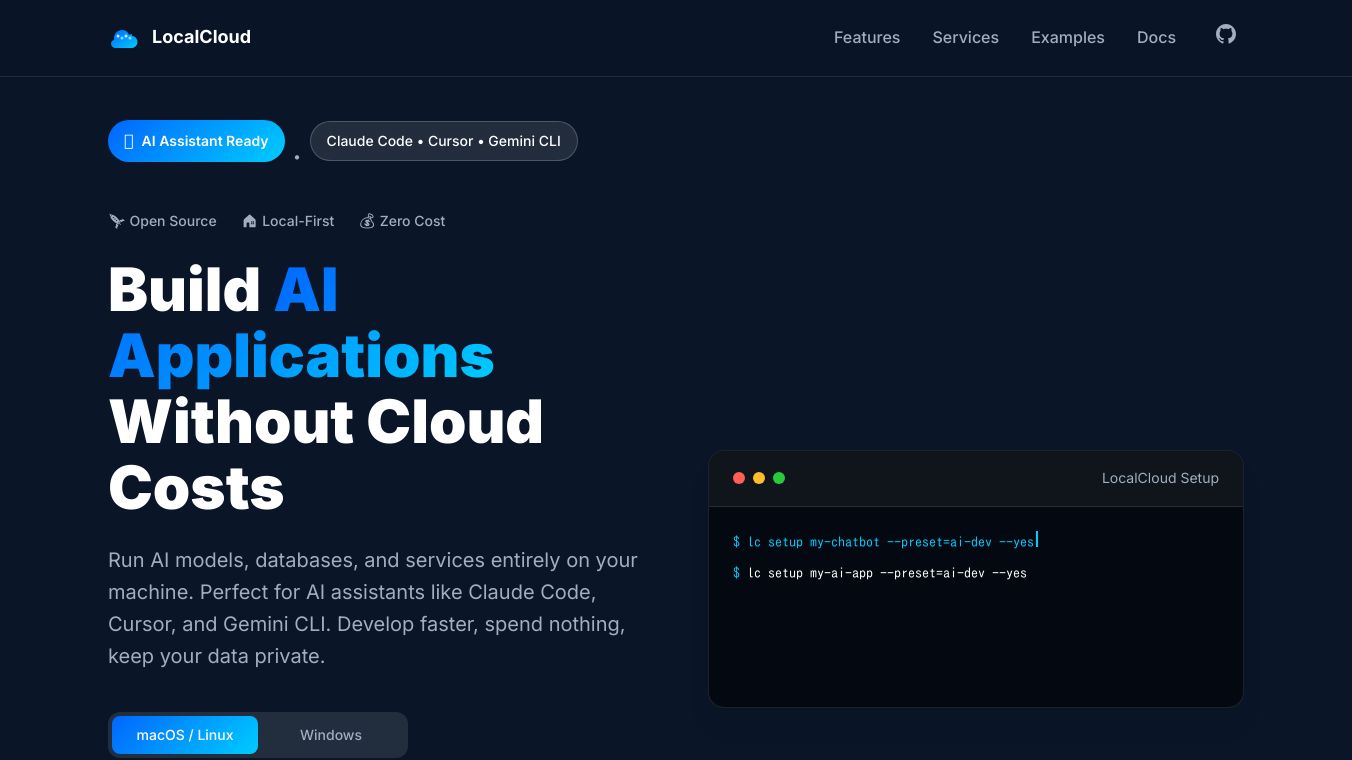

LocalCloud: Complete AI Dev Platform

LocalCloud is an open-source, local-first platform designed to streamline the development of AI applications without incurring cloud costs. It allows developers to run AI models, databases, and services entirely on their machines, ensuring data privacy and eliminating infrastructure complexity. LocalCloud is particularly well-suited for AI assistants like Claude Code, Cursor, and Gemini CLI, enabling faster development cycles and seamless integration with existing codebases.

Benefits

LocalCloud offers several key advantages for developers:

- AI Assistant Ready: Non-interactive setup perfect for AI assistants like Claude Code, Cursor, and Gemini CLI.

- Zero Cloud Costs: Everything runs locally, eliminating API fees and usage limits.

- Complete Privacy: Your data never leaves your machine.

- Pre-built Templates: Production-ready backends for common AI use cases.

- Optimized Models: Carefully selected models that run on 4GB RAM.

- Developer Friendly: Simple CLI, clear errors, and extensible architecture.

- Docker-Based: Consistent environment across all platforms.

- Mobile Ready: Built-in tunnel support for demos anywhere.

- Export Tools: One-command migration to any cloud provider.

Use Cases

LocalCloud is versatile and caters to various development needs:

- Bootstrapped Startups: Build MVPs with zero infrastructure costs during early development.

- Privacy-First Enterprises: Run open-source AI models locally, keeping data in-house.

- Corporate Developers: Skip IT approval queues and get PostgreSQL and Redis running immediately.

- Demo Heroes: Tunnel your app to any device and present it instantly, from your iPhone to a client's iPad.

- Remote Teams: Share running environments with frontend developers without deployment hassles.

- Students & Learners: Master databases and AI without complex setup or cloud accounts.

- Testing Pipelines: Integrate AI and databases in CI without external dependencies.

- Prototype Speed: Spin up full-stack environments faster than booting a VM.

Installation

LocalCloud can be installed on macOS, Linux, and Windows with a single command:

For macOS/Linux:

    $ curl -fsSL https://localcloud.sh/install | bash

For Windows (PowerShell):

    PS> iwr -useb https://localcloud.sh/install.ps1 | iex

Setup

After installation, you can set up a project with a simple command:

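    lc setup my-app --preset=ai-dev --yes

This command creates a project. When run interactively, setup opens a wizard to configure services, select AI models, and set up ports and resources. The `--preset` and `--yes` flags shown here presumably preselect a configuration and skip the prompts, which matches the non-interactive setup listed under Benefits.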
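As a rough illustration of how a script or an AI assistant might drive the setup command above, the sketch below assembles the argument list and runs it only if the CLI is installed. It uses only the subcommand and flags shown in this article; the helper names `build_setup_command` and `run_setup` are hypothetical and not part of LocalCloud.

```python
import shutil
import subprocess


def build_setup_command(project_name, preset="ai-dev", assume_yes=True):
    """Return the argv list for `lc setup`, using only the flags shown above."""
    if not project_name or project_name.startswith("-"):
        raise ValueError("project name must be non-empty and not look like a flag")
    command = ["lc", "setup", project_name, f"--preset={preset}"]
    if assume_yes:
        # Assumption: --yes accepts defaults so that no prompt blocks the caller.
        command.append("--yes")
    return command


def run_setup(project_name, preset="ai-dev"):
    """Run the setup command if `lc` is on PATH; return its exit code, else None."""
    if shutil.which("lc") is None:
        return None  # LocalCloud CLI not installed; nothing to run
    completed = subprocess.run(build_setup_command(project_name, preset), check=False)
    return completed.returncode


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch is safe to try.
    print(" ".join(build_setup_command("my-app")))
```

Passing the arguments as a list rather than a shell string avoids quoting problems when the project name comes from user input.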

Services

LocalCloud provides a range of services that can be configured individually:

- AI/LLM: Ollama with selected models (default port: 11434)

- Database: PostgreSQL (optional pgvector extension) (default port: 5432)

- Document-oriented NoSQL database: MongoDB (default port: 27017)

- Cache: Redis for performance (default port: 6379)

- Queue: Redis for job processing (default port: 6380)

- Storage: MinIO (S3-compatible) (default ports: 9000/9001)
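To check which of these services are reachable after setup, a small TCP probe against the default ports can help. This is a minimal sketch using only the Python standard library. The port numbers come from the list above, while the host `127.0.0.1` and the helper names are assumptions. A successful connection only shows that something is listening on the port, not that it is the expected service.

```python
import socket

# Default ports as listed above; adjust if you changed them during setup.
DEFAULT_PORTS = {
    "ollama": [11434],
    "postgresql": [5432],
    "mongodb": [27017],
    "redis (cache)": [6379],
    "redis (queue)": [6380],
    "minio": [9000, 9001],
}


def is_port_open(host, port, timeout=0.5):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def probe_services(host="127.0.0.1", ports=DEFAULT_PORTS):
    """Map each service name to {port: reachable} for the given host."""
    return {
        name: {port: is_port_open(host, port) for port in service_ports}
        for name, service_ports in ports.items()
    }


if __name__ == "__main__":
    for name, results in probe_services().items():
        for port, reachable in results.items():
            status = "reachable" if reachable else "not reachable"
            print(f"{name:15} port {port:<6} {status}")
```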

Future Enhancements

LocalCloud is continuously evolving with plans to introduce features like:

- Multi-Language SDKs

- Web Admin Panel

- Model Fine-tuning

- Team Collaboration

- Performance Optimization

- Enterprise Features

- Project Isolation

- Plugin System

- Alternative AI Providers

- Cloud Sync

- Mobile Development

- Kubernetes Integration

- IDE Extensions

Community and Contributions

LocalCloud welcomes contributions and feedback. Developers can engage through GitHub Discussions, GitHub Issues, or email. The project is licensed under Apache 2.0 and is built on a foundation of amazing open-source projects and communities.

For more detailed information, visit the LocalCloud GitHub repository.
