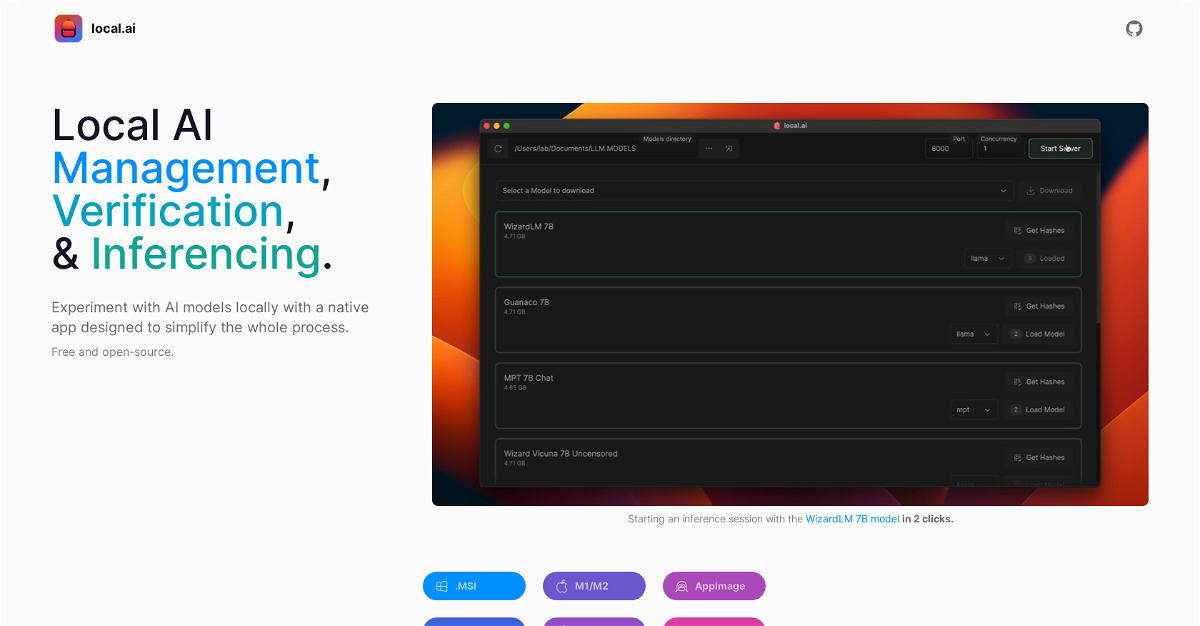

Local.ai

Local.ai is a free and open-source native app for managing, verifying, and experimenting with AI models, all within the privacy of your own device. It runs inference on the CPU, so no dedicated GPU is required, which keeps it accessible on ordinary hardware.

Highlights

- Effortless AI Experimentation: Perform AI experiments without the need for technical setup or a GPU.

- Powerful Native App: A fast native app built with a Rust backend, which keeps it memory-efficient and compact.

- Centralized Model Management: Keep all your AI models organized in one convenient location.

- Robust Verification: Ensure the integrity of your downloaded models with a comprehensive digest verification feature.
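As a rough illustration of what digest verification involves, here is a minimal Python sketch using the standard `hashlib` module. It is not Local.ai's own code (the app's backend is Rust); the function names `file_digests` and `matches_sha256` and the 1 MiB chunk size are assumptions made for this example. It streams a model file through BLAKE2b and SHA256 so that large files do not need to fit in memory, then compares the result against a published digest.

```python
import hashlib


def file_digests(path, chunk_size=1 << 20):
    """Return (blake2b_hex, sha256_hex) for a file, reading it in chunks.

    Hypothetical helper for illustration; chunk_size (1 MiB) is an arbitrary choice.
    """
    blake = hashlib.blake2b()
    sha = hashlib.sha256()
    with open(path, "rb") as f:
        # Feed both hashers from a single pass over the file.
        while chunk := f.read(chunk_size):
            blake.update(chunk)
            sha.update(chunk)
    return blake.hexdigest(), sha.hexdigest()


def matches_sha256(path, expected_hex):
    """Check a file against a published SHA256 digest (case-insensitive)."""
    _, sha = file_digests(path)
    return sha == expected_hex.strip().lower()
```

A caller would pass the path of a downloaded model and the digest published alongside it, for example `matches_sha256("model.bin", published_digest)`, where both arguments are placeholders.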

Key Features

- CPU Inferencing: Perform AI inference on your computer's CPU, making it adaptable to various computing environments.

- GGML Quantization: Choose from multiple quantization options (Q4_0, Q5_0, Q8_0, and F16) to trade model size and speed against accuracy.

- Resumable Downloader: Pause and resume model downloads, and run several downloads concurrently.

- Directory Agnostic: Organize your models freely; Local.ai supports any directory structure.

- Inferencing Server: Easily start a local streaming server for AI inferencing with just a few clicks.

- Model Integrity: Verify the integrity of your models using Blake2b and SHA256 digests.

- Open Source: Contribute to the development of Local.ai and access the source code freely.
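To give a feel for what the quantization options above mean in practice, the following Python sketch estimates the storage cost per weight for each format. The block sizes are assumptions based on the commonly documented GGML layouts (32 weights per block, each block carrying a 16-bit scale), not figures taken from Local.ai. The estimate ignores file metadata and any tensors stored at a different precision, so treat it as a ballpark.

```python
# Assumed bytes per block of 32 weights in GGML's block layouts.
# Q4_0: 2-byte scale + 16 bytes of 4-bit values
# Q5_0: 2-byte scale + 4 bytes of high bits + 16 bytes of low 4-bit values
# Q8_0: 2-byte scale + 32 bytes of 8-bit values
# F16 is not block-quantized; 2 bytes per weight is expressed per 32 weights here.
BLOCK_WEIGHTS = 32
BLOCK_BYTES = {"Q4_0": 18, "Q5_0": 22, "Q8_0": 34, "F16": 64}


def bits_per_weight(fmt):
    """Average number of bits stored per weight, including the block scale."""
    return BLOCK_BYTES[fmt] * 8 / BLOCK_WEIGHTS


def estimated_size_gib(n_params, fmt):
    """Approximate size of the weights in GiB for a model with n_params parameters."""
    return n_params * BLOCK_BYTES[fmt] / BLOCK_WEIGHTS / 2**30


if __name__ == "__main__":
    # Example: a hypothetical 7-billion-parameter model.
    for fmt in BLOCK_BYTES:
        print(f"{fmt}: {bits_per_weight(fmt)} bits/weight, "
              f"about {estimated_size_gib(7e9, fmt):.1f} GiB")
```

Under these assumptions Q4_0 costs 4.5 bits per weight against 16 for F16, which is why a quantized model can fit in the RAM of a machine without a GPU.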
