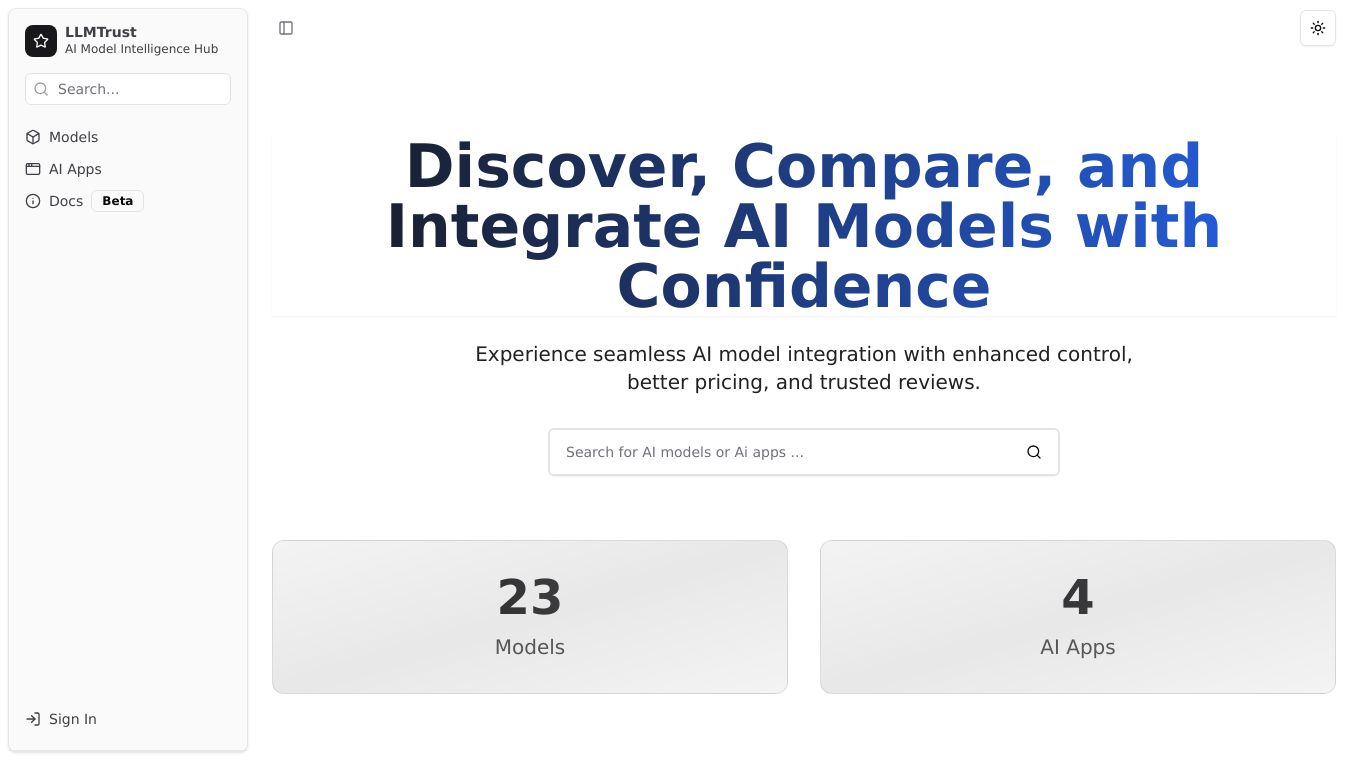

LLMTrust

LLMTrust is a project for evaluating and improving the trustworthiness of Large Language Models (LLMs). As models like ChatGPT become widely used, verifying that they are reliable matters more and more.

LLMTrust assesses these models along eight dimensions of trustworthiness to give a comprehensive review.

Key Features

LLMTrust evaluates LLMs on eight dimensions of trustworthiness:

- Truthfulness: whether the model gives accurate, factual information.

- Safety: whether it avoids harmful or unsafe answers.

- Fairness: whether it is fair and treats everyone equally.

- Robustness: whether it performs well across different situations.

- Privacy: whether it protects sensitive and personal information.

- Machine Ethics: whether it follows ethical principles.

- Transparency: whether it is open about how the model works and makes decisions.

- Accountability: whether responsibility is taken for what the model does.

Benefits

LLMTrust evaluates 16 popular LLMs on over 30 datasets. The models include both proprietary and openly available ones, which gives a broad view of how trustworthy they are.
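As a rough illustration of what comparing several models across the eight dimensions could look like, here is a minimal Python sketch. It is not LLMTrust's actual code or scoring method: the `summarize` function, the 0-to-1 score scale, the unweighted mean, and the model names `model_a` and `model_b` are all assumptions made for this example, and the numbers are placeholders rather than real results.

```python
from statistics import mean

# The eight dimensions named in this article.
DIMENSIONS = [
    "truthfulness", "safety", "fairness", "robustness",
    "privacy", "machine_ethics", "transparency", "accountability",
]


def summarize(scores):
    """Return (model, mean score) pairs sorted best-first.

    `scores` maps a model name to a dict of dimension -> score in [0, 1].
    Hypothetical helper: an unweighted mean is only one possible way to
    aggregate, chosen here for simplicity.
    """
    summary = []
    for model, per_dim in scores.items():
        missing = [d for d in DIMENSIONS if d not in per_dim]
        if missing:
            raise ValueError(f"{model} is missing scores for: {missing}")
        summary.append((model, mean(per_dim[d] for d in DIMENSIONS)))
    return sorted(summary, key=lambda pair: pair[1], reverse=True)


# Placeholder numbers for illustration only; these are not real results.
example_scores = {
    "model_a": {d: 0.5 for d in DIMENSIONS},
    "model_b": {d: 0.75 for d in DIMENSIONS},
}
ranking = summarize(example_scores)
```

A single averaged number hides trade-offs between dimensions, so a real comparison would more likely report each dimension separately.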

Use Cases

- Proprietary LLMs: These models often outperform open-source ones on trustworthiness. Examples are GPT-4 and ERNIE, which do well at classification and language understanding.

- Open-Source LLMs: Models like Llama2 do well on certain tasks, which shows that open-source models can be competitive too.

Cost/Price

The article does not talk about the cost or price of LLMTrust.

Funding

The article does not talk about how LLMTrust is funded.

Reviews/Testimonials

Commentators note that although models like GPT-4 and Llama2 struggle with some common-sense tasks, they still do well in other areas. Open-source models are less safe than proprietary ones but show promise on certain tasks. More research and collaboration are needed to make LLMs better and more ethical.
