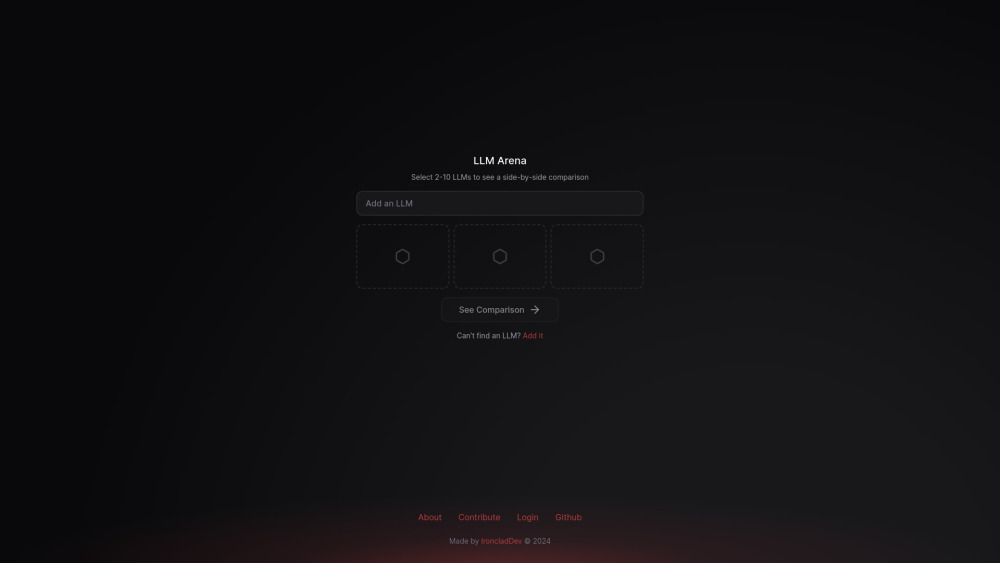

LLM Arena

LLM Arena is a tool for evaluating and comparing large language models. It puts different LLMs on a level playing field, so users can compare their responses side by side. Originally conceived by Amjad Masad, CEO of Replit, LLM Arena was developed over six months as an accessible platform for side-by-side LLM comparison. The platform is open to the community: users can contribute new models and take part in evaluations.

Highlights:

- Open-source platform for comparing LLMs

- Crowdsourced evaluation system

- Elo rating system to rank models

- Community contribution model

Key Features:

- Side-by-side LLM comparison

- Crowdsourced evaluation

- Elo rating system

- Open contribution model
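The page does not say exactly how LLM Arena turns crowdsourced votes into Elo ratings. The sketch below shows the standard Elo update applied to pairwise votes. It assumes a K-factor of 32, a starting rating of 1000, and vote scores of 1.0 (first model wins), 0.0 (second model wins) or 0.5 (tie). The model names in the usage example are hypothetical.

```python
from collections import defaultdict

K_FACTOR = 32         # assumed step size; not taken from LLM Arena
INITIAL_RATING = 1000.0  # assumed starting rating for every model


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update(ratings, model_a, model_b, score_a, k=K_FACTOR):
    """Apply one pairwise vote; score_a is 1.0 (A wins), 0.0 (B wins) or 0.5 (tie)."""
    ra, rb = ratings[model_a], ratings[model_b]
    ea = expected_score(ra, rb)
    # A gains exactly what B loses, so the total rating pool is preserved.
    ratings[model_a] = ra + k * (score_a - ea)
    ratings[model_b] = rb + k * ((1.0 - score_a) - (1.0 - ea))


def rank(votes, k=K_FACTOR, initial=INITIAL_RATING):
    """Replay (model_a, model_b, score_a) votes in order and return a leaderboard."""
    ratings = defaultdict(lambda: initial)
    for model_a, model_b, score_a in votes:
        update(ratings, model_a, model_b, score_a, k)
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    votes = [
        ("model-x", "model-y", 1.0),  # a user preferred model-x
        ("model-y", "model-z", 0.5),  # a tie
        ("model-z", "model-x", 0.0),  # a user preferred model-x again
    ]
    for name, rating in rank(votes):
        print(f"{name}: {rating:.1f}")
```

Sequential Elo updates depend on the order in which votes arrive. A batch alternative is to fit a Bradley-Terry model to all votes at once, which gives order-independent scores.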

Benefits:

- Standardized platform for LLM evaluation

- Community participation and contribution

- Real-world testing scenarios

- Transparent model comparison

Use Cases:

- AI research benchmarking

- LLM selection for applications

- Educational tool

- Product comparison
