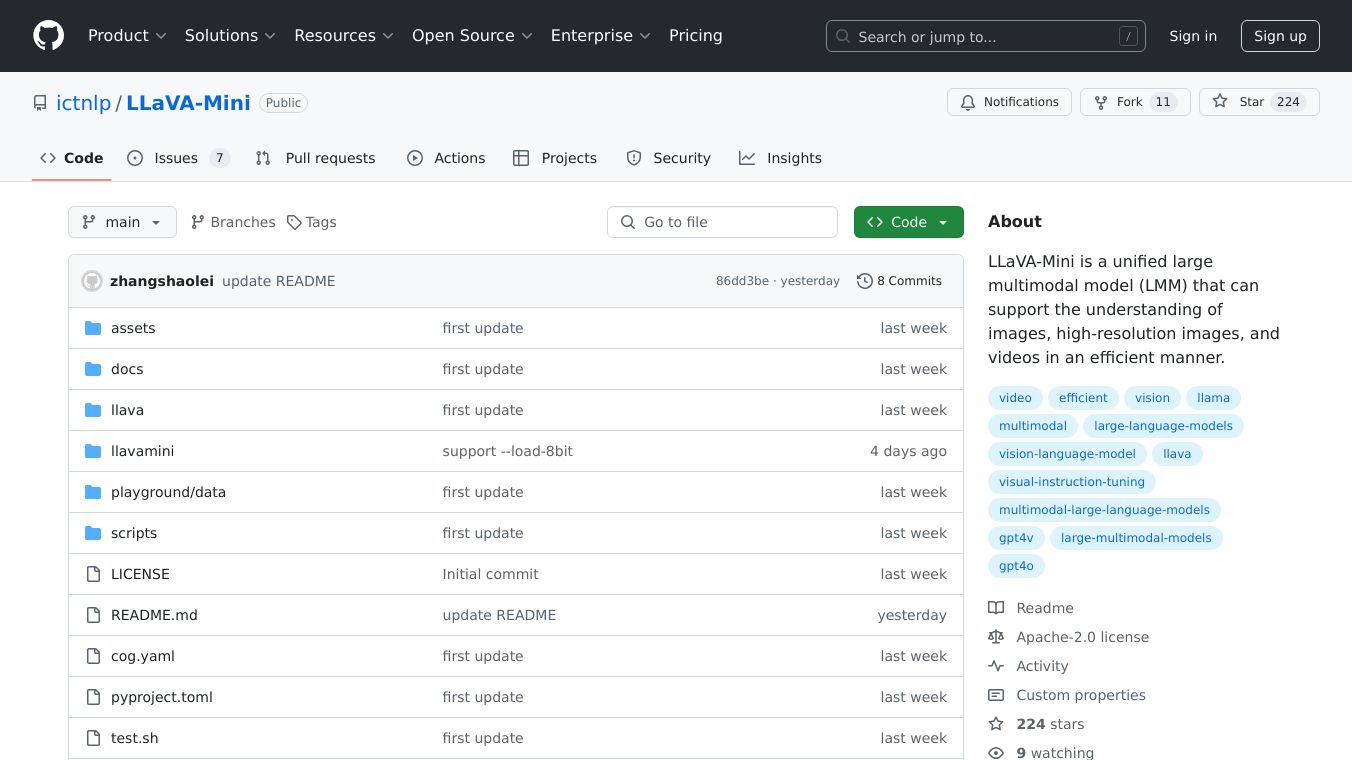

LLaVA-Mini

LLaVA-Mini is a large multimodal model (LMM) that handles standard images, high-resolution images, and videos efficiently.

Its defining trait is that it represents each image with just one vision token.

This makes it much faster and lighter than models that spend hundreds of vision tokens per image, such as LLaVA-v1.5, while performing comparably.

Key Features

LLaVA-Mini stands out for its efficient architecture.

It compresses vision tokens to cut computational overhead and inference latency.

The model uses a technique called modality prefusion, which fuses visual information into the text tokens in advance, before the language model processes them.

Because the text tokens already carry the visual information, the vision tokens can then be compressed to the extreme with little loss in performance.
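To make the idea of collapsing many vision tokens into one more concrete, here is a minimal sketch in plain Python. It assumes the compression works like attention pooling with a single query vector, which this article does not confirm. The names `compress_to_one_token`, `DIM`, and the random inputs are hypothetical and chosen for illustration. The prefusion step itself is not modeled.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def compress_to_one_token(vision_tokens, query):
    """Attention-pool N vision tokens into one token using a single query.

    vision_tokens: list of N vectors, each a list of d floats
    query: one vector of d floats (learned in a real model, random here)
    """
    d = len(query)
    # Scaled dot-product score between the query and every vision token.
    scores = [sum(q * t for q, t in zip(query, tok)) / math.sqrt(d)
              for tok in vision_tokens]
    weights = softmax(scores)
    # The weighted average of all vision tokens is the single compressed token.
    return [sum(w * tok[i] for w, tok in zip(weights, vision_tokens))
            for i in range(d)]

random.seed(0)
NUM_VISION_TOKENS = 576  # per-image token count quoted for LLaVA-v1.5
DIM = 8                  # toy hidden size, an assumption for illustration

vision_tokens = [[random.gauss(0, 1) for _ in range(DIM)]
                 for _ in range(NUM_VISION_TOKENS)]
query = [random.gauss(0, 1) for _ in range(DIM)]

compressed = compress_to_one_token(vision_tokens, query)
print(len(vision_tokens), "tokens ->", 1, "token of size", len(compressed))
```

Whatever the real mechanism is, the language model then only has to attend over one vision token per image instead of hundreds, which is where the savings in compute and memory come from.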

The headline result is that LLaVA-Mini matches the capabilities of LLaVA-v1.5 while using only one vision token per image instead of 576.

That corresponds to a compression rate of about 0.17% (1 of 576 tokens).
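The 0.17% figure follows directly from the two token counts quoted above. A short check, with constant names chosen here for illustration:

```python
VISION_TOKENS_LLAVA_V15 = 576   # vision tokens per image, as quoted above
VISION_TOKENS_LLAVA_MINI = 1    # vision tokens per image, as quoted above

# Compression rate = tokens kept / tokens originally used.
compression_rate = VISION_TOKENS_LLAVA_MINI / VISION_TOKENS_LLAVA_V15

print(f"compression rate: {compression_rate:.2%}")  # prints "compression rate: 0.17%"
```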

Benefits

The efficiency gains of LLaVA-Mini are significant.

It reduces computation (FLOPs) by 77% and delivers low-latency responses within 40 milliseconds.

This makes it ideal for real-time applications.
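As a rough way to read these two numbers, the arithmetic below converts them into a remaining-compute fraction and a response rate. It is a back-of-the-envelope sketch only: it assumes the 40 ms figure applies to every response and that responses are handled one at a time. A reduction in computation does not translate one-to-one into wall-clock speedup.

```python
FLOPS_REDUCTION = 0.77  # fraction of computation removed, as quoted above
LATENCY_MS = 40         # response latency bound in milliseconds, as quoted above

# Share of the baseline's computation that is still required.
remaining_fraction = 1 - FLOPS_REDUCTION
# How many times more computation the baseline needs by comparison.
flops_ratio = 1 / remaining_fraction
# Responses per second if each takes the full 40 ms and they run sequentially.
sequential_responses_per_second = 1000 / LATENCY_MS

print(f"remaining computation: {remaining_fraction:.0%}")
print(f"baseline needs about {flops_ratio:.1f}x the computation")
print(f"sequential responses per second: {sequential_responses_per_second:.0f}")
```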

Additionally, it requires only 0.6 MB of VRAM per image, allowing it to process over 10,000 frames of video on a GPU with 24 GB of memory.

This memory efficiency is what makes high-resolution images and long videos practical to handle.
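The memory claim can be sanity-checked with simple arithmetic. The sketch below assumes 1 GB = 1024 MB and counts only the per-frame cost quoted above. How the rest of the GPU memory is divided among model weights, activations, and other overhead is not stated in this article.

```python
MB_PER_IMAGE = 0.6   # VRAM per image or frame, as quoted above
FRAMES = 10_000      # number of video frames, as quoted above
GPU_MEMORY_GB = 24   # GPU memory, as quoted above
MB_PER_GB = 1024     # assumption: binary units

# Memory taken by the per-frame cost of all frames together.
frames_memory_gb = MB_PER_IMAGE * FRAMES / MB_PER_GB
# What is left for everything else (weights, activations, overhead).
headroom_gb = GPU_MEMORY_GB - frames_memory_gb

print(f"frames: {frames_memory_gb:.2f} GB, headroom: {headroom_gb:.2f} GB")
```

Under these assumptions the frames take roughly a quarter of the 24 GB card, which is consistent with the claim that more than 10,000 frames fit.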

Use Cases

LLaVA-Mini has proven its capabilities across various benchmarks.

It performs comparably to LLaVA-v1.5 on 11 image-based benchmarks, including VQA-v2, GQA, and MMBench.

For video-based tasks, it outperforms existing video LMMs on 7 benchmarks, showcasing its ability to handle long videos and complex video understanding tasks.

Looking ahead, there are plans to optimize the modality prefusion module to enhance performance and reduce latency further.

There is also interest in extending LLaVA-Mini to other modalities like audio or 3D point clouds.

Additionally, researchers are exploring quantization and other model compression techniques to deploy LLaVA-Mini on mobile and edge devices, making it accessible in resource-constrained environments.

