LiteLLM

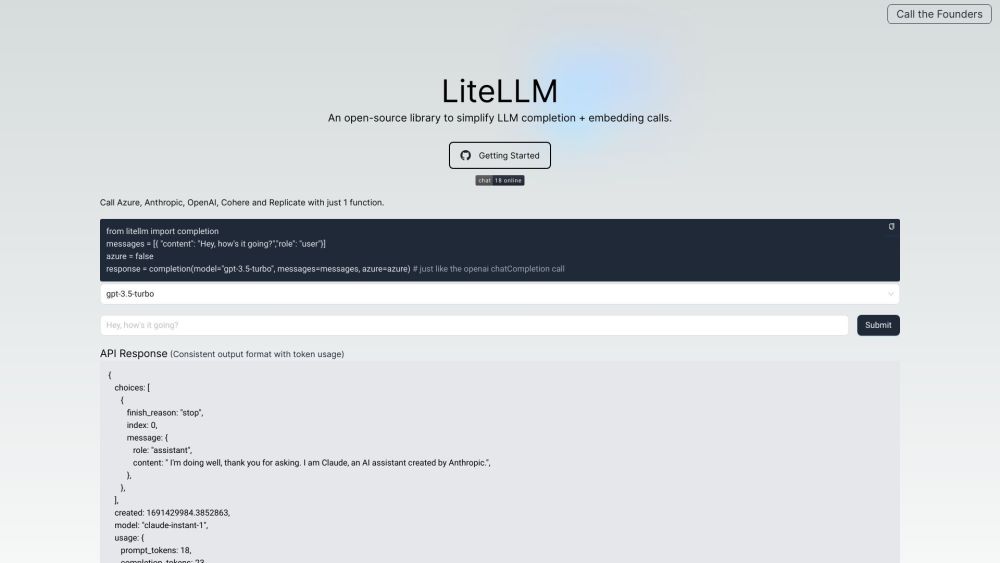

LiteLLM is an open-source tool that simplifies integrating and managing large language models (LLMs) in AI applications. It provides a single interface to models from multiple providers, including OpenAI, Azure, Anthropic, and Cohere, among many others. By hiding the differences between provider APIs, it lets developers call any supported model in a consistent, OpenAI-compatible format. LiteLLM is available both as a Python library for direct integration and as a proxy server that handles authentication, load balancing, and spend tracking across multiple LLM services.

In short, LiteLLM is a unified API and proxy server for more than 100 LLMs from providers such as OpenAI, Azure, and Anthropic. Its features include authentication management, load balancing, spend tracking, and error handling, all exposed through the same OpenAI-compatible format, so developers can switch between or combine providers without changing their calling code.
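As a rough illustration of the "consistent OpenAI-compatible format" described above, the sketch below builds the same chat-style request for two providers and changes only the model string. The helper names `build_request` and `ask` are hypothetical, and the model identifiers are illustrative assumptions. It also assumes the `litellm` package is installed and that provider API keys are set in environment variables before `ask` is called.

```python
from typing import Any, Dict, List


def build_request(model: str, prompt: str) -> Dict[str, Any]:
    """Return keyword arguments in the OpenAI chat-completions shape."""
    messages: List[Dict[str, str]] = [{"role": "user", "content": prompt}]
    return {"model": model, "messages": messages}


def ask(model: str, prompt: str) -> str:
    """Send the request through LiteLLM and return the reply text.

    Hypothetical helper: needs `pip install litellm` and a provider API key
    in the environment (for example OPENAI_API_KEY or ANTHROPIC_API_KEY).
    """
    # Imported lazily so build_request works without LiteLLM installed.
    from litellm import completion

    response = completion(**build_request(model, prompt))
    # LiteLLM returns responses in the OpenAI response layout.
    return response.choices[0].message.content


# Illustrative model names (assumptions); only the model string differs.
MODELS = ["gpt-4o-mini", "anthropic/claude-3-haiku-20240307"]
requests = [build_request(m, "Summarize LiteLLM in one sentence.") for m in MODELS]
```

Calling `ask(MODELS[0], "Hello")` and `ask(MODELS[1], "Hello")` would then go through the same code path. Which model names are accepted depends on the installed LiteLLM version and the configured providers.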

This content is either user-submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources from search engines such as DuckDuckGo, Google Search, and SearXNG, and of the tool's own website, with minimal to no human editing or review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This content is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.
