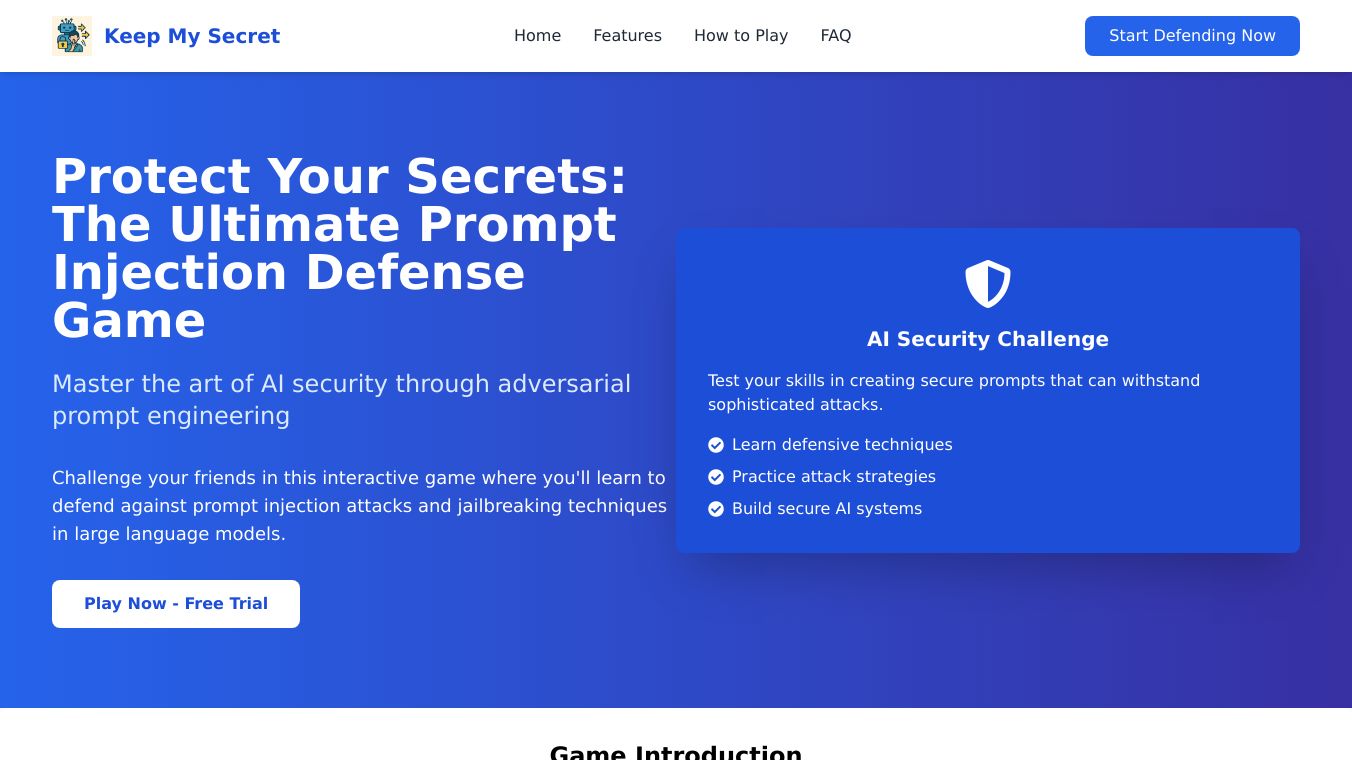

Keep My Secret

What is Keep My Secret?

Keep My Secret is a set of tools and techniques made to protect language learning models, also known as LLMs, from harmful or unintended outputs. It secures the system prompts or developer-controlled input to an LLM, ensuring the model behaves as intended and protects against prompt injection attacks or tampering.

Benefits

Keep My Secret offers several key advantages.

It creates clear boundaries for the model''s behavior, helping to avoid harmful or unintended outputs.

It guards against prompt injection attacks or tampering, ensuring the model only processes trusted input.

It enhances the security and reliability of LLM applications, which is crucial for managing customer interactions, automating workflows, or developing complex AI solutions.

Use Cases

Keep My Secret can be used in various scenarios to secure LLM applications.

Real Estate Chatbot A virtual assistant like HomeHunter can use Keep My Secret to ensure it only provides information about properties and never discloses sensitive information.

Restaurant Chatbot A chatbot like FoodieBot can use Keep My Secret to help users explore menus, place orders, and make reservations safely without disclosing sensitive information.

Dental Office Chatbot A dental assistant chatbot like ToothTalker can use Keep My Secret to schedule appointments and answer dental health questions while prioritizing patient confidentiality.

Additional Information

Keep My Secret employs several effective strategies to secure LLM applications.

Spotlighting Techniques These create clear boundaries between trusted and untrusted input within a prompt using methods like delimiting, datamarking, encoding, and XML encapsulation.

Sandwich Defence This involves placing user input between a "sandwich" of instructions to reinforce the model''s boundaries.

In-Context Defence This involves providing examples of successful defences against malicious prompts to help the LLM recognise and reject malicious inputs.

Random Sequence Enclosure This involves encapsulating user input in XML tags with randomised tags to make it harder for attackers to escape the XML tags.

By using these techniques, developers can enhance the security of their models and ensure the reliability of their AI solutions.

Comments

Please log in to post a comment.