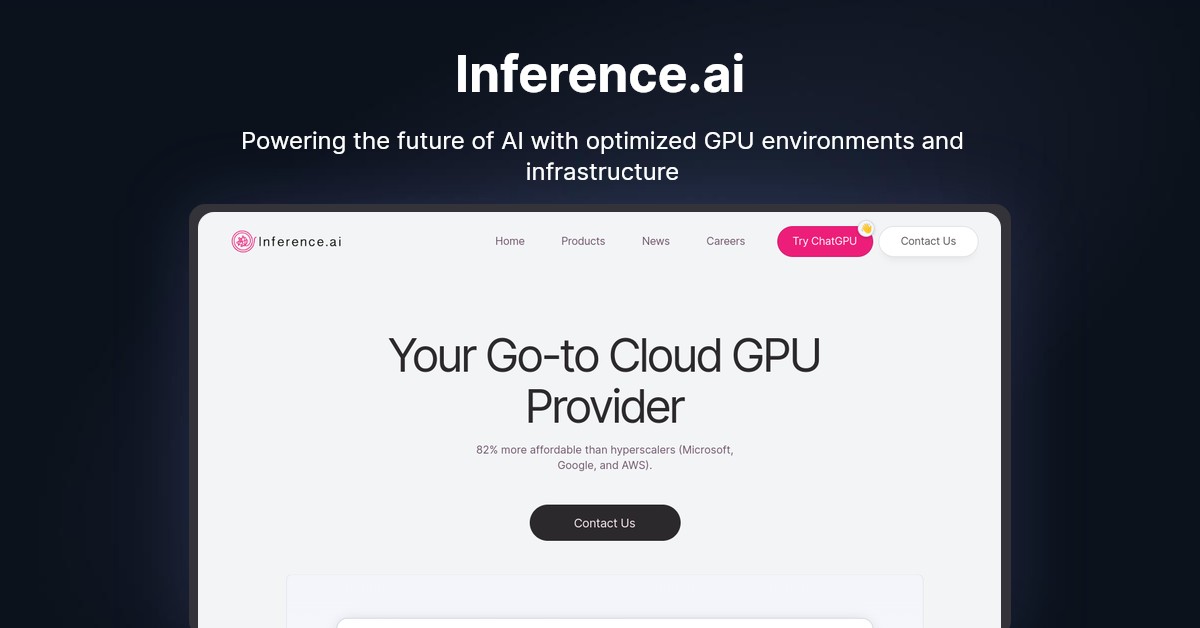

Inference.ai

Inference.ai offers an infrastructure-as-a-service (IaaS) platform for AI and ML developers and researchers. Its GPU inventory includes models such as the H100 SXM, A100 40GB, and RTX A4000. The platform runs in Tier 2+ data centers across more than 200 locations worldwide, which the company says delivers high uptime and low latency. Inference.ai also claims that users can save up to 80% on GPU costs compared with other providers.

Beyond raw hardware, the service offers cloud GPU instances, bare-metal GPU servers, distributed object storage, and custom deployments, among other options. Each GPU instance includes 5TB of free object storage for datasets and other files.

Inference.ai's services support a wide range of workloads, from AI research and development to data analysis and machine learning model training. The platform targets a broad audience, from individual developers and startups to large enterprises working in AI and ML.
