Imarena.AI

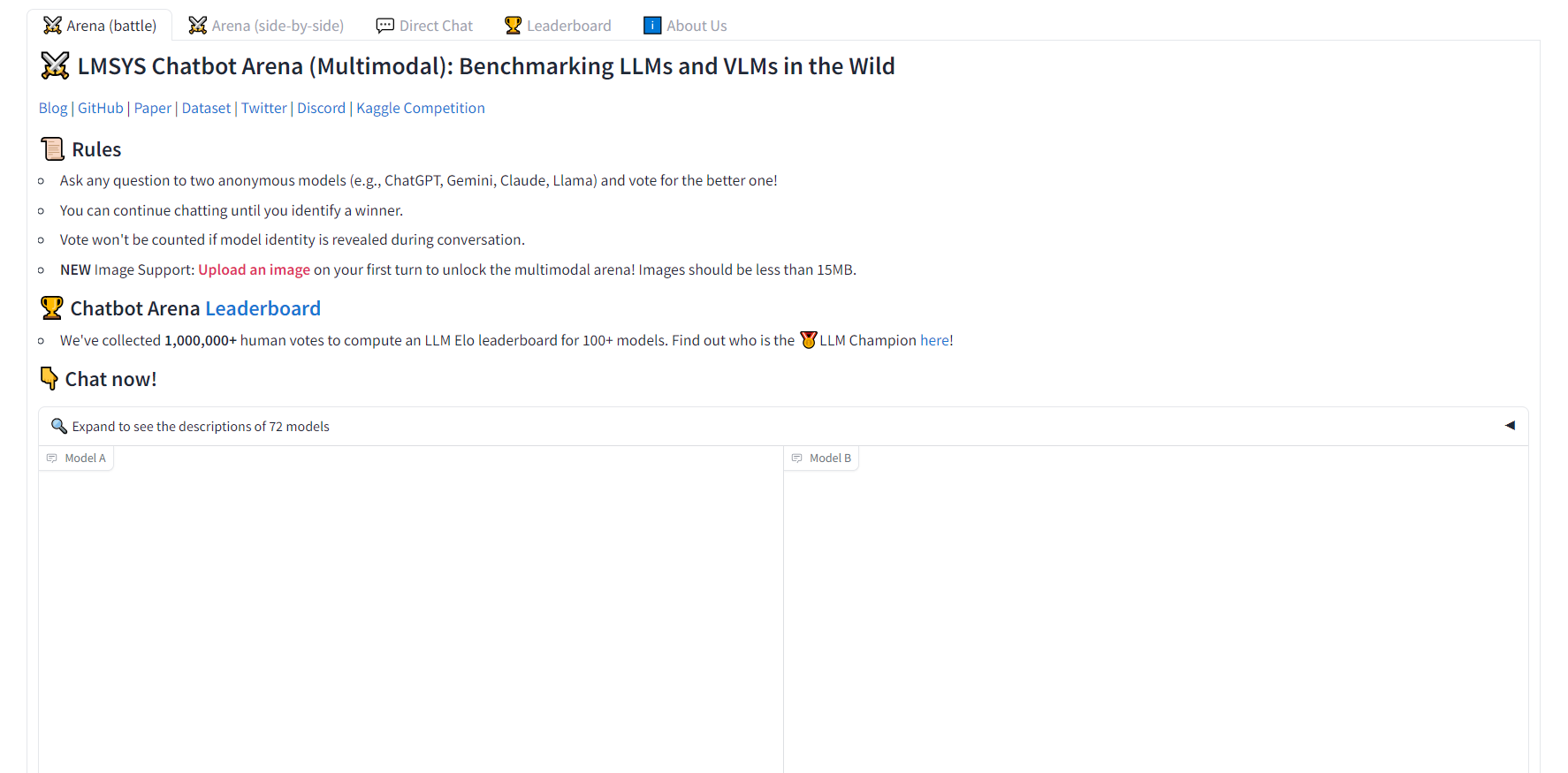

Imarena.AI, formerly Chatbot Arena, is a public platform for evaluating and comparing large language models (LLMs) through anonymous, crowdsourced battles. Launched in 2023 by UC Berkeley’s SkyLab and rebranded in 2025 alongside $100 million in funding, it has collected over 3.5 million votes from users worldwide. Users submit prompts and vote on the responses, and those votes are used to rank models such as GPT-4o, Claude 3.7, and Gemini. The platform is free, open-source, and supports text, image, and vision tasks, making it a go-to for anyone interested in AI development or exploration.

Highlights

- Crowdsourced AI Testing: Over 3.5 million votes power transparent, human-driven AI rankings.

- Free to Use: No account or payment needed to start comparing models.

- Trusted Models: Features top AIs like GPT-4o, Claude, Gemini, and DeepSeek.

- Open-Source Datasets: Shares the world’s largest repository of human preferences for AI research.

- Global Impact: Used by major labs and small teams to test and refine models.

Key Features

Imarena.AI offers a range of tools to make AI testing engaging and impactful:

- Battle Mode: Submit a prompt, get responses from two anonymous AI models, and vote for the better one.

- Side-by-Side Comparison: Select specific models (e.g., GPT-4o vs. Claude) to compare directly.

- Direct Chat: Interact with a single AI model for in-depth testing or exploration.

- Leaderboard: View real-time rankings based on Elo ratings from user votes.

- Multi-Task Support: Test models on text, image generation, or vision tasks.

- Blind Voting: Model identities are hidden during voting to ensure fairness.

- Open Datasets: Access human preference data for research and model improvement.
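The leaderboard is described above as being based on Elo ratings from user votes. As a rough illustration of how pairwise votes can turn into a ranking, here is a minimal sketch of the textbook Elo update. The starting rating of 1000, the K-factor of 32, the model names, and the function names are all assumptions made for this example; the platform's actual rating pipeline may differ.

```python
from typing import Dict, Iterable, List, Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo logistic curve."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(rating_a: float, rating_b: float, score_a: float,
               k: float = 32.0) -> Tuple[float, float]:
    """Return new ratings after one battle.

    score_a is 1.0 if A won, 0.0 if B won, and 0.5 for a tie.
    k (assumed to be 32 here) controls how far a single vote moves a rating.
    """
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    # B's change is the mirror image of A's, so the rating total is conserved.
    new_b = rating_b - k * (score_a - exp_a)
    return new_a, new_b


def rank_models(votes: Iterable[Tuple[str, str, str]],
                initial: float = 1000.0,
                k: float = 32.0) -> List[Tuple[str, float]]:
    """Process votes in order and return models sorted by rating, best first.

    Each vote is (model_a, model_b, outcome) with outcome in {"a", "b", "tie"}.
    """
    outcome_to_score = {"a": 1.0, "b": 0.0, "tie": 0.5}
    ratings: Dict[str, float] = {}
    for model_a, model_b, outcome in votes:
        ra = ratings.setdefault(model_a, initial)
        rb = ratings.setdefault(model_b, initial)
        ratings[model_a], ratings[model_b] = update_elo(
            ra, rb, outcome_to_score[outcome], k
        )
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical votes between placeholder models.
    sample_votes = [
        ("model-x", "model-y", "a"),
        ("model-x", "model-z", "a"),
        ("model-y", "model-z", "tie"),
    ]
    for name, rating in rank_models(sample_votes):
        print(f"{name}: {rating:.1f}")
```

With these assumed settings, a single win between two equally rated models moves the winner up 16 points and the loser down 16. Because Elo processes votes one at a time, the result depends on vote order, which is one reason a production leaderboard might use a different statistical model.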

Feature

- Battle Mode: Compare two anonymous AI responses and vote for the best.

- Side-by-Side Comparison: Test specific models head-to-head with your prompt.

- Direct Chat: Chat with one model for detailed testing or Q&A.

- Leaderboard: See real-time AI rankings based on user votes.

- Multi-Task Support: Evaluate models on text, images, or vision tasks.

- Blind Voting: Ensures unbiased feedback by hiding model identities.

- Open Datasets: Free access to human preference data for AI research.

Benefits

Imarena.AI offers clear advantages for users and the AI community:

- Fun and Engaging: Battle mode makes testing AI feel like a game.

- Free Access: No cost to use, making it open to everyone.

- Unbiased Results: Blind voting keeps model names from swaying votes, which makes rankings fairer.

- Drives AI Progress: User votes help developers improve models.

- Educational Value: Learn about AI capabilities through hands-on testing.

- Community Impact: Join a global effort to shape AI’s future.

Use Cases

Imarena.AI’s versatility makes it useful for a variety of users:

- AI Enthusiasts: Compare models like GPT-4o and Claude to see which performs best.

- Developers: Test pre-release models and use open datasets for research.

- Students and Educators: Explore AI capabilities for learning or teaching.

- Content Creators: Generate creative content and compare model outputs.

- Businesses: Evaluate AI models for potential integration into workflows.

Scenario

- AI Enthusiasts: Test which model writes better poems or answers trivia.

- Developers: Use datasets to train or evaluate new AI models.

- Students/Educators: Learn about AI by comparing responses to academic prompts.

- Content Creators: Generate story ideas and pick the best model for creative tasks.

- Businesses: Test AI models for customer support or marketing content.

Vibes

Imarena.AI feels like a lively arena where AI models compete for your vote. It’s engaging, interactive, and surprisingly addictive, with a community-driven spirit that makes you feel part of something big. The platform’s clean design and playful battle mode create a sense of excitement, while its focus on transparency and fairness adds a layer of trust. Whether you’re a tech nerd or just curious, Imarena.AI makes exploring AI fun and meaningful.

How It Works

Getting started with Imarena.AI is easy:

- Visit Imarena.AI and select a mode (Battle, Side-by-Side, or Direct Chat).

- Enter a prompt (text, image, or vision-related).

- In Battle mode, review two anonymous responses and vote for the better one.

- In Side-by-Side, pick two models to compare directly.

- In Direct Chat, interact with one model for deeper exploration.

- Check the leaderboard to see how models rank based on community votes.
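The Battle mode steps above can be pictured as a small piece of bookkeeping: pick two models, show their answers under neutral labels, and only map the vote back to a real model afterwards. The sketch below is a hypothetical illustration of that blind-voting idea, not the platform's code. The function names, the "Model A" and "Model B" labels, and the model list are assumptions.

```python
import random
from typing import Dict, List, Tuple


def start_battle(models: List[str],
                 rng: random.Random) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Pick two distinct models at random and hide their identities.

    Returns (public, hidden): `public` is what the voter sees,
    `hidden` maps each side back to the real model name.
    """
    left, right = rng.sample(models, 2)
    public = {"left": "Model A", "right": "Model B"}
    hidden = {"left": left, "right": right}
    return public, hidden


def record_vote(hidden: Dict[str, str], choice: str,
                tally: Dict[str, int]) -> str:
    """Credit the chosen side's real model and return its name.

    `choice` must be "left" or "right"; the identity is looked up only
    after the vote has been cast.
    """
    winner = hidden[choice]
    tally[winner] = tally.get(winner, 0) + 1
    return winner


if __name__ == "__main__":
    rng = random.Random()  # unseeded, so pairings vary between runs
    tally: Dict[str, int] = {}
    public, hidden = start_battle(["model-x", "model-y", "model-z"], rng)
    print("Voter sees:", public)
    winner = record_vote(hidden, "left", tally)
    print("Vote credited to:", winner)
```

Keeping the public labels separate from the hidden mapping means nothing shown to the voter can leak which model produced which response.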

Why Choose Imarena.AI?

Imarena.AI stands out for its unique approach to AI evaluation. It’s free, fun, and impactful, letting users directly influence AI development. The blind voting system ensures fairness, while open datasets support research and innovation. With support for multiple tasks and a global community, it’s a powerful tool for anyone curious about AI or looking to test its capabilities. Its academic roots and major funding add credibility, making it a trusted platform for exploring the future of AI.

Getting Started

Ready to test AI models and shape their future? Visit Imarena.AI to start battling, comparing, or chatting with top AI models for free. Explore the Leaderboard to see rankings, or dive into the Hugging Face Space for community insights. Join the global effort with over 3.5 million votes and see how your feedback makes a difference.

