Humanizing AI

Humanizing AI is a concept that focuses on designing and developing artificial intelligence systems to align with human values, needs, and expectations. It aims to make AI more relatable, understandable, and ethical, ensuring that these systems interact with humans in a natural, intuitive, and beneficial way. This approach involves imbuing AI with emotional intelligence, empathy, and effective communication skills, while also considering cultural and societal contexts. The goal is to create AI that is not only intelligent but also responsible and beneficial to humanity.

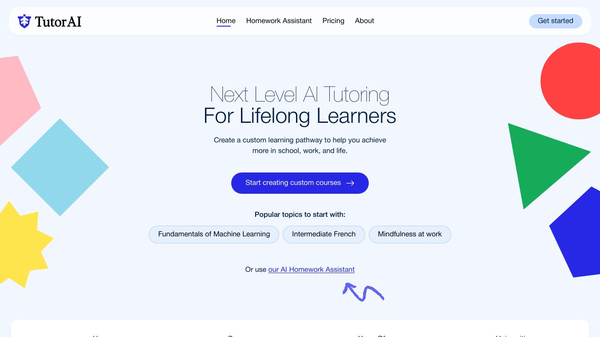

Humanizing AI offers several key benefits. It enhances user experience by making interactions with AI more pleasant, efficient, and satisfying. This can lead to increased trust and loyalty among users. In customer service, humanized AI can power chatbots and virtual assistants that resolve issues with patience and understanding, leading to increased customer satisfaction and reduced operational costs. In healthcare, humanized AI can assist doctors in diagnosing illnesses and creating treatment plans, while also providing patients with emotional support and personalized care. In education, humanized AI can personalize learning experiences, tailoring content and pace to individual student needs, fostering a more engaging and effective learning environment.

Humanizing AI can be applied across many industries and scenarios beyond those above. Some companies have already put it into practice, for example by using AI-powered sentiment analysis to gauge customer emotions during interactions and by developing AI companions for the elderly.
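To make the sentiment-analysis example more concrete, here is a minimal lexicon-based sketch in Python that scores a customer message and picks a response tone. The word lists, the `sentiment_score` and `choose_tone` functions, and the -0.5 threshold are hypothetical illustrations. Production systems typically rely on trained models rather than hand-written lexicons.

```python
import re

# Hypothetical, deliberately tiny lexicons; real systems use trained models.
POSITIVE = {"thanks", "great", "helpful", "resolved", "happy", "perfect"}
NEGATIVE = {"angry", "broken", "useless", "frustrated", "refund", "terrible"}


def sentiment_score(message: str) -> float:
    """Return a score in [-1, 1]: (positive hits - negative hits) / total hits."""
    words = re.findall(r"[a-z']+", message.lower())
    pos = sum(word in POSITIVE for word in words)
    neg = sum(word in NEGATIVE for word in words)
    if pos + neg == 0:
        return 0.0  # no emotional cue words found: treat as neutral
    return (pos - neg) / (pos + neg)


def choose_tone(message: str, threshold: float = -0.5) -> str:
    """Pick a response style based on the customer's apparent emotion."""
    score = sentiment_score(message)
    if score <= threshold:
        return "apologetic"  # strongly negative: acknowledge frustration first
    if score > 0:
        return "friendly"
    return "neutral"


if __name__ == "__main__":
    for msg in ["This app is useless and I'm frustrated!",
                "Thanks, that was really helpful.",
                "Where do I change my password?"]:
        print(f"{sentiment_score(msg):+.2f} {choose_tone(msg)}  {msg}")
```

The first message scores -1.00 and gets an apologetic tone, the second scores +1.00 and gets a friendly one, and the third contains no cue words, so it stays neutral.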

As AI systems become increasingly sophisticated and capable of mimicking human traits, it is crucial to address the ethical considerations that arise. Giving AI human-like qualities blurs the line between machine and person, which can cause confusion about what these systems can actually do and what they are designed to do. This raises fundamental questions about how we should treat AI and what responsibilities we have toward it.

One of the most significant risks of human-like AI is deception and manipulation. If an AI can convincingly mimic human emotions and behavior, it could be used to exploit individuals or influence their decisions in unethical ways. This is particularly concerning in areas like customer service, mental health support, and political campaigning.

We must also be vigilant about bias amplification. AI systems are trained on vast amounts of data, and if that data reflects existing societal biases, the AI is likely to perpetuate and even amplify them. This can lead to unfair or discriminatory outcomes, especially for marginalized groups.

To mitigate these risks, transparency is paramount. We need to understand how AI systems work, how they make decisions, and what data they are trained on. This requires clear documentation, open-source development practices where feasible, and ongoing monitoring. Equally important is accountability: developers and deployers of AI systems must be held responsible for the ethical implications of their creations. This may involve establishing ethical guidelines, implementing auditing mechanisms, and creating avenues for redress when harm occurs. Ultimately, ensuring fairness in AI requires a multifaceted approach that combines technical safeguards, ethical frameworks, and ongoing dialogue among stakeholders.
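One simple auditing check compares how often a system produces a favorable outcome for different groups. This metric is often called the demographic parity difference. The sketch below assumes decisions are available as `(group, decision)` pairs, where 1 means favorable. The group labels, data, and 0.1 tolerance are hypothetical. A single metric like this is a starting point for an audit, not a complete fairness assessment.

```python
from collections import defaultdict


def selection_rates(records):
    """Fraction of favorable decisions (1) per group, from (group, decision) pairs."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        favorable[group] += decision
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_difference(records):
    """Largest gap in selection rate between any two groups (0 means equal rates)."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical loan-approval decisions: 1 = approved, 0 = denied.
    decisions = [("A", 1), ("A", 1), ("A", 1), ("A", 0),
                 ("B", 1), ("B", 0), ("B", 0), ("B", 0)]
    gap = demographic_parity_difference(decisions)
    print(selection_rates(decisions))  # {'A': 0.75, 'B': 0.25}
    print(f"gap = {gap:.2f}")          # gap = 0.50
    if gap > 0.1:  # hypothetical tolerance chosen for illustration
        print("Flag for human review: approval rates differ across groups.")
```

A large gap does not prove discrimination by itself, because groups may legitimately differ on relevant factors. That is why the sketch flags the result for human review instead of acting on it automatically.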

The integration of humans into AI systems, often referred to as "human-in-the-loop" (HITL), underscores the critical role people play in keeping AI aligned with human values and needs. Rather than being replaced by automation, humans remain essential for training, refining, and governing AI behavior. In AI development, HITL takes several forms. Humans provide labeled data, the foundation of supervised learning. They also validate AI outputs, correcting errors and biases that algorithms might otherwise perpetuate. This feedback drives iterative improvement, steering AI toward more accurate and reliable performance. Human oversight is especially important in sensitive domains such as healthcare and criminal justice, where algorithms can inadvertently reflect existing societal biases and produce unfair or discriminatory outcomes. Human reviewers can identify and mitigate these biases, helping AI systems operate ethically and equitably.
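A common way to put a human in the loop is to let the model handle confident predictions automatically and route uncertain ones to a reviewer. The reviewer's corrections are then kept as new labeled data for retraining. The sketch below assumes a model that returns a `(label, confidence)` pair. `toy_model`, `simulated_reviewer`, and the 0.8 threshold are hypothetical stand-ins for a real classifier and a real review queue.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


@dataclass
class HumanInTheLoop:
    model: Callable[[str], Prediction]
    reviewer: Callable[[str, str], str]  # (input, model label) -> corrected label
    threshold: float = 0.8
    # Human-verified (input, label) pairs, reusable as training data.
    labeled: List[Tuple[str, str]] = field(default_factory=list)

    def decide(self, item: str) -> str:
        label, confidence = self.model(item)
        if confidence >= self.threshold:
            return label  # confident enough to act automatically
        corrected = self.reviewer(item, label)  # uncertain: ask a human
        self.labeled.append((item, corrected))  # keep feedback for retraining
        return corrected


def toy_model(text: str) -> Prediction:
    """Hypothetical classifier: confident only when an obvious keyword appears."""
    if "refund" in text.lower():
        return "billing", 0.95
    return "general", 0.55


def simulated_reviewer(text: str, suggested: str) -> str:
    """Stand-in for a human reviewer; relabels password questions as 'account'."""
    return "account" if "password" in text.lower() else suggested


if __name__ == "__main__":
    loop = HumanInTheLoop(toy_model, simulated_reviewer)
    print(loop.decide("I want a refund"))       # billing (automatic)
    print(loop.decide("I forgot my password"))  # account (human-corrected)
    print(loop.labeled)                         # [('I forgot my password', 'account')]
```

Raising the threshold sends more items to humans, which costs reviewer time but catches more errors. In sensitive domains, a conservative threshold is usually the safer starting point.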

The future of AI hinges on robust collaboration between humans and machines. This partnership extends beyond development to encompass deployment and monitoring. Humans can leverage AI to augment their own abilities, making better decisions and solving complex problems more efficiently. Simultaneously, AI benefits from human judgment, contextual awareness, and creativity. Moreover, HITL addresses emerging challenges in areas such as education and cybersecurity. In education, human educators can utilize AI-powered tools to personalize learning experiences while retaining control over pedagogical strategies. In cybersecurity, human analysts can work alongside AI systems to detect and respond to evolving threats, combining human intuition with AI's analytical power.

Making AI feel more human is a fascinating and increasingly important area of development. We're moving beyond simple task completion toward AI interactions that are engaging, relatable, and even enjoyable. But how do we bridge the gap between cold, calculating algorithms and something that feels genuinely human?

One of the most powerful tools at our disposal is prompt engineering. By carefully crafting the input, we can significantly influence the AI's output. Think of the prompt as a detailed brief: the more specific and nuanced it is, the better the chance of eliciting a natural and engaging response. For example, instead of simply asking an AI to "write a story," you could specify the tone, setting, character archetypes, and intended audience.

Natural language processing (NLP) is crucial here. Advances in NLP allow AI to better understand the nuances of human language, including idioms, sarcasm, and emotional cues. This understanding, in turn, lets it generate responses that are more contextually appropriate and human-like.

Another key aspect is giving the AI a personality. This goes beyond avoiding robotic phrasing: it involves defining a consistent persona with specific traits, quirks, and even a backstory. Consider how different it would feel to interact with an AI assistant designed to be cheerful and optimistic versus one that is reserved and professional.

Of course, as AI becomes more human-like, new considerations emerge. People need to learn to recognize when they are interacting with an AI rather than a human, and robust cybersecurity measures are needed to prevent malicious impersonation.
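The prompt-engineering and persona ideas can be combined into a structured prompt. The minimal Python sketch below turns a persona and a story brief into role-tagged chat messages. The `Persona` and `StoryBrief` classes and `build_messages` are hypothetical. The list of `{"role": ..., "content": ...}` dictionaries is an assumed format that follows a convention used by many chat-style model APIs, and the sketch does not call any specific service.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Persona:
    name: str
    traits: List[str]
    style: str


@dataclass
class StoryBrief:
    tone: str
    setting: str
    characters: List[str]
    audience: str


def build_messages(persona: Persona, brief: StoryBrief) -> List[Dict[str, str]]:
    """Turn a persona and a detailed brief into role-tagged chat messages."""
    # The system message fixes the persona so it stays consistent across turns.
    system = (
        f"You are {persona.name}, an assistant who is {', '.join(persona.traits)}. "
        f"Write in a {persona.style} style and stay in character."
    )
    # The user message carries the specific brief: tone, setting, characters, audience.
    user = (
        f"Write a short story with a {brief.tone} tone, set in {brief.setting}. "
        f"Include these character archetypes: {', '.join(brief.characters)}. "
        f"The intended audience is {brief.audience}."
    )
    return [{"role": "system", "content": system},
            {"role": "user", "content": user}]


if __name__ == "__main__":
    cheerful = Persona("Sunny", ["cheerful", "optimistic", "curious"], "warm, conversational")
    brief = StoryBrief("whimsical", "a lighthouse on a foggy island",
                       ["a retired sailor", "a talking seagull"], "children aged 8-10")
    for message in build_messages(cheerful, brief):
        print(f"[{message['role']}] {message['content']}")
```

Keeping the persona separate from the brief means you could swap in a reserved, professional persona without rewriting the story request. Only the system message changes.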

Human-centered AI is an emerging field focused on designing AI systems that prioritize human needs, values, and well-being. Current human-centered AI research explores methods to make AI more transparent, accountable, and fair, ensuring that AI systems augment human capabilities rather than replace them. This interdisciplinary field draws on computer science, the social sciences, and the humanities to address the complex interactions between humans and AI. Leading institutions such as the Stanford Institute for Human-Centered Artificial Intelligence (HAI) are at the forefront of this movement, conducting research on the ethical, social, and economic implications of AI. Similarly, the AI Now Institute, founded at New York University, examines the societal impact of AI, with a focus on issues like bias, inequality, and power. The long-term success of AI depends on our ability to develop systems that are aligned with human values and contribute positively to society. Humanizing AI means creating AI that is not only intelligent but also empathetic, adaptable, and capable of collaborating effectively with humans. As AI becomes increasingly integrated into our lives, human-centered research and design principles will be essential for ensuring that these technologies benefit everyone.

This content is either user submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources from search engines like DuckDuckGo, Google Search, and SearXNG, and directly from the tool's own website and with minimal to no human editing/review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.
