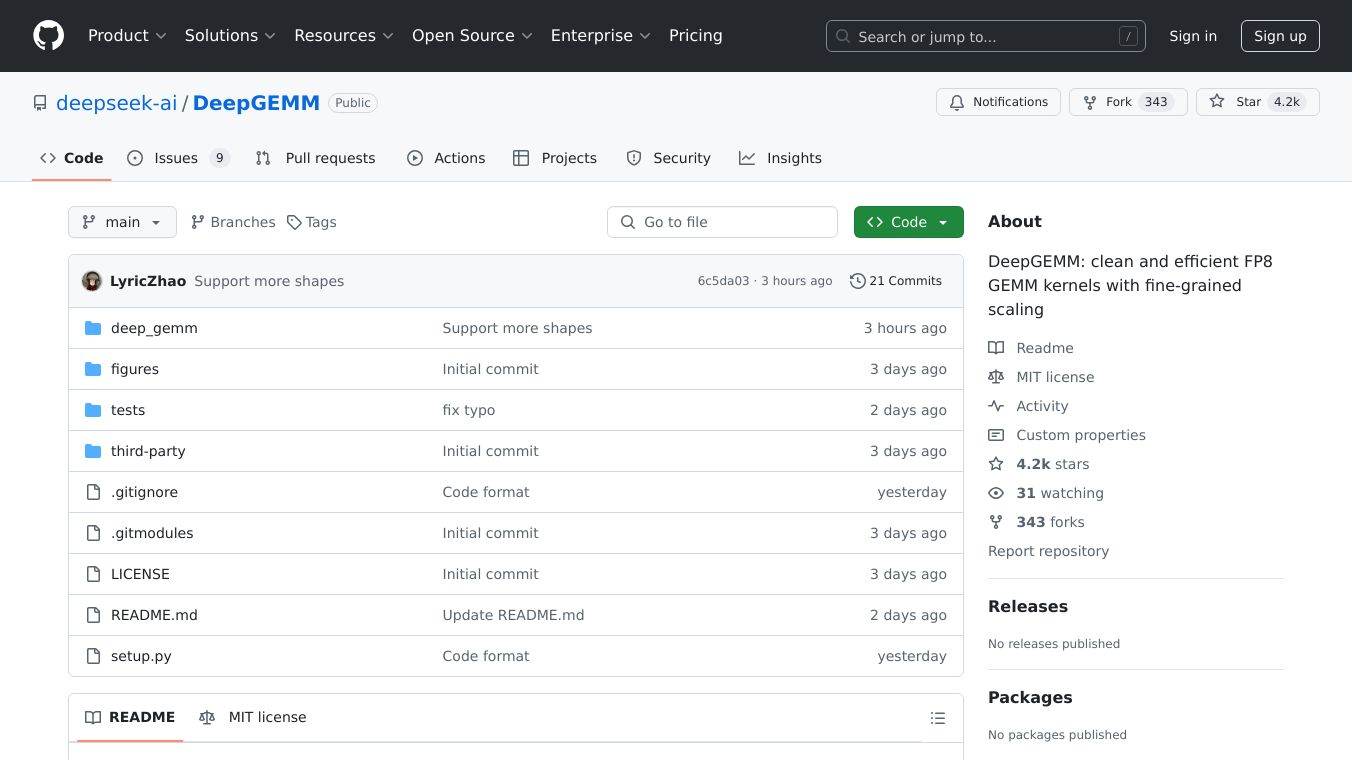

DeepGEMM

DeepGEMM is a CUDA library for fast, efficient FP8 general matrix multiplications (GEMMs), a crucial part of AI models. Created by DeepSeek AI, it is optimized for NVIDIA Hopper tensor cores and supports both dense and Mixture of Experts (MoE) models, which makes it well suited to training and running DeepSeek's V3 and R1 models.

Key Features

DeepGEMM has several notable features:

Hopper Architecture Optimization: It is built for NVIDIA Hopper tensor cores. Because FP8 tensor core accumulation is imprecise, it uses two-level accumulation, periodically promoting partial results to higher-precision accumulators on CUDA cores.

Blazing Fast Performance: It reaches up to 1350+ FP8 TFLOPS on Hopper GPUs, giving large, compute-heavy models high throughput.

Compact and Powerful: Its core logic is only about 300 lines of code, which makes it easy for developers and researchers to read, learn from, and adapt.

Just-In-Time (JIT) Compiled: Written in CUDA, it compiles all kernels at runtime using a lightweight JIT module, so nothing needs to be compiled during installation.

Supports Dense and MoE Models: It works with both standard dense models and specialized Mixture of Experts (MoE) models.
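The accumulation issue mentioned under Hopper Architecture Optimization can be illustrated in plain Python. This is a toy model, not DeepGEMM code: it assumes an accumulator that keeps only 8 explicit mantissa bits (far fewer than real hardware, chosen so the effect shows up on small inputs) and a promotion interval of 128 products; both values and all function names are illustrative assumptions. Summing in the narrow accumulator alone stalls once the running total outgrows its precision, while flushing the partial sum into a wide accumulator every `interval` products keeps each partial small enough to stay exact.

```python
import math


def round_to_bits(x: float, mantissa_bits: int) -> float:
    """Round x to the nearest value with `mantissa_bits` explicit mantissa bits (toy accumulator model)."""
    if x == 0.0 or not math.isfinite(x):
        return x
    m, e = math.frexp(x)  # x == m * 2**e with 0.5 <= |m| < 1
    scale = 2.0 ** (mantissa_bits + 1)  # +1 accounts for the implicit leading bit
    return math.ldexp(round(m * scale) / scale, e)


def dot_low_precision(a, b, acc_bits):
    """Dot product accumulated entirely in the narrow accumulator."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = round_to_bits(acc + x * y, acc_bits)
    return acc


def dot_with_promotion(a, b, acc_bits, interval):
    """Two-level accumulation: narrow partial sums promoted to a wide total every `interval` products."""
    total = 0.0    # wide accumulator (Python float64 stands in for FP32 here)
    partial = 0.0  # narrow accumulator
    for i, (x, y) in enumerate(zip(a, b), start=1):
        partial = round_to_bits(partial + x * y, acc_bits)
        if i % interval == 0:
            total += partial  # promote the partial sum to the wide accumulator
            partial = 0.0
    return total + partial  # flush any remainder
```

For example, `dot_low_precision([1.0] * 4096, [1.0] * 4096, acc_bits=8)` stalls at 512.0, because 513 is not representable with 9 significant bits, while `dot_with_promotion` on the same inputs with `interval=128` returns the exact 4096.0.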
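To put the 1350+ TFLOPS figure in context, GEMM throughput is conventionally computed by counting 2 * M * N * K floating-point operations (one multiply and one add per multiply-accumulate) and dividing by the measured kernel time. The helper names below are hypothetical, and the shapes used with them are arbitrary examples rather than DeepGEMM benchmark configurations.

```python
def gemm_flops(m: int, n: int, k: int) -> int:
    """FLOPs for an (m x k) by (k x n) GEMM: one multiply and one add per multiply-accumulate."""
    return 2 * m * n * k


def gemm_tflops(m: int, n: int, k: int, seconds: float) -> float:
    """Achieved throughput in TFLOPS, given a measured kernel time in seconds."""
    return gemm_flops(m, n, k) / seconds / 1e12


def time_at_tflops(m: int, n: int, k: int, tflops: float) -> float:
    """Kernel time in seconds implied by a given sustained throughput in TFLOPS."""
    return gemm_flops(m, n, k) / (tflops * 1e12)
```

As a worked example, a 4096 x 4096 x 4096 GEMM is about 1.37e11 FLOPs, so `time_at_tflops(4096, 4096, 4096, 1350.0)` comes out to roughly 1.02e-4 seconds, or about 0.10 ms.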
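The JIT approach can be shown with a Python analogue: generate source code with the problem shape baked in as literal constants, compile it on first use, and cache the result so later calls with the same shape skip compilation. DeepGEMM does this for CUDA kernels through its own JIT module; everything below (`_KERNEL_TEMPLATE`, `get_kernel`, the naive loop body) is a hypothetical illustration built on Python's built-in `compile`/`exec` and `functools.lru_cache`, not DeepGEMM's API.

```python
import functools

# Hypothetical kernel template: N and K are substituted as literal constants,
# so each compiled variant is specialized to one shape.
_KERNEL_TEMPLATE = """
def kernel(a, b):
    # Computes a @ b^T for a (M x {K}) and b ({N} x {K}).
    return [
        [sum(row[t] * b[j][t] for t in range({K})) for j in range({N})]
        for row in a
    ]
"""


@functools.lru_cache(maxsize=None)
def get_kernel(n: int, k: int):
    """Generate, compile, and cache a kernel specialized for (n, k) on first use."""
    source = _KERNEL_TEMPLATE.format(N=n, K=k)
    namespace = {}
    code = compile(source, f"<kernel n={n} k={k}>", "exec")
    exec(code, namespace)  # defines `kernel` inside namespace
    return namespace["kernel"]
```

Calling `get_kernel(2, 3)` twice returns the same compiled function, and `get_kernel.cache_info().misses` counts how many distinct shapes have been compiled so far. Keying the cache on the shape is what lets each variant treat N and K as constants; the trade-off is a one-time compilation cost the first time a new shape appears.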

Benefits

DeepGEMM offers several benefits for AI development:

Faster Training Times: Optimized for FP8 operations, it speeds up the training of large AI models.

MoE Model Deployment: Support for MoE grouped GEMMs makes it well suited to deploying MoE models in real-world applications.

Efficient Resource Usage: Its optimized performance helps get more out of existing GPU infrastructure, improving the return on that investment.

Developer-Friendly Design: Easy to integrate into existing projects with its clean and understandable codebase.

Hassle-Free Deployment: JIT compilation makes it easy to set up and run in different environments.

Use Cases

DeepGEMM is versatile and can be used in various scenarios:

Normal GEMMs for Dense Models: Use it for basic FP8 matrix multiplications.

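Grouped GEMMs for MoE Models: Supports a contiguous layout, suited to training and inference prefilling, and a masked layout, suited to real-time inference decoding (for example when CUDA graphs are used and the CPU does not know in advance how many tokens each expert will receive).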
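The two grouped layouts differ in how the tokens routed to each expert are arranged. The plain-Python reference below sketches their semantics under stated assumptions: in the contiguous layout, rows for all experts are concatenated along M and a per-row index (`m_indices` here) names the expert whose weights that row uses; in the masked layout, each expert gets a fixed-capacity, padded block of rows and a per-expert count (`masked_m` here) says how many of them are valid. The function names and the convention of storing `b` as N x K are assumptions for illustration. Padded rows, which a real kernel would simply not compute, are filled with zeros here so the output shape stays fixed.

```python
from typing import List

Matrix = List[List[float]]


def matmul_nt(a: Matrix, b: Matrix) -> Matrix:
    """Reference a @ b^T, with a stored as M x K and b stored as N x K."""
    return [[sum(x * y for x, y in zip(row, col)) for col in b] for row in a]


def grouped_gemm_contiguous(a: Matrix, b_groups: List[Matrix], m_indices: List[int]) -> Matrix:
    """Contiguous layout: rows for all experts are concatenated along M;
    m_indices[i] is the expert whose weights row i is multiplied with."""
    return [matmul_nt([row], b_groups[g])[0] for row, g in zip(a, m_indices)]


def grouped_gemm_masked(a_groups: List[Matrix], b_groups: List[Matrix], masked_m: List[int]) -> List[Matrix]:
    """Masked layout: each expert owns a padded block of rows, and only the
    first masked_m[g] rows of block g hold valid tokens."""
    outs = []
    for a_g, b_g, valid in zip(a_groups, b_groups, masked_m):
        computed = matmul_nt(a_g[:valid], b_g)  # only the valid rows are computed
        padding = [[0.0] * len(b_g) for _ in range(len(a_g) - valid)]  # sketch-only zero fill
        outs.append(computed + padding)
    return outs
```

For instance, with expert 0 holding the 2 x 2 identity and expert 1 holding twice the identity, `grouped_gemm_contiguous([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], experts, [0, 0, 1])` returns `[[1.0, 2.0], [3.0, 4.0], [10.0, 12.0]]`.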

Cost/Price

The article does not provide specific cost or pricing information for DeepGEMM.

Funding

The article does not mention any funding details for DeepGEMM.

Reviews/Testimonials

DeepGEMM has shown impressive results in performance benchmarks. It achieves a 1.4x speedup over CUTLASS-based implementations for normal GEMMs and a 1.2x speedup for grouped GEMMs, demonstrating consistent speedup across various configurations.
