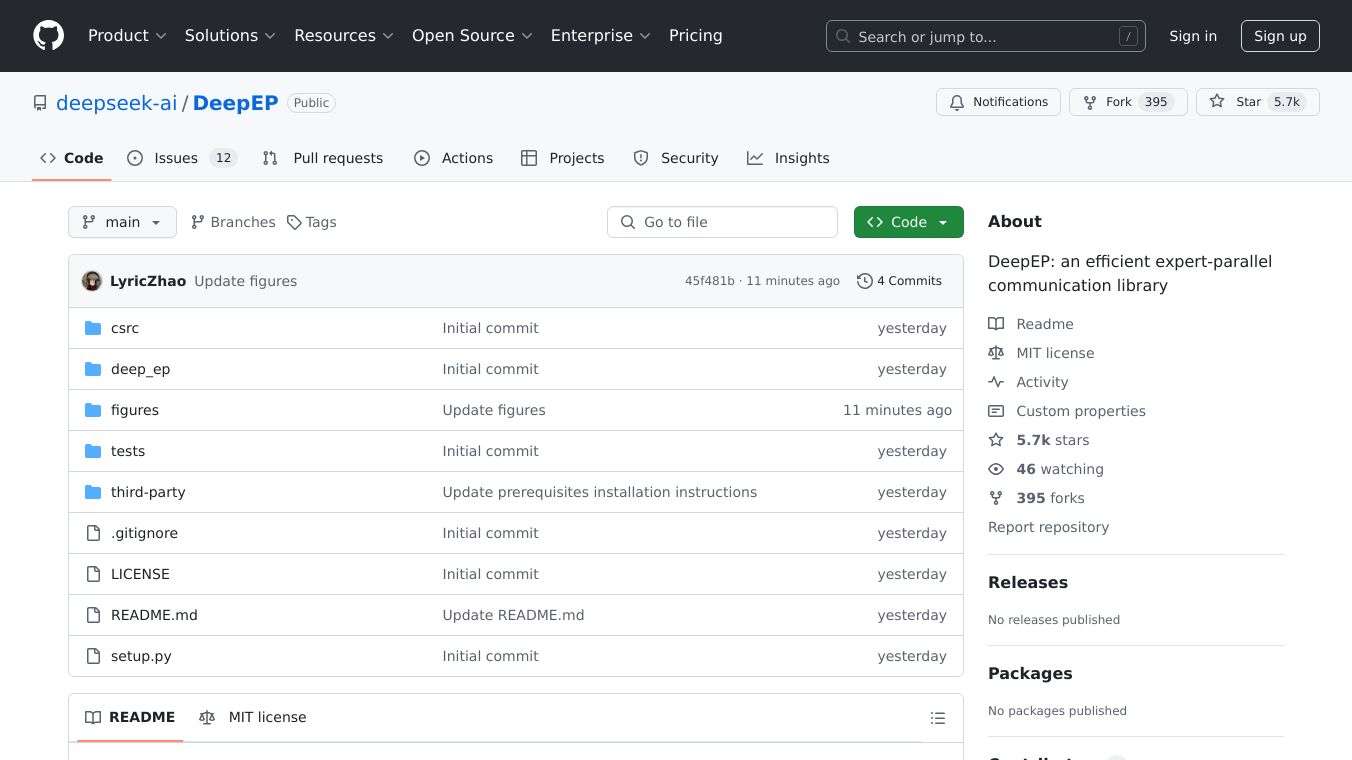

DeepEP

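DeepSeek AI presents DeepEP, an open-source communication library for Mixture of Experts (MoE) models that use expert parallelism. It speeds up the all-to-all exchange that sends (dispatches) tokens to the GPUs hosting their selected experts and gathers (combines) the expert outputs back. It works for both training and inference.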
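The dispatch and combine pattern can be illustrated with a toy, single-process simulation. Everything in it is hypothetical: the function names, the placement rule (expert `e` lives on rank `e // experts_per_rank`), and the toy experts. It is not DeepEP's API, which runs these steps as GPU communication kernels.

```python
from collections import defaultdict

# Hypothetical toy experts: expert e scales its input by (e + 1).
def expert_forward(expert_id, x):
    return x * (expert_id + 1)

def dispatch(tokens, topk_ids, experts_per_rank):
    """Send each token to every rank hosting one of its top-k experts.

    Returns {rank: [(token_index, slot, expert_id, value), ...]}.
    Assumed placement: expert e lives on rank e // experts_per_rank.
    """
    buckets = defaultdict(list)
    for t, (x, ids) in enumerate(zip(tokens, topk_ids)):
        for slot, e in enumerate(ids):
            buckets[e // experts_per_rank].append((t, slot, e, x))
    return dict(buckets)

def run_experts(buckets):
    """Each rank applies its local experts to the tokens it received."""
    return {
        rank: [(t, slot, expert_forward(e, x)) for t, slot, e, x in items]
        for rank, items in buckets.items()
    }

def combine(outputs, topk_weights, num_tokens):
    """Gather expert outputs back to their source tokens as a weighted sum."""
    result = [0.0] * num_tokens
    for items in outputs.values():
        for t, slot, y in items:
            result[t] += topk_weights[t][slot] * y
    return result

tokens = [1.0, 2.0, 3.0]
topk_ids = [[0, 3], [1, 2], [3, 0]]              # top-2 experts per token
topk_weights = [[0.5, 0.5], [0.25, 0.75], [1.0, 0.0]]
buckets = dispatch(tokens, topk_ids, experts_per_rank=2)  # 4 experts over 2 ranks
out = combine(run_experts(buckets), topk_weights, len(tokens))
print(out)  # [2.5, 5.5, 12.0]
```

In a real system, `dispatch` and `combine` are the two all-to-all steps that cross GPU boundaries. This is the traffic DeepEP's kernels are designed to accelerate.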

Key Features

DeepEP has two main types of kernels:

Normal kernels are built for high-throughput workloads such as training and inference prefilling. They use NVLink within a node and RDMA across nodes, forwarding data between the two domains for fast transfers.

Low-latency kernels are built for latency-sensitive inference decoding. They use pure RDMA to minimize delays and handle the small batches typical of decoding well.
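The difference between the two kernel types can be sketched as a routing choice. This is a simplified model under stated assumptions, not DeepEP's implementation. It assumes 8 GPUs per node, and it assumes that in normal mode a cross-node transfer first goes over RDMA to the GPU with the same local index on the destination node, then over NVLink. In low-latency mode, every transfer goes straight to its destination over RDMA, matching the "pure RDMA" description. All names are hypothetical.

```python
GPUS_PER_NODE = 8  # assumed node size for this sketch

def plan_route(src, dst, mode="normal", gpus_per_node=GPUS_PER_NODE):
    """Return the (link, from_rank, to_rank) hops for one transfer.

    Simplified model, not DeepEP's actual routing:
    - "normal": NVLink inside a node; across nodes, one RDMA hop to the
      GPU with the same local index on the destination node (assumed
      relay), then an NVLink hop if that relay is not the destination.
    - "low_latency": a single direct RDMA hop to the destination.
    """
    if src == dst:
        return []
    if mode == "low_latency":
        return [("rdma", src, dst)]
    src_node, src_local = divmod(src, gpus_per_node)
    dst_node = dst // gpus_per_node
    if src_node == dst_node:
        return [("nvlink", src, dst)]
    relay = dst_node * gpus_per_node + src_local
    hops = [("rdma", src, relay)]
    if relay != dst:
        hops.append(("nvlink", relay, dst))
    return hops

def rdma_transfers(src, dst_ranks, gpus_per_node=GPUS_PER_NODE):
    """Normal-mode model: a token bound for several GPUs on one remote
    node crosses the network once, then fans out over NVLink."""
    src_node = src // gpus_per_node
    return len({d // gpus_per_node for d in dst_ranks} - {src_node})

print(plan_route(1, 12))                      # [('rdma', 1, 9), ('nvlink', 9, 12)]
print(plan_route(1, 12, mode="low_latency"))  # [('rdma', 1, 12)]
print(rdma_transfers(0, [8, 9, 10, 3]))       # 1
```

The trade-off this models: relaying over NVLink lets a token cross the slower network once per node, which favors throughput. A single direct hop avoids the extra relay step, which favors latency when batches are small.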

Benefits

DeepEP provides high throughput and low latency, making GPU-to-GPU communication faster and more efficient. It supports low-precision operations such as FP8, which shrink the data each token occupies and help save memory and bandwidth. DeepEP works in both single-machine (intranode) and multi-machine (internode) setups.
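A quick calculation shows why FP8 roughly halves the data per token compared with BF16. The hidden size of 7168 and the block-scaled layout (one FP32 scale per 128 channels) are assumptions for illustration, not figures taken from the article.

```python
import math

def bytes_per_token(hidden, elem_bytes, scale_block=None, scale_bytes=4):
    """Bytes needed to ship one token's hidden vector.

    If scale_block is given, add one scale factor (scale_bytes each) per
    block of scale_block channels, as block-scaled FP8 formats do.
    """
    size = hidden * elem_bytes
    if scale_block is not None:
        size += math.ceil(hidden / scale_block) * scale_bytes
    return size

HIDDEN = 7168  # assumed hidden size
bf16 = bytes_per_token(HIDDEN, elem_bytes=2)                   # 2 bytes per element
fp8 = bytes_per_token(HIDDEN, elem_bytes=1, scale_block=128)   # assumed FP32 scale per 128 channels
print(bf16, fp8, round(fp8 / bf16, 3))  # 14336 7392 0.516
```

Under these assumptions, the scale factors add only about 3% on top of the FP8 payload, so the saving stays close to 2x.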

Use Cases

DeepEP shines in:

Real-Time Inference. The low-latency kernels keep response times short, which suits applications that process data in real time.

Large-Scale Training. The high-throughput kernels move large volumes of token data efficiently, speeding up training.

Resource-Limited Environments. FP8 support reduces the memory and bandwidth each token needs, which helps when memory is tight.

Cost/Price

The cost of the product is not shared in the article.

Funding

The funding details of the product are not shared in the article.

Reviews/Testimonials

DeepEP gained popularity quickly, collecting over 1,000 stars on GitHub within 20 minutes of its announcement. This reflects strong interest and approval from developers and points to its potential impact on AI development.
