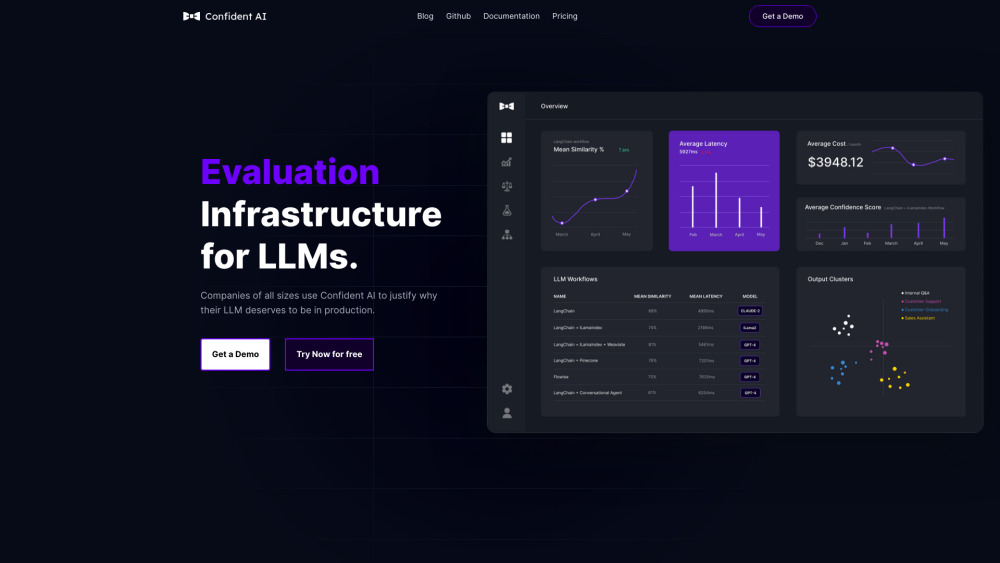

Confident AI

Confident AI is a platform for evaluating and testing Large Language Models (LLMs). Its core offering is DeepEval, an open-source Python framework for writing unit tests for LLM outputs, so developers can evaluate a model in a few lines of code. On top of this, the platform provides A/B testing, evaluation against ground-truth answers, output classification, and reporting dashboards, all aimed at helping AI engineers build more robust and reliable LLM applications. Because evaluation results are tracked in one central place, teams can compare runs, iterate faster, and base decisions on recorded results.
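To make "unit tests for LLMs" concrete, the sketch below shows the general shape such a test takes. It sends an input to a model, scores the output against a ground-truth answer, and fails if the score falls below a threshold. This is a minimal plain-Python sketch and not DeepEval's API. The stub model `fake_llm`, the `EvalCase` container, the word-overlap metric, and the threshold values are all hypothetical stand-ins. Consult DeepEval's documentation for its actual test-case and metric classes.

```python
# Hypothetical sketch: NOT the DeepEval API. It mimics the shape of an
# LLM unit test (input -> model output -> metric score -> pass/fail)
# using only the standard library.
import re
from dataclasses import dataclass


@dataclass
class EvalCase:
    input: str
    actual_output: str
    expected_output: str  # ground-truth answer


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    canned = {
        "What is the capital of France?": "The capital of France is Paris.",
    }
    return canned.get(prompt, "I don't know.")


def tokens(text: str) -> set[str]:
    """Lowercased set of alphanumeric words; punctuation is dropped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(case: EvalCase) -> float:
    """Fraction of ground-truth words that also appear in the model output."""
    expected = tokens(case.expected_output)
    if not expected:
        return 0.0
    return len(expected & tokens(case.actual_output)) / len(expected)


def assert_passes(case: EvalCase, threshold: float = 0.5) -> float:
    """Raise AssertionError if the score is below the threshold."""
    score = overlap_score(case)
    assert score >= threshold, f"score {score:.2f} below threshold {threshold}"
    return score


def test_capital_question() -> None:
    prompt = "What is the capital of France?"
    case = EvalCase(
        input=prompt,
        actual_output=fake_llm(prompt),
        expected_output="Paris is the capital of France.",
    )
    assert_passes(case, threshold=0.8)


if __name__ == "__main__":
    test_capital_question()
    print("all tests passed")
```

Replacing the word-overlap score with a stronger metric, such as semantic similarity or a model-graded judgment, would leave the structure unchanged. Each test is still an input, an output, a score, and a threshold.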

DeepEval, Confident AI's flagship tool, stands out for its simplicity. Tests are short Python functions, so writing them takes little code and no deep machine-learning expertise, which makes the tool accessible to a broad range of developers. The platform's ability to detect breaking changes and shorten the path to production is especially valuable for enterprises that need to deploy LLM solutions with confidence. Its monitoring and reporting features also surface insights that can help optimize LLM applications and reduce operational costs.