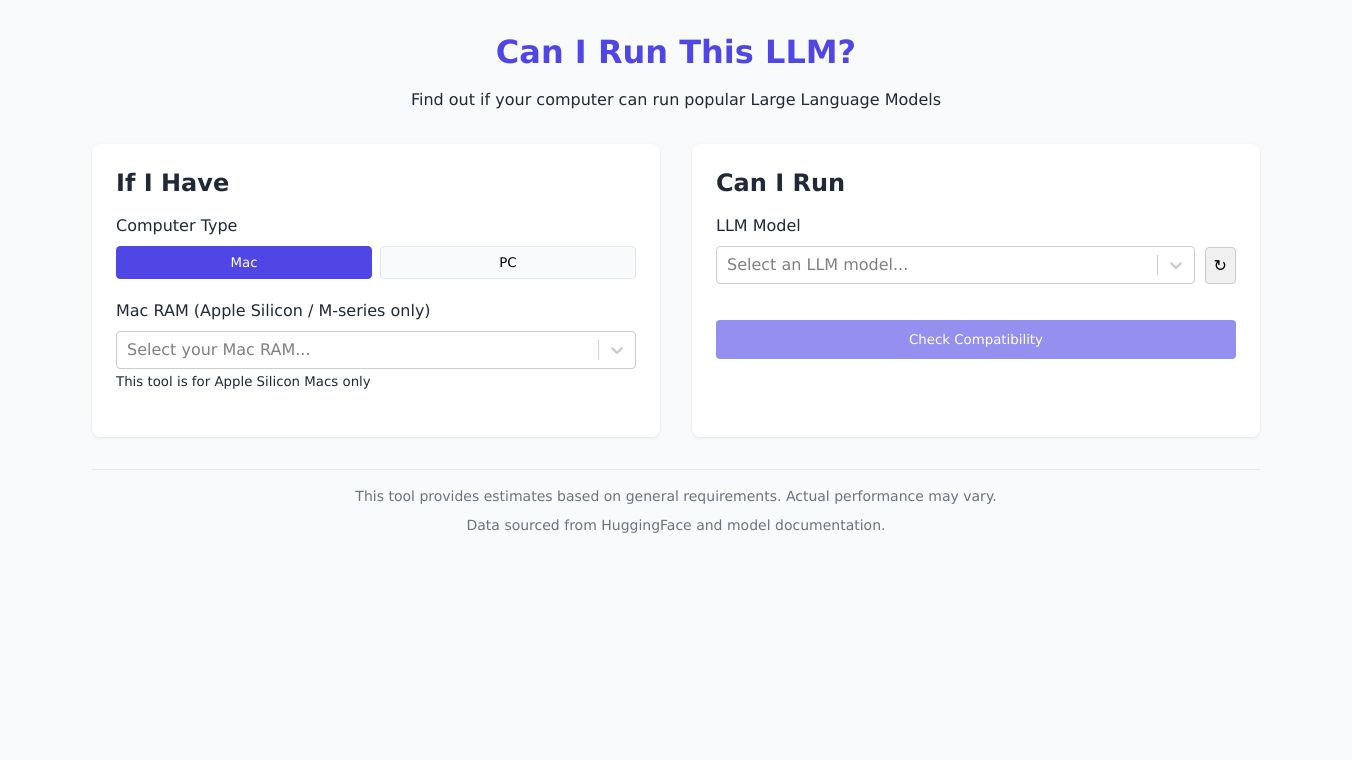

Can I Run This LLM? (Vercel.app)

Running Large Language Models (LLMs) on your own device has several benefits: better privacy, offline use, more control over your data and models, and lower costs. Let us look at some of the most popular tools for running LLMs locally and highlight the key features of each.

Key Features

AnythingLLM

AnythingLLM is an open-source AI app that lets you run LLMs on your desktop. It offers an easy way to chat with documents, run AI agents, and handle various AI tasks while keeping all data safe on your machine.

- Local processing system that keeps all data on your machine.

- Support for multiple models, including connections to various AI providers.

- Document analysis engine handling PDFs, Word files, and code.

- Built-in AI agents for task automation and web interaction.

- Developer API enabling custom integrations and extensions.
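The developer API is a plain HTTP interface, so it can be called from any language. The sketch below builds a chat request for one workspace using only Python's standard library. The base URL (`http://localhost:3001/api`), the `/v1/workspace/{slug}/chat` path, the `mode` field, and the `textResponse` reply field are assumptions based on AnythingLLM's self-hosted API and may differ between versions. The workspace slug and API key are hypothetical placeholders, so check the API documentation served by your own instance before relying on them.

```python
import json
import urllib.request

# Assumption: a self-hosted AnythingLLM instance serving its developer API
# under /api on port 3001. Adjust for your installation.
BASE_URL = "http://localhost:3001/api"


def build_workspace_chat_request(
    api_key: str, workspace_slug: str, message: str, mode: str = "chat"
) -> urllib.request.Request:
    """Build (but do not send) a chat request for one AnythingLLM workspace.

    The endpoint path and the "mode" field are assumptions; the API key is
    generated in the app's settings and sent as a bearer token.
    """
    url = f"{BASE_URL}/v1/workspace/{workspace_slug}/chat"
    body = json.dumps({"message": message, "mode": mode}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # Hypothetical key and workspace slug, for illustration only.
    req = build_workspace_chat_request("MY-API-KEY", "my-docs", "Summarize the uploaded PDF.")
    print(req.get_method(), req.full_url)
    # Sending it requires a running instance, e.g.:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.loads(resp.read())["textResponse"])  # assumed response field
```

Building the request separately from sending it keeps the sketch testable without a running server.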

GPT4All

GPT4All runs large language models on your device, making sure that no data leaves your system. It offers access to over 1,000 open-source models and supports both CPU and GPU processing.

- Runs entirely on local hardware with no cloud connection needed.

- Access to 1,000+ open-source language models.

- Built-in document analysis through LocalDocs.

- Complete offline operation.

- Enterprise deployment tools and support.

Ollama

Ollama downloads, manages, and runs LLMs directly on your computer, creating an isolated environment for all model components. It supports multiple platforms and operating systems.

- Complete model management system for downloading and version control.

- Command-line and visual interfaces for different work styles.

- Support for multiple platforms and operating systems.

- Isolated environments for each AI model.

- Direct integration with other applications through a local REST API.
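Ollama exposes a REST API on `http://localhost:11434` by default, which is how other programs talk to it. The sketch below uses only Python's standard library to build a non-streaming `/api/generate` request and to parse the replies from `/api/generate` and `/api/tags` (the endpoint that lists locally downloaded models). The model name `llama3.2` is only an example; use any model you have pulled with `ollama pull`.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(raw: bytes) -> str:
    """With stream=False, the generated text is in the "response" field."""
    return json.loads(raw)["response"]


def list_local_models(raw_tags: bytes) -> list[str]:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [m["name"] for m in json.loads(raw_tags)["models"]]


if __name__ == "__main__":
    req = build_generate_request("llama3.2", "Why is the sky blue?")
    print(req.full_url)
    # Requires a running Ollama server and a pulled model:
    # with urllib.request.urlopen(req) as resp:
    #     print(parse_generate_response(resp.read()))
```

Setting `stream` to `false` returns a single JSON object; by default Ollama streams newline-delimited JSON chunks instead, which a real client would read line by line.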

LM Studio

LM Studio is a desktop application that lets you run AI language models on your computer. It acts as a complete AI workspace, supporting major model types and allowing you to chat with documents through RAG processing.

- Built-in model discovery and download from Hugging Face.

- OpenAI-compatible API server for local AI integration.

- Document chat capability with RAG processing.

- Complete offline operation with no data collection.

- Fine-grained model configuration options.
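Because the local server speaks the OpenAI chat-completions format, any HTTP client can use it. A minimal sketch with Python's standard library, assuming the server is running at LM Studio's default address `http://localhost:1234/v1`; the model identifier is a hypothetical placeholder for whichever model you have loaded.

```python
import json
import urllib.request

# LM Studio's local server listens on http://localhost:1234 by default.
LMSTUDIO_BASE_URL = "http://localhost:1234/v1"


def build_chat_request(
    model: str, user_message: str, temperature: float = 0.7
) -> urllib.request.Request:
    """Build an OpenAI-style /chat/completions request for the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{LMSTUDIO_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(raw: bytes) -> str:
    """OpenAI-style responses carry the text in choices[0].message.content."""
    return json.loads(raw)["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # "my-loaded-model" is a hypothetical identifier; use the one LM Studio shows.
    req = build_chat_request("my-loaded-model", "Explain RAG in one sentence.")
    print(req.full_url)
    # with urllib.request.urlopen(req) as resp:
    #     print(extract_reply(resp.read()))
```

The same server also works with the official `openai` Python package by setting its `base_url` to `http://localhost:1234/v1`.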

Jan

Jan provides a free, open-source alternative to ChatGPT that runs completely offline. It allows you to download popular AI models to run on your own computer or connect to cloud services when needed.

- Complete offline operation with local model running.

- OpenAI-compatible API through the Cortex server.

- Support for both local and cloud AI models.

- Extension system for custom features.

- Multi-GPU support across major manufacturers.
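Since Jan's local API follows the OpenAI format, the same request can target either a local model or a cloud provider just by swapping the base URL. A minimal sketch, assuming Jan's local API server is enabled at `http://127.0.0.1:1337/v1` (verify the address in Jan's settings for your version) and using OpenAI's public endpoint as the example cloud provider. Model names and keys are placeholders.

```python
import json
import urllib.request
from typing import Optional

# Assumed base URLs: Jan's local API server (verify the port in Jan's
# settings) and OpenAI's public API as an example cloud provider.
ENDPOINTS = {
    "local": "http://127.0.0.1:1337/v1",
    "cloud": "https://api.openai.com/v1",
}


def build_request(
    target: str, model: str, prompt: str, api_key: Optional[str] = None
) -> urllib.request.Request:
    """Build an identical chat request for a local or cloud endpoint.

    Cloud providers require an API key; whether the local server does
    depends on your Jan configuration, so the key is optional here.
    """
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{ENDPOINTS[target]}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

Keeping the payload identical means the local-or-cloud choice becomes a one-line configuration change rather than a separate code path.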

Llamafile

Llamafile turns AI models into single executable files, allowing you to run AI without installation or setup. It supports direct GPU acceleration and cross-platform operation.

- Single-file deployment with no external dependencies.

- Built-in OpenAI API compatibility layer.

- Direct GPU acceleration for Apple, NVIDIA, and AMD.

- Cross-platform support for major operating systems.

- Runtime optimization for different CPU architectures.
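A llamafile is started like any other program. The sketch below assembles the command line for running one as a local server. The file name is hypothetical, and the flags shown (`--server`, `--nobrowser`, `--port`, and `-ngl` for offloading layers to the GPU) are assumptions to verify with `--help` on your build. On macOS and Linux the file must first be marked executable (`chmod +x`); on Windows it needs an `.exe` extension.

```python
from typing import List, Optional


def llamafile_server_command(
    path: str, port: int = 8080, gpu_layers: Optional[int] = None
) -> List[str]:
    """Assemble the argv for running a llamafile as a local HTTP server.

    Flags are assumptions to verify against `--help` for your llamafile.
    """
    cmd = [path, "--server", "--nobrowser", "--port", str(port)]
    if gpu_layers is not None:
        # -ngl sets how many model layers to offload to the GPU.
        cmd += ["-ngl", str(gpu_layers)]
    return cmd


if __name__ == "__main__":
    # Hypothetical file name; substitute the llamafile you downloaded.
    cmd = llamafile_server_command("./my-model.llamafile", gpu_layers=999)
    print(" ".join(cmd))
    # To launch it, the server then answers OpenAI-style requests on the port:
    # import subprocess; server = subprocess.Popen(cmd)
```

Passing the arguments as a list rather than a single shell string avoids quoting problems when the file path contains spaces.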

NextChat

NextChat is an open-source package that brings ChatGPT's features to a web and desktop app that you control. It connects to multiple AI services while storing all data locally in your browser.

- Local data storage with no external tracking.

- Custom AI tool creation through Masks.

- Support for multiple AI providers and APIs.

- One-click deployment on Vercel.

- Built-in prompt library and templates.

Benefits

- Privacy: Maintain complete control over your data, ensuring that sensitive information remains within your local environment and does not get transmitted to external servers.

- Offline Accessibility: Use LLMs even without an internet connection, making them ideal for situations where connectivity is limited or unreliable.

- Customization: Fine-tune models to align with specific tasks and preferences, optimizing performance for your unique use cases.

- Cost-Effectiveness: Avoid recurring subscription fees associated with cloud-based solutions, potentially saving costs in the long run.

Conclusion

Running LLMs locally provides numerous benefits, including enhanced privacy, offline accessibility, and cost-effectiveness. Tools like AnythingLLM, GPT4All, Ollama, LM Studio, Jan, Llamafile, and NextChat offer robust solutions for running LLMs locally, each with its unique features and advantages. By leveraging these tools, users can maintain control over their data and customize models to meet their specific needs.
