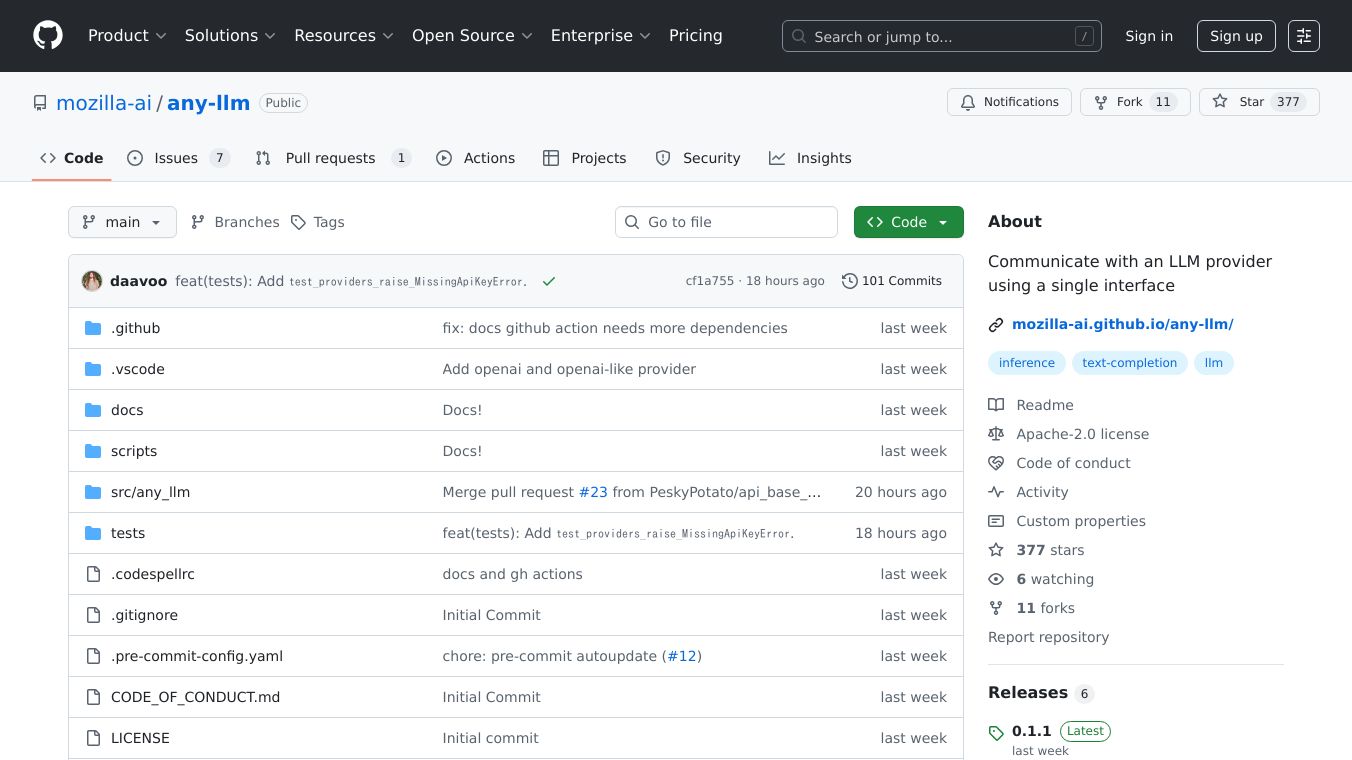

any-llm

any-llm is a Python library designed to simplify interactions with different Large Language Model (LLM) providers. It offers a unified interface that allows developers to communicate with various LLM providers seamlessly, addressing the fragmentation in the LLM provider ecosystem. By providing a single, consistent interface, any-llm makes it easier to switch between different models and providers with minimal changes to the code.

Benefits

any-llm offers several key benefits that make it a valuable tool for developers working with LLMs:

- Simple, Unified Interface: With any-llm, developers can use one function for all providers. Switching models or providers is as easy as changing a string, making it a flexible and efficient solution.

- Developer-Friendly: The library includes full type hints for better IDE support and clear, actionable error messages, helping developers to write and debug code more effectively.

- Leverages Official Provider SDKs: By using official provider SDKs, any-llm reduces the maintenance burden and ensures compatibility with the latest features and updates from providers.

- Framework-Agnostic: any-llm can be used across different projects and use cases, making it a versatile tool for any development environment.

- Actively Maintained: Used in Mozilla's own products, any-llm is actively maintained, ensuring continued support and updates.

- No Proxy or Gateway Server Required: any-llm eliminates the need to set up additional services to communicate with LLM providers, simplifying the development process.

Use Cases

any-llm is useful in various scenarios where developers need to interact with multiple LLM providers. Some common use cases include:

- Developing AI Applications: any-llm can be used to build AI applications that require interactions with different LLM providers, such as chatbots, virtual assistants, and content generation tools.

- Research and Experimentation: Researchers and developers can use any-llm to experiment with different models and providers, comparing their performance and suitability for specific tasks.

- Integration with Existing Systems: any-llm can be integrated into existing systems and applications, allowing developers to leverage the power of LLMs without significant changes to their codebase.
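
As a rough illustration of the experimentation use case, the sketch below sends one prompt to several models and collects the replies. The helper name `compare_models` and its `complete` parameter are hypothetical and not part of any-llm. The function only assumes that `complete` accepts `model` and `messages` keyword arguments and returns a response shaped like the one in the Basic Usage example (`response.choices[0].message.content`), so `any_llm.completion` could be passed in.

```python
from typing import Any, Callable


def compare_models(
    complete: Callable[..., Any],
    models: list[str],
    prompt: str,
) -> dict[str, str]:
    """Send the same prompt to each model and collect the text replies.

    `complete` is injected (hypothetical design choice) so the helper can be
    used with any_llm.completion or with a stub in tests.
    """
    messages = [{"role": "user", "content": prompt}]
    replies: dict[str, str] = {}
    for model in models:
        # Only the model string changes between calls.
        response = complete(model=model, messages=messages)
        replies[model] = response.choices[0].message.content
    return replies


# Possible usage (requires any-llm, the provider extras, and valid API keys):
#   from any_llm import completion
#   replies = compare_models(completion, ["mistral/mistral-small-latest"], "Hello!")
```

Passing the completion function as an argument keeps the helper independent of any particular provider and makes it easy to exercise offline with a fake.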

Quickstart

Requirements

- Python 3.11 or newer

- An API key for each LLM provider you choose to use.

Installation

When installing with pip, include the providers you plan to use as extras, or use the `all` option to install support for every provider any-llm supports.

    pip install 'any-llm-sdk[mistral,ollama]'

Make sure the appropriate API key environment variable is set for your provider. Alternatively, you can pass the `api_key` parameter when making a completion call instead of setting an environment variable.

    export MISTRAL_API_KEY="YOUR_KEY_HERE"  # or OPENAI_API_KEY, etc.

Basic Usage

The `provider_id` portion of the model string should be specified according to the provider's documentation. The `model_id` portion is passed directly to the provider internals. To find out which model ids are available for a provider, refer to that provider's documentation.

    from any_llm import completion
    import os

    # Make sure you have the appropriate environment variable set
    assert os.environ.get('MISTRAL_API_KEY')

    # Basic completion
    response = completion(
        model="mistral/mistral-small-latest",  # <provider_id>/<model_id>
        messages=[{"role": "user", "content": "Hello!"}]
    )
    print(response.choices[0].message.content)
